Responsible AI: What It Means & How To Achieve It

The information age has leapt forward with the explosive rise of generative AI. Capabilities like natural language processing, image generation, and code automation are now mainstream — driving the business goals of winning customers, enhancing productivity, and reducing costs across every sector.

New large language models are emerging almost daily, and existing models are being optimized in a frantic race to the top. There seems to be no stopping the AI boom. McKinsey estimates that generative AI could contribute between $2.6 and $4.4 trillion annually to the global economy, underscoring its potential to revolutionize entire industries.

But while AI excites the world, and business leaders accelerate its integration into every facet of their operations, this powerful technology also brings significant risks. Hallucinations, bias, misuse, and data security concerns are technical challenges that must be tackled, alongside societal fears of:

- Layoffs and changes in work structures

- Loss of control and the unknown

In this article, we’ll consider approaches to addressing these concerns that can lead to the best possible outcomes for AI and modern society.

What does responsible AI mean?

Responsible AI refers to a deliberate approach to designing, developing, and using AI systems in ways that are safe, trustworthy, and ethical, with the aim of addressing AI risks and concerns.

The NIST AI Risk Management Framework outlines the core concepts of responsible AI as being rooted in human centricity, social responsibility, and sustainability.

(Note: although "responsible AI" usually refers to the concept, there is also a global, member-driven non-profit by that name: RAI, the Responsible AI Institute.)

Drivers and reasons for responsible AI

Responsible AI involves aligning the decisions about AI system design, development, and use with intended aims and values. How? By getting organizations to think more critically about the context and potential impacts of the AI systems they are deploying. This means:

- Encouraging thoughtful evaluation of the impacts of AI on people and ecosystems.

- Considering how design and deployment choices affect society — now and in the future.

- Ensuring that AI systems are equitable, accountable, and beneficial to all stakeholders.

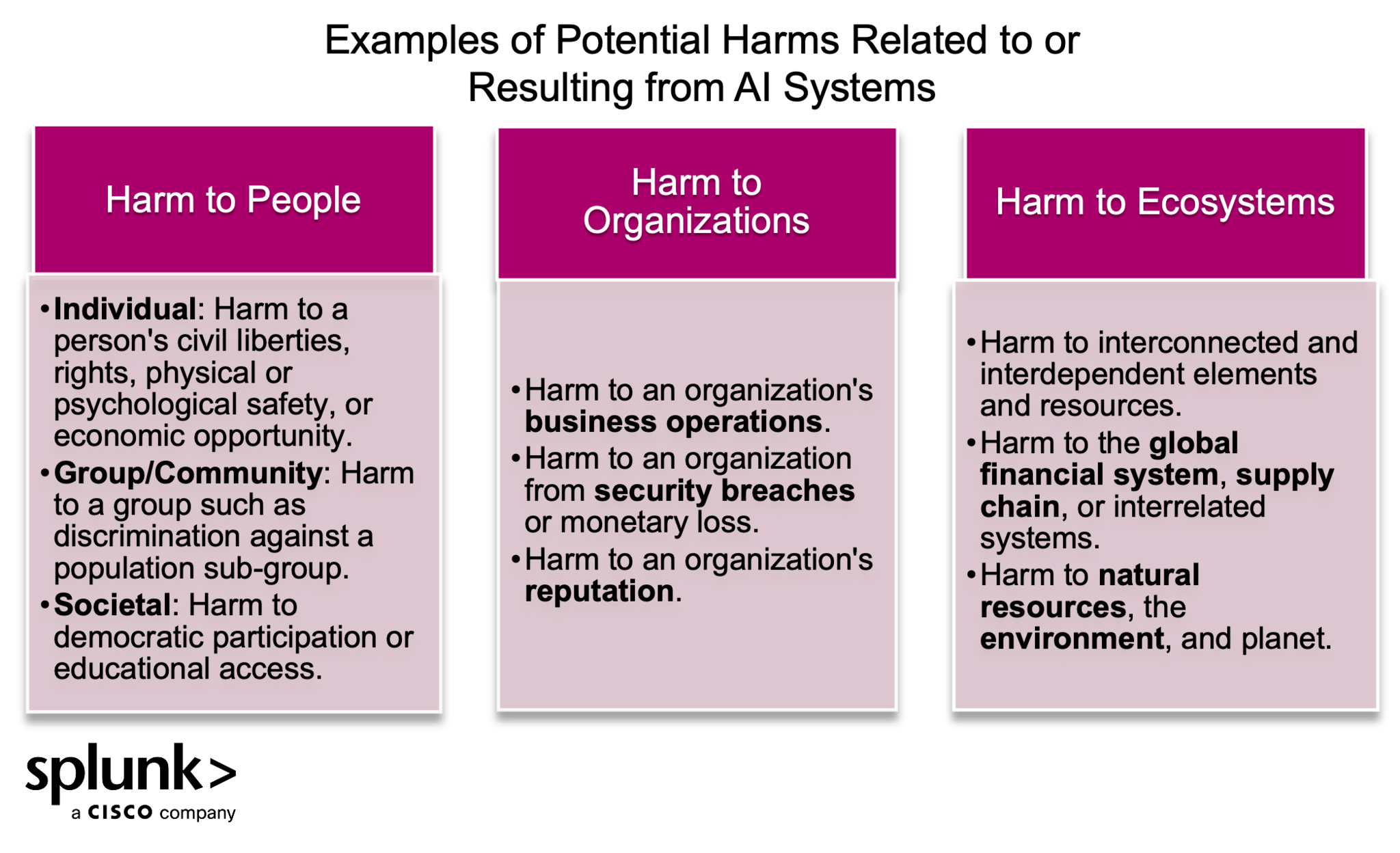

Responsible AI seeks to mitigate negative risks to people, organizations, and ecosystems and instead contribute to their benefit.

(Source: NIST AI RMF)

The hidden risks in developing AI systems

Configuring and training AI models involves great complexity and massive amounts of data and effort, so some risks are inherent to the process of developing AI systems itself. These AI-specific risks need to be addressed through responsible AI practices. Examples include:

- Data used for building AI systems may not be a true or appropriate representation of the context or intended use of the AI system.

- Datasets used to train AI systems may become detached from their original and intended context.

- Harmful bias, statistical uncertainty, limited reproducibility, and other data quality issues can affect AI system trustworthiness.

- Difficulty in performing regular AI-based software testing or determining what to test.

- Higher degree of difficulty in predicting failure modes for emergent properties of large-scale pre-trained models.

- Computational and environmental costs of developing AI systems.
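Two of the risks above, unrepresentative data and harmful bias, can be partly checked before any training happens. As a minimal sketch, assuming each training record carries a demographic or contextual attribute and that you have reference shares for the population the system is meant to serve, the Python below flags groups whose share of the data strays from the reference by more than a tolerance. The function name `representation_gaps`, the `region` attribute, the reference shares, and the 5-percentage-point tolerance are all hypothetical, and a representation check like this is one narrow signal, not a full fairness audit.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares, tolerance=0.05):
    """Compare each group's share of the dataset with its reference share.

    Returns {group: (observed_share, expected_share)} for every group whose
    observed share differs from the expected share by more than `tolerance`
    (an absolute difference in proportion; 0.05 = 5 percentage points).
    Groups present in the data but missing from the reference are ignored.
    """
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = (round(observed, 3), expected)
    return gaps


# Hypothetical training records and reference population shares.
training_data = (
    [{"region": "urban"}] * 70
    + [{"region": "suburban"}] * 24
    + [{"region": "rural"}] * 6
)
population = {"urban": 0.55, "suburban": 0.25, "rural": 0.20}

print(representation_gaps(training_data, "region", population))
```

With this sample, `urban` (70% against an expected 55%) and `rural` (6% against 20%) are flagged, while `suburban`, at 24% against 25%, falls within tolerance.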

Principles of responsible and ethical AI

To mitigate these risks, organizations must adopt ethical principles that embed responsible AI in every step of AI system design, development, and use. The international standards body ISO lists several key principles of AI ethics intended to counter potential AI harms, including:

- Fairness: Datasets used for training the AI system must be given careful consideration to avoid discrimination.

- Transparency: AI systems should be designed in a way that allows users to understand how the algorithms work.

- Non-maleficence: AI systems should avoid harming individuals, society, or the environment.

- Accountability: Developers, organizations, and policymakers all must make sure that AI is developed and used responsibly.

- Privacy: AI must protect people’s personal data, which involves developing mechanisms for individuals to control how their data is collected and used.

- Robustness: AI systems should be secure — that is, resilient to errors, adversarial attacks, and unexpected inputs.

- Inclusiveness: Engaging with diverse perspectives helps identify potential ethical concerns of AI and ensures a collective effort to address them.

How to build responsible AI

To adopt these responsible AI principles, an organization will need to put in place mechanisms for regulating the design, development, and operation of AI systems. The drivers for such mechanisms can be:

- Self-generated through policies, processes, and technology

- Encouraged by industry peers through standards

- Pushed by government bodies through laws and regulations

Frameworks and standards

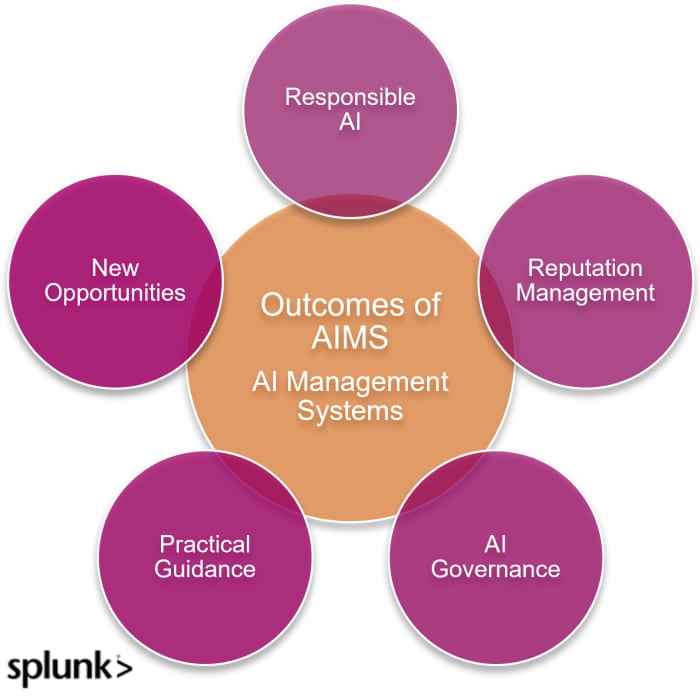

Organizations can choose a framework like the NIST AI RMF or adopt a standard such as ISO/IEC 42001 to ensure the ethical use of AI throughout its lifecycle. This involves:

- Setting policies and objectives related to responsible AI in your organization.

- Establishing associated processes and support elements related to the development, provision, and use of AI systems.

(Related reading: AI risk frameworks and AI development frameworks.)

Organizational culture

For responsible AI to succeed, it must be embedded within the enterprise culture. That starts with leadership demonstrating its commitment through:

- Their policy directives to the rest of the staff

- Contractual arrangements with relevant partners and suppliers

Risk management

Risk management is at the heart of responsible AI: organizations are expected to conduct comprehensive AI risk and impact assessments to identify potential risks to individuals, society, and the environment. Only then can they develop and implement strategies to minimize negative impacts, such as:

- Maintaining transparency in AI decision-making processes.

- Implementing robust security and privacy controls.

- Complying with applicable laws and regulations.
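Impact assessments are often recorded in a risk register, with each risk scored so that mitigation effort goes to the most serious items first. The sketch below uses a likelihood-times-impact score on 1-to-5 scales, a common risk-matrix convention rather than something the NIST AI RMF or ISO/IEC 42001 prescribes. The `Risk` class, the `prioritize` helper, the threshold of 12, and the sample risks are hypothetical illustrations.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    affected: str    # e.g. "individuals", "society", "environment"
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Simple risk-matrix score; higher means more urgent to mitigate.
        return self.likelihood * self.impact


def prioritize(risks, threshold=12):
    """Return risks scoring at or above the threshold, highest score first."""
    return sorted(
        (risk for risk in risks if risk.score >= threshold),
        key=lambda risk: risk.score,
        reverse=True,
    )


# Hypothetical register entries for illustration only.
register = [
    Risk("Model denies loans to a protected group at a higher rate", "individuals", 3, 5),
    Risk("Chatbot hallucinates policy details to customers", "individuals", 4, 3),
    Risk("Training run exceeds planned energy budget", "environment", 2, 2),
]

for risk in prioritize(register):
    print(f"{risk.score:>2}  {risk.affected:<12} {risk.description}")
```

Here the lending-bias risk (score 15) and the hallucination risk (score 12) cross the threshold, while the energy-budget risk (score 4) is kept in the register but not escalated.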

Technical controls and governance

From a technology governance perspective, organizations can validate AI systems against a set of internationally recognized principles through standard tests.

A useful tool is Singapore’s AI Verify testing framework. Another tool for responsible AI from the UK AI Security Institute is Inspect, an open-source Python framework for evaluating LLMs.

Examples of technical controls that can mitigate risks related to responsible AI include:

- Actively assessing machine learning training data for potential biases, including detecting where bias might be introduced during data generation, collection, processing, or annotation.

- Establishing processes that let interested parties confidentially report concerns about AI systems and their adverse impacts.

- Tracking and reporting resource utilization by AI systems including computing resources and associated environmental impacts.

- Monitoring AI system event logs to detect operation outside intended parameters that could result in undesirable performance or impacts.

- Tackling AI-specific information threats related to data poisoning and model inversion.
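The event-log monitoring control can start as simply as comparing logged metrics against a declared operating envelope. The Python sketch below assumes, hypothetically, that an AI service writes one JSON event per line with `latency_ms` and `confidence` fields; the `OPERATING_LIMITS` values and the 10% alert rate are placeholders you would replace with your system's documented operating parameters. In practice this logic would usually live in whatever log analytics or observability platform you already use.

```python
import json

# Hypothetical operating envelope: metric name -> (minimum, maximum) allowed.
OPERATING_LIMITS = {
    "latency_ms": (0, 2000),
    "confidence": (0.6, 1.0),
}


def out_of_bounds(events, limits):
    """Yield (event_id, metric, value) for each metric outside its limits."""
    for event in events:
        for metric, (low, high) in limits.items():
            value = event.get(metric)
            if value is None:
                continue  # metric not logged for this event
            if not low <= value <= high:
                yield event["id"], metric, value


def should_alert(events, limits, max_violation_rate=0.1):
    """Alert when the share of events with any violation exceeds the rate."""
    if not events:
        return False
    violating = {event_id for event_id, _, _ in out_of_bounds(events, limits)}
    return len(violating) / len(events) > max_violation_rate


# Hypothetical log lines, one JSON event per line.
log_lines = [
    '{"id": 1, "latency_ms": 350, "confidence": 0.92}',
    '{"id": 2, "latency_ms": 4100, "confidence": 0.88}',
    '{"id": 3, "latency_ms": 420, "confidence": 0.41}',
    '{"id": 4, "latency_ms": 510, "confidence": 0.95}',
]
events = [json.loads(line) for line in log_lines]

for violation in out_of_bounds(events, OPERATING_LIMITS):
    print("outside operating parameters:", violation)
print("alert:", should_alert(events, OPERATING_LIMITS))
```

With the sample events, the sketch reports event 2 (latency above 2,000 ms) and event 3 (confidence below 0.6). Because half of the events violate a limit, well above the 10% rate, the alert fires.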

The global regulatory landscape

Gartner predicts that by 2026, half of governments worldwide will enforce the use of responsible AI through regulations and policy. Leading the charge is the EU AI Act, the world's first comprehensive, legally binding regulation on AI. It takes a risk-based approach:

- Unacceptable risks are prohibited.

- High-risk AI systems require rigorous assessment.

- Transparency is mandatory for general-purpose AI like LLMs.

The goals of this Act are clear: AI systems in the European Union must be safe, transparent, traceable, non-discriminatory, environmentally responsible, and overseen by humans — not left entirely to automation.

Compliance with the EU AI Act or its forthcoming codes of practice can help your organization prove its commitment to ethical AI.

Final thoughts

In the last two years, generative AI has been propelled to the top of strategic agendas for most digital-led organizations, but challenges persist due to risks arising from the evolving technology, societal concerns, and stringent compliance requirements. By investing in responsible AI, companies can build trust with their internal and external stakeholders, thereby strengthening their credibility and differentiating themselves from competitors.

According to PwC, responsible AI isn’t a one-time exercise but an ongoing commitment to addressing inherent risks at every step of developing, deploying, using, and monitoring AI-based technologies. Those who embed responsibility at the core of their AI strategies won’t just comply with regulations; they’ll lead the way in innovation, trust, and long-term value creation.

See an error or have a suggestion? Please let us know by emailing splunkblogs@cisco.com.

This posting does not necessarily represent Splunk's position, strategies or opinion.


About Splunk

The world’s leading organizations rely on Splunk, a Cisco company, to continuously strengthen digital resilience with our unified security and observability platform, powered by industry-leading AI.

Our customers trust Splunk’s award-winning security and observability solutions to secure and improve the reliability of their complex digital environments, at any scale.