Writing Ansible Playbooks for New Terraform Servers

Over the past few years, cloud computing has made it possible to manage software and hardware components dynamically and on demand. Nowadays, we can define our desired infrastructure in as little as a few lines of code and provision real servers on cloud providers like AWS or Azure.

Terraform is an open-source infrastructure-as-code (IaC) tool that has become the de facto solution for the provisioning side of managing those components. We describe our high-level configuration topology in HashiCorp Configuration Language (HCL). Terraform then generates an execution plan and, once we approve it, does the hard work of connecting to the cloud providers and provisioning the requested services.

This setup shows the true power of cloud computing: it lets us manage our software and hardware requirements more efficiently and predictably. Because the configuration artifacts can be stored in a version control system, the team can review, test and evaluate them before they’re merged.

Ansible Plus Terraform For Resilience and Speed

However, having the infrastructure set up and ready to go doesn’t necessarily mean our servers and services are working; it’s only one piece of the puzzle. We still need to install software and configure the system with secure defaults. This is where Ansible comes in.

Ansible is another open-source tool, used for software provisioning, configuration management and application deployment. Put simply, it takes over a newly created server instance and installs the required software based on a recipe book called a playbook. The result is a fully operational server built by a predictable and traceable pipeline.

Now that we have a general view of what Terraform and Ansible are and what they do, let’s see a practical example of how we can pair those tools together to set up and deploy a new server with Nginx in the cloud.

For the purposes of this tutorial, I’ll be using the latest Terraform binary installed using this guide. I’ve also installed Ansible using this installation guide. For the cloud provider, I’ll be using Digital Ocean – although the concepts can be applied to others as well. (Note that if you want to follow along with this tutorial, creating new Droplets will cost you some money, so beware.) The code is also available on GitHub.

Provisioning with Terraform

We start by provisioning a simple Ubuntu server with Terraform and then install Nginx on it with Ansible.

To allow Terraform to interact with Digital Ocean, we need an API token and the IDs of the SSH keys registered with our account:

1) Get a Digital Ocean API token

2) Create a .env file and place the token there

$ touch .env
$ echo "export DIGITALOCEAN_TOKEN=<token>" >> .env

3) Find the IDs of the SSH keys needed for the connection. Make sure you have jq installed.

$ chmod +x find_ssh_keys.sh
$ source .env && ./find_ssh_keys.sh
1122112

Here, find_ssh_keys.sh is a simple script that prints the SSH key IDs:

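#!/usr/bin/env bash
set -euo pipefail

# Fail early when the token is missing; ${VAR:-} avoids an "unbound variable" error under set -u
if [[ -z "${DIGITALOCEAN_TOKEN:-}" ]]; then
  echo "DIGITALOCEAN_TOKEN is empty. Please provide it." >&2
  exit 1
fi

# Ask the Digital Ocean API for the account's SSH keys and print only their numeric IDs
curl -X GET \
  -s \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $DIGITALOCEAN_TOKEN" \
  "https://api.digitalocean.com/v2/account/keys" | jq ".ssh_keys[].id"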
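Later on, we pass this ID to Terraform as the ssh_keys variable. Terraform also reads any environment variable named TF_VAR_<name> as the value of the input variable <name>, so one optional convenience is to store the first ID in .env next to the token. The following is a minimal sketch; save_first_key_id is a hypothetical helper, not part of the original tutorial:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read SSH key IDs (one per line) from stdin and append the
# first one to an env file as TF_VAR_ssh_keys. Terraform reads TF_VAR_<name>
# environment variables as values for the matching input variables.
save_first_key_id() {
  local env_file="$1"
  local first_id=""
  # Keep only the first line of input; "|| true" tolerates a missing trailing newline
  IFS= read -r first_id || true
  # Reject anything that is not a plain numeric ID, including an empty result
  if [[ ! "$first_id" =~ ^[0-9]+$ ]]; then
    echo "expected a numeric SSH key ID, got: '${first_id}'" >&2
    return 1
  fi
  echo "export TF_VAR_ssh_keys=${first_id}" >> "$env_file"
}
```

With the helper sourced into the current shell, `source .env && ./find_ssh_keys.sh | save_first_key_id .env` records the ID; after sourcing .env again, the `--var 'ssh_keys=...'` flag used in the Terraform commands becomes optional. Each run appends another line, and the last export wins when .env is sourced.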

Now we’re ready to define our Droplet using Terraform. First, we need to configure the provider. Create a new file named provider.tf and add the following lines:

$ touch provider.tf

$ echo 'provider "digitalocean" {}' > provider.tf

The provider accepts a token argument, but when it’s omitted, the provider reads the DIGITALOCEAN_TOKEN environment variable instead. Because we export that variable from the .env file, we can leave the provider block empty.

Next, we need to describe our Droplet. For this tutorial, we’re going to use a single ubuntu-18-04 Droplet with some common defaults. Create a new file named main.tf and add the following lines:

$ touch main.tf
$ cat <<'ENDL' > main.tf
# The quoted 'ENDL' delimiter stops the shell from expanding anything inside the heredoc
resource "digitalocean_droplet" "www-example" {
  image              = var.image
  name               = var.name
  region             = var.region
  size               = var.size
  backups            = var.with_backups
  monitoring         = var.with_monitoring
  ipv6               = var.with_ipv6
  private_networking = var.with_private_networking
  resize_disk        = var.with_resize_disk
  ssh_keys           = [var.ssh_keys]
}
ENDL

We declared a digitalocean_droplet resource named www-example and set its arguments from variables, using the argument names documented by the official provider. Where do we specify those variables, you ask? In another file, named variables.tf. Let’s create that now:

$ touch variables.tf
$ cat <<'ENDL' > variables.tf
variable "ssh_keys" {}

variable "image" {
  description = "The Droplet image id"
  default     = "ubuntu-18-04-x64"
}

variable "name" {
  description = "The name of the Droplet"
  default     = "nginx"
}

variable "region" {
  description = "The region of the Droplet"
  default     = "LON1"
}

variable "size" {
  description = "The instance size"
  default     = "1gb"
}

variable "with_backups" {
  description = "Boolean controlling if backups are made"
  default     = false
}

variable "with_monitoring" {
  description = "Boolean controlling whether monitoring agent is installed"
  default     = false
}

variable "with_ipv6" {
  description = "Boolean controlling if IPv6 is enabled"
  default     = false
}

variable "with_private_networking" {
  description = "Boolean controlling if private networks are enabled"
  default     = false
}

variable "with_resize_disk" {
  description = "Whether to increase the disk size when resizing a Droplet"
  default     = true
}
ENDL

When we create the Droplet, Digital Ocean assigns it a unique public IP address so we can connect to it. To expose that address from Terraform, we declare an output in a file named outputs.tf:

$ touch outputs.tf
$ cat <<'ENDL' > outputs.tf
output "ip" {
  description = "The Droplet ipv4 address"
  value       = digitalocean_droplet.www-example.ipv4_address
}
ENDL

Now we’re ready to apply the deployment.

First, we need to initialize Terraform for our project. This will read the existing configuration from the current folder and download the necessary plugins and providers. If something’s amiss, it will spit out an error.

$ terraform init

Initializing the backend…
…
* provider.digitalocean: version = "~> 1.10"

Terraform has been successfully initialized!

That went OK. Next, we apply the configuration. The terraform apply command first computes an execution plan, shows which components will be created, changed or destroyed, and waits for our approval before making any changes:

$ source .env && terraform apply --var 'ssh_keys=1122112'

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # digitalocean_droplet.www-example will be created
  + resource "digitalocean_droplet" "www-example" {
      + backups              = false
      + created_at           = (known after apply)
      + disk                 = (known after apply)
      + id                   = (known after apply)
      + image                = "ubuntu-18-04"
      + ipv4_address         = (known after apply)
      + ipv4_address_private = (known after apply)
      + ipv6                 = false
      + ipv6_address         = (known after apply)
      + ipv6_address_private = (known after apply)
      + locked               = (known after apply)
      + memory               = (known after apply)
      + monitoring           = false
      + name                 = "nginx"
      + price_hourly         = (known after apply)
      + price_monthly        = (known after apply)
      + private_networking   = false
      + region               = "lon1"
      + resize_disk          = true
      + size                 = "1gb"
      + ssh_keys             = [
          + "1122112",
        ]
      + status               = (known after apply)
      + urn                  = (known after apply)
      + vcpus                = (known after apply)
      + volume_ids           = (known after apply)
    }

Plan: 1 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value:

Note that because we use a recent version of Terraform, there’s no need to run terraform plan beforehand: apply shows the plan and asks for confirmation first. Also, note that we passed the ID of the SSH key we retrieved from the API earlier. For a more secure way of handling SSH keys, a secrets management service such as CyberArk Conjur or HashiCorp Vault may be more appropriate. We could also have used a data source to look up, by name, an SSH key that’s already registered with Digital Ocean. For example:

data "digitalocean_ssh_key" "myKey" {
  name = "theo"
}

...

ssh_keys = [data.digitalocean_ssh_key.myKey.id]

For now, we’re simply reusing the SSH key that’s already registered with our account.

The terraform apply command first displays some useful information about the requested infrastructure components and awaits our decision. Take some time to verify that these are indeed the resources we requested, then enter yes:

Enter a value: yes

digitalocean_droplet.www-example: Creating... digitalocean_droplet.www-example: Still creating... [10s elapsed] digitalocean_droplet.www-example: Still creating... [20s elapsed] digitalocean_droplet.www-example: Still creating... [30s elapsed] digitalocean_droplet.www-example: Creation complete after 35s [id=166857324]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Outputs:

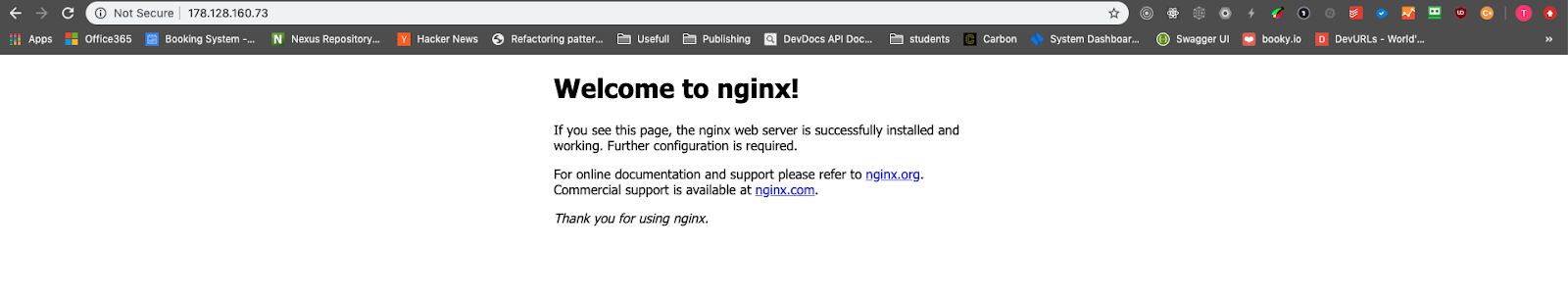

ip = 178.128.160.73

The preceding steps create a Droplet and return its IP address as an output. This covers only the infrastructure components; nothing has been installed or configured on the server yet. Let’s continue by exploring how we can use Ansible to take over the application deployment and run playbooks.

Configuration Management with Ansible

Ansible works by using a playbook, a file containing a declarative description of our configuration state. In our case, we just need to install Nginx. So, let’s create a file named playbook.yml in the same folder as the Terraform files, with the following content:

$ touch playbook.yml
$ cat <<'ENDL' > playbook.yml
---
- hosts: all
  become: yes
  become_user: root
  become_method: sudo
  tasks:
    - name: Install nginx
      apt:
        name: nginx
        state: latest
        # Refresh the package index first; a freshly created server's cache may be out of date
        update_cache: yes
    - name: Restart Nginx
      service:
        name: nginx
        state: restarted
ENDL

For convenience, we also add an ansible.cfg file that defines common parameters for the ansible-playbook command. Create one now with the following contents:

$ touch ansible.cfg
$ cat <<ENDL > ansible.cfg
[defaults]
host_key_checking = False
remote_user = root
ENDL

Ideally, we would retrieve the SSH keys used to run the playbook from a secrets store and delete them once the playbook has finished.

Now, the easiest way to run the Ansible playbook and configure the server after the Droplet is created is the local-exec provisioner, which simply runs the specified command on the local machine (the one we used to run Terraform). In our example, we can add the following block inside the Droplet resource declaration in main.tf:

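provisioner "local-exec" {
  # Inside its own resource, a provisioner refers to the Droplet through self.
  # The trailing comma makes Ansible treat the value as a host list, not an inventory file.
  command = "sleep 120; ansible-playbook -i '${self.ipv4_address},' playbook.yml"
}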

However, this approach has a major flaw: it relies on an arbitrary sleep, which is a guess rather than a deterministic readiness check. For example, the following logs show that the local-exec command is triggered as soon as the Droplet is created. At that point, the Droplet may or may not be ready to accept connections. And if it is ready right away, we still have to wait two minutes before the playbook runs.

digitalocean_droplet.www-example: Still creating... [40s elapsed]
digitalocean_droplet.www-example: Provisioning with 'local-exec'...
digitalocean_droplet.www-example (local-exec): Executing: ["/bin/sh" "-c" "sleep 120; ansible-playbook -i '142.93.46.82,' playbook.yml"]
digitalocean_droplet.www-example: Still creating... [50s elapsed]
digitalocean_droplet.www-example: Still creating... [1m0s elapsed]
digitalocean_droplet.www-example: Still creating... [1m10s elapsed]
digitalocean_droplet.www-example: Still creating... [1m20s elapsed]
digitalocean_droplet.www-example: Still creating... [1m30s elapsed]
digitalocean_droplet.www-example: Still creating... [1m40s elapsed]
digitalocean_droplet.www-example: Still creating... [1m50s elapsed]
digitalocean_droplet.www-example: Still creating... [2m0s elapsed]
digitalocean_droplet.www-example: Still creating... [2m10s elapsed]
digitalocean_droplet.www-example: Still creating... [2m20s elapsed]
digitalocean_droplet.www-example: Still creating... [2m30s elapsed]
digitalocean_droplet.www-example: Still creating... [2m40s elapsed]

PLAY [all] *********************************************************************

TASK [Gathering Facts] *********************************************************
ok: [178.128.160.73]

TASK [Install nginx] ***********************************************************
[WARNING]: Could not find aptitude. Using apt-get instead
changed: [178.128.160.73]

TASK [Restart Nginx] ***********************************************************
changed: [178.128.160.73]

PLAY RECAP *********************************************************************
178.128.160.73 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
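One way to replace the fixed sleep with an actual readiness check is to poll the Droplet’s SSH port until it accepts connections. The following is a minimal sketch of a hypothetical wait_for_port helper (not from the original tutorial). It assumes bash, whose /dev/tcp redirection opens a TCP connection, and the timeout command from GNU coreutils. An open port only shows that sshd is listening, not that the server has finished booting, so treat this as a better heuristic rather than a guarantee:

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll until HOST:PORT accepts a TCP connection, or give up
# after TIMEOUT seconds (default 300), checking every INTERVAL seconds (default 5).
# Requires bash (for /dev/tcp) and the `timeout` command from GNU coreutils.
wait_for_port() {
  local host="$1" port="$2" timeout_s="${3:-300}" interval_s="${4:-5}"
  local deadline=$(( SECONDS + timeout_s ))
  while (( SECONDS < deadline )); do
    # Each attempt is capped at 5 seconds so an unresponsive host cannot stall the loop;
    # the child bash exits (closing the connection) as soon as the connect succeeds.
    if timeout 5 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null; then
      return 0
    fi
    sleep "$interval_s"
  done
  echo "timed out after ${timeout_s}s waiting for ${host}:${port}" >&2
  return 1
}
```

Saved as a hypothetical wait_for_port.sh next to main.tf, it could replace `sleep 120` in the local-exec command with `source ./wait_for_port.sh && wait_for_port ${self.ipv4_address} 22`, together with `interpreter = ["bash", "-c"]` in the provisioner, because the default /bin/sh may not support the bash features the helper uses.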

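A slightly better way to overcome this issue is to add a remote-exec provisioner, containing a simple echo command, before the local-exec one. Terraform runs a resource’s provisioners in the order they’re declared, and remote-exec keeps retrying its SSH connection until it succeeds or the connection timeout (five minutes by default) expires. So by the time local-exec runs, the Droplet is known to accept SSH connections: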

provisioner "remote-exec" {
  inline = ["echo 'Hello World'"]

  connection {
    type        = "ssh"
    user        = "root"
    host        = self.ipv4_address
    private_key = file(var.ssh_key_private)
  }
}

You also need to define a new variable, var.ssh_key_private, that points to the private SSH key so the connection can be established. This approach is slightly better, but it can be more confusing to read, because the step doesn’t actually do anything useful on the server. If we add it, we should document why it’s required.
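The new variable can be declared the same way as the others. A minimal sketch, appending it to variables.tf with the same heredoc pattern used earlier; the description text is our own, and no default is set (an assumption), so the key path has to be passed explicitly:

```shell
# Append a declaration for the private key path used by the remote-exec connection block.
# No default is set, so Terraform will require a value (for example via --var).
cat <<'ENDL' >> variables.tf

variable "ssh_key_private" {
  description = "Path to the private SSH key matching the public key registered with Digital Ocean"
}
ENDL
```

For example, `source .env && terraform apply --var 'ssh_keys=1122112' --var "ssh_key_private=$HOME/.ssh/id_rsa"`, where the key path is only a placeholder for wherever your private key lives.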

A different path is also available. We can leverage community plugins such as terraform-provisioner-ansible or terraform-provider-ansible to add the steps needed to run Ansible playbooks, including in local mode on the server itself. Each one needs to be evaluated independently to see whether, and how, it satisfies our business requirements.

As a side note, we can also go the other way around and use Ansible to manage Terraform. For example, we could set up a server that runs Ansible deployments and use Ansible’s Terraform module to apply Terraform plans and run playbook roles as part of the same pipeline.
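As a rough sketch of that reverse approach, assuming the community.general collection is installed and DIGITALOCEAN_TOKEN is exported, a hypothetical terraform_playbook.yml could apply the Terraform configuration in this folder, register the Droplet’s ip output as a host, and wait for SSH with wait_for_connection instead of a fixed sleep. The file name and the droplets group are our own choices, and the local-exec provisioner should be removed from main.tf first so that Terraform doesn’t also trigger Ansible:

```shell
# Write a hypothetical playbook in which Ansible drives Terraform, then configures the new host.
cat <<'ENDL' > terraform_playbook.yml
---
- hosts: localhost
  connection: local
  gather_facts: no
  tasks:
    - name: Apply the Terraform configuration in this folder
      community.general.terraform:
        project_path: "{{ playbook_dir }}"
        state: present
        force_init: true
        variables:
          ssh_keys: "1122112"
      register: tf

    - name: Add the new Droplet to an in-memory inventory group
      ansible.builtin.add_host:
        name: "{{ tf.outputs.ip.value }}"
        groups: droplets

- hosts: droplets
  gather_facts: no
  become: yes
  tasks:
    - name: Wait until the Droplet accepts SSH connections
      ansible.builtin.wait_for_connection:
        timeout: 300

    - name: Install nginx
      ansible.builtin.apt:
        name: nginx
        state: latest
        update_cache: yes
ENDL
```

It would then be run with `source .env && ansible-playbook terraform_playbook.yml`, relying on Ansible’s implicit localhost for the first play and on the ansible.cfg created earlier for the root user and host key settings.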

As you can see, there are plenty of options for using both technologies together. Which one fits best depends on your requirements.

Before we close the tutorial, let’s not forget to destroy the server:

$ terraform destroy --var 'ssh_keys=1122112'
digitalocean_droplet.www-example: Refreshing state... [id=166869916]

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  - destroy
…
digitalocean_droplet.www-example: Destroying... [id=166869916]
digitalocean_droplet.www-example: Still destroying... [id=166869916, 10s elapsed]
digitalocean_droplet.www-example: Still destroying... [id=166869916, 20s elapsed]
digitalocean_droplet.www-example: Destruction complete after 23s

Destroy complete! Resources: 1 destroyed

The Next Steps

In this tutorial, we went through the process of provisioning a server with Terraform and then installing and configuring Nginx on it with Ansible. We have seen a few ways to combine those tools and get the best of both worlds.

The process of defining and executing deployments has become more streamlined, but it still requires careful planning and management. It’s also important to have a way of getting notified when a process or a pipeline execution fails. For that, we can use Splunk On-Call Integrations to help us stay on top of incidents or anomalies.

Once you’ve built out Ansible playbooks for your Terraform servers, you need a collaborative alerting and on-call platform to help improve visibility into these operations. Try out Splunk On-Call in a free 14-day trial to see for yourself how to get started.

About the author

Theo Despoudis is a Senior Software Engineer and an experienced mentor. He has a keen interest in Open Source Architectures, Cloud Computing, best practices and functional programming. He occasionally blogs on several publishing platforms and enjoys creating projects from inspiration.

About Splunk

The world’s leading organizations rely on Splunk, a Cisco company, to continuously strengthen digital resilience with our unified security and observability platform, powered by industry-leading AI.

Our customers trust Splunk’s award-winning security and observability solutions to secure and improve the reliability of their complex digital environments, at any scale.