Our perspective on progress

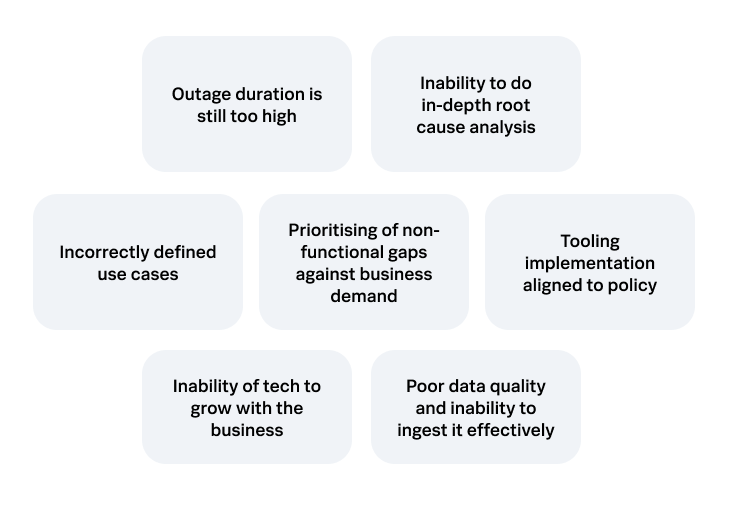

So why aren’t we moving more quickly towards better resilience measures? A major reason is an inability (or unwillingness), especially among leadership, to show how imperfectly we understand the factors that affect important services. Most improvement initiatives are based on incremental upgrade cycles, blanket changes or best-guess hypotheses rather than operational insight, which reflects a myopic focus on availability, data flows and incident handling.

This short-term approach is understandable, as there's lots of work remaining to provide consistent foundations. However, simply wrapping these initiatives in different 'treatment' boxes will do little to move us beyond an expensive, reactive stance, and moving beyond it is essential to making true progress towards better understanding.

No one has the resources to provide 100% quality coverage and improvements, so consistent prioritisation is critical. That prioritisation should be based on leading (not lagging) service-level measurements and assumptions, which are currently complete blind spots for the majority of organisations. These measurements can then be shaped, tested and improved to fit your service, organisation and customer needs.

We need to be relentless in showing how imperfectly perfect our services are, using more expansive and relevant measurements than just basic system performance. With new measures, we have a chance to focus on truly building resilient capacity rather than mitigating perpetual failure.
