Sequenced Event Templates via Risk-based Alerting

Sequenced event templates are pretty cool, but they were developed around the same time as Risk-based Alerting (RBA) in Splunk Enterprise Security, and they don’t have all the great context we can generate from the holistic picture that risk provides. Because sequenced event templates are deprecated in Splunk Enterprise Security 8.0, I want to provide guidance on how to implement their equivalent with RBA. There are two approaches we can use, and they do slightly different things.

A Similar Approach

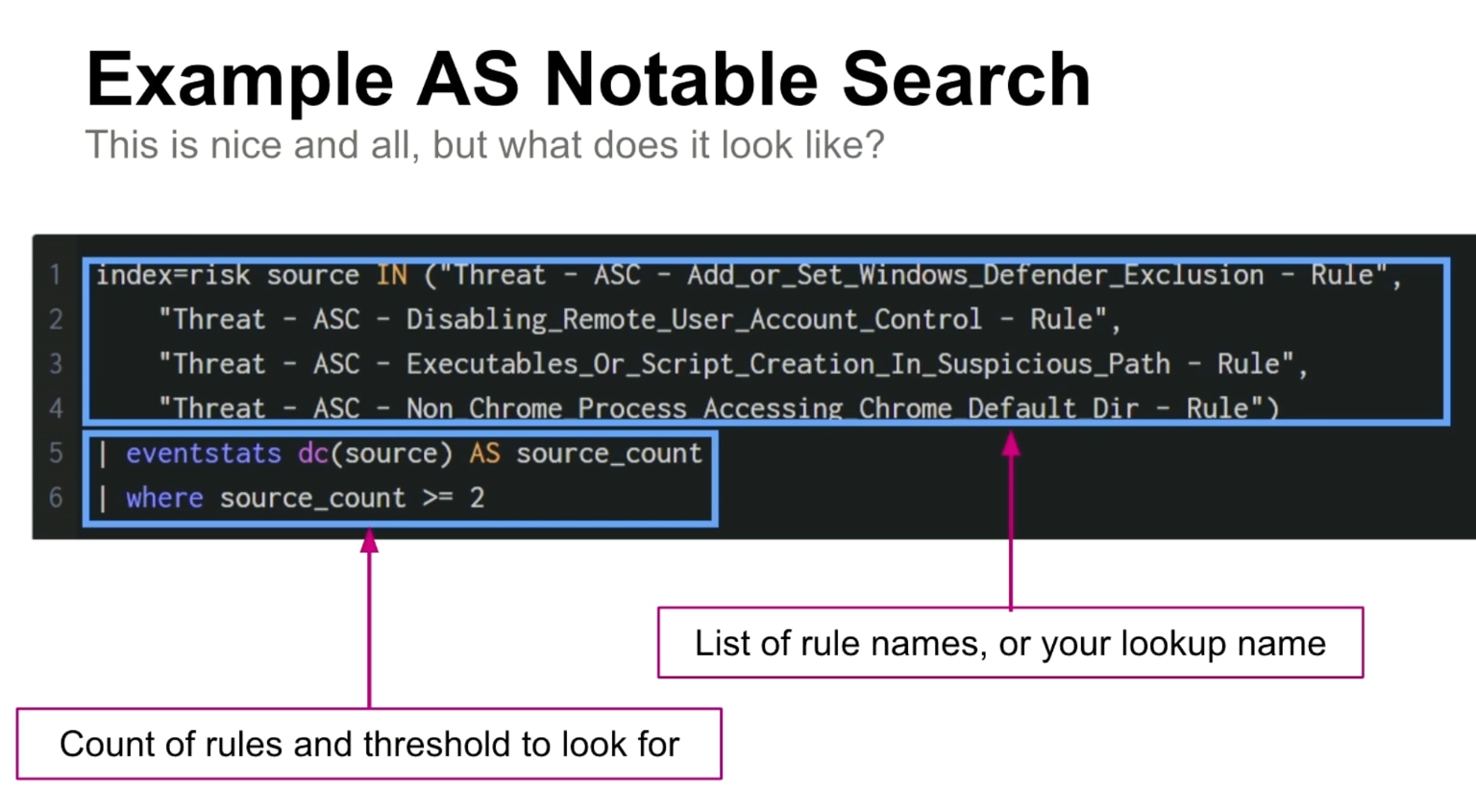

Ryan Moss pioneered this approach while working at Verizon and presented it at .conf23 in a talk called SEC1402A - Analytic Stories and RBA: Converting Those Notables From NM to OMG! Rather than rely on risk_score, Ryan found it easier to surface interesting events by simply alerting when one entity fired multiple detections from the same group of detections, or analytic story. So, if you had 30 detections in one analytic story, why not just create an alert when one entity has done 3 or more of those things in a time period?

I won’t cover all of that in this article, but here’s a screenshot from that talk to give you an idea of what he’s suggesting:

You can also look at the RBA GitHub for a similar rule that looks across sourcetypes to find when an entity is firing events from multiple security data sources, which can be a telltale sign of anomalous behavior.
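The core of Ryan’s idea is a distinct count of detections per entity within a time period. Here is a minimal Python sketch of that logic (a model, not Splunk code), assuming each risk event is a dict with `_time` (epoch seconds), `risk_object`, and `search_name`. The three-detection threshold and 24-hour window are illustrative defaults rather than values from the talk, and the detection names are hypothetical.

```python
from collections import defaultdict


def entities_over_threshold(events, story_detections, threshold=3, window=86400):
    """Return risk_object values that fired at least `threshold` distinct
    detections from one analytic story within any `window`-second span."""
    by_entity = defaultdict(list)
    for ev in events:
        if ev["search_name"] in story_detections:
            by_entity[ev["risk_object"]].append(ev)

    hits = set()
    for entity, evs in by_entity.items():
        evs.sort(key=lambda e: e["_time"])
        start = 0
        for end in range(len(evs)):
            # slide the left edge so the window spans at most `window` seconds
            while evs[end]["_time"] - evs[start]["_time"] > window:
                start += 1
            if len({e["search_name"] for e in evs[start:end + 1]}) >= threshold:
                hits.add(entity)
                break
    return hits


# hypothetical analytic story with three detections
story = {"Detection A", "Detection B", "Detection C"}
events = [
    {"_time": 0, "risk_object": "alice", "search_name": "Detection A"},
    {"_time": 100, "risk_object": "alice", "search_name": "Detection B"},
    {"_time": 200, "risk_object": "alice", "search_name": "Detection C"},
    {"_time": 0, "risk_object": "bob", "search_name": "Detection A"},
    {"_time": 100, "risk_object": "bob", "search_name": "Detection A"},
    {"_time": 200, "risk_object": "bob", "search_name": "Detection B"},
    # carol fires all three, but spread over more than a day each
    {"_time": 0, "risk_object": "carol", "search_name": "Detection A"},
    {"_time": 100000, "risk_object": "carol", "search_name": "Detection B"},
    {"_time": 200000, "risk_object": "carol", "search_name": "Detection C"},
]
```

Only alice crosses the default threshold here: bob repeats one detection, and carol’s detections never fall inside the same window.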

Chaining Behaviors

If we want to replicate exactly what sequenced events do, matching events that happen in a specific order, we will have to use more SPL.

This approach is from the RBA GitHub for Chaining Behaviors. Here is the SPL, using `sort 0` so that `sort` is not capped at its default of 10,000 results:

```
index=risk (search_name="Search1" OR search_name="Search2")
| sort 0 user _time
| dedup _time search_name user
| delta _time as gap
| autoregress search_name as prev_search
| autoregress user as prev_user
| where user = prev_user
| table _time gap src user search_name prev_search
| where search_name!=prev_search AND gap<600
```

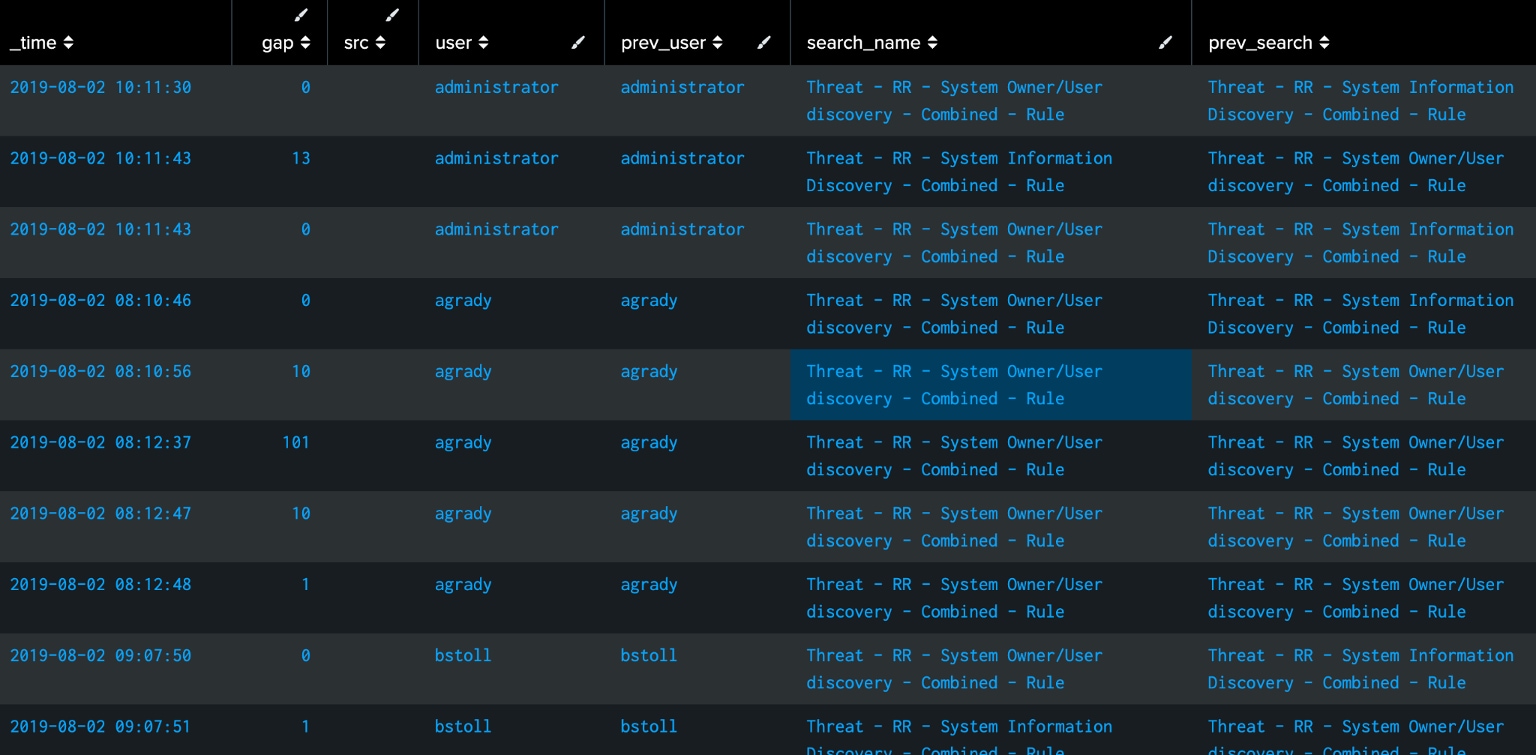

Let’s take this step by step. I want to create a rule that looks for two potential recon activities close to each other, which we already have firing in the risk index. Let’s table out the results so we can see what happens as we utilize autoregress.

```
index=risk sourcetype=stash search_name IN ("Threat - RR - System Owner/User discovery - Combined - Rule",
    "Threat - RR - System Information Discovery - Combined - Rule")
| sort 0 user _time
| dedup _time search_name user
| delta _time as gap
| table _time gap src user search_name
```

We are sorting by user and _time, but as soon as the next row belongs to a new user, the gap between the two events becomes something really wacky; in this case -7257 or 3302. We want to throw out events where the previous event belongs to a different user, because these aren’t actually related. With autoregress, we copy the value of a field from the preceding event into the current one:

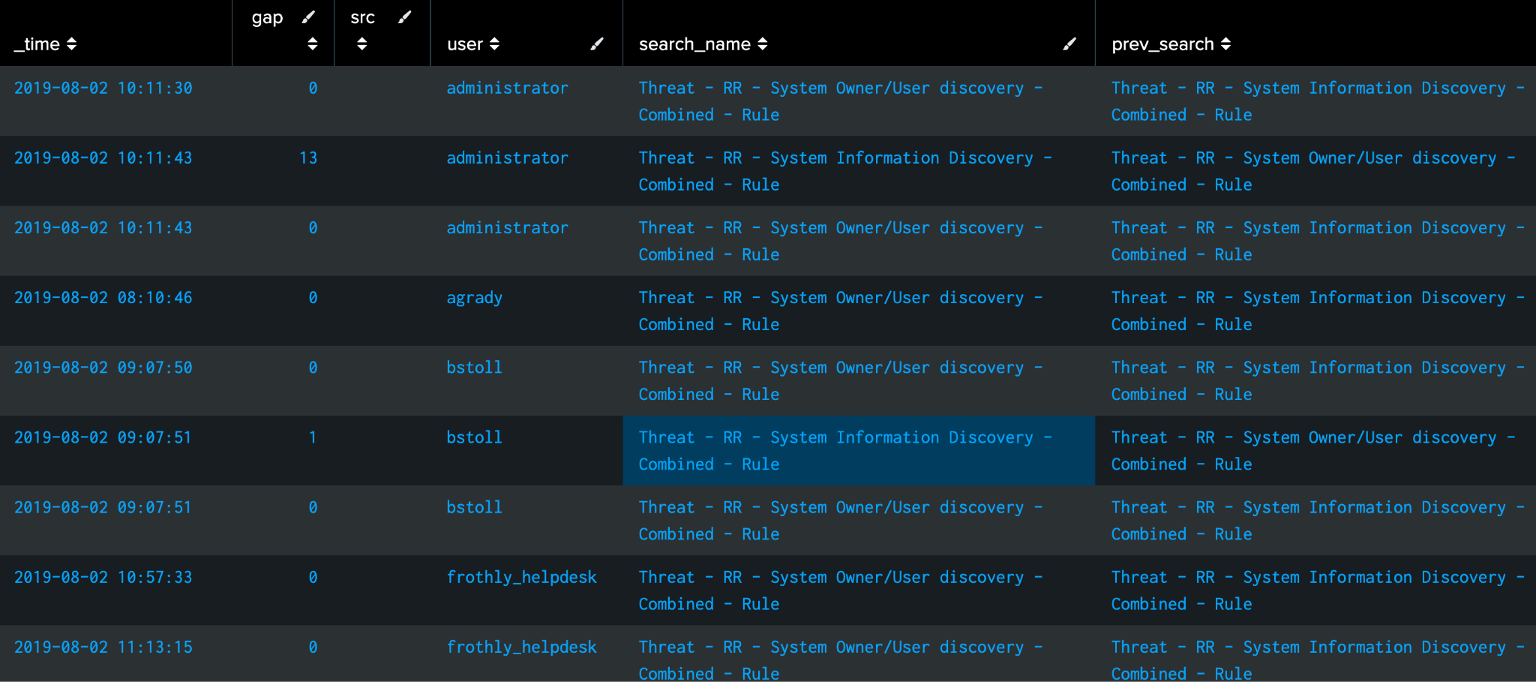

```
index=risk sourcetype=stash search_name IN ("Threat - RR - System Owner/User discovery - Combined - Rule",
    "Threat - RR - System Information Discovery - Combined - Rule")
| sort 0 user _time
| dedup _time search_name user
| delta _time as gap
| autoregress search_name as prev_search
| autoregress user as prev_user
| where user = prev_user
| table _time gap src user prev_user search_name prev_search
```
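To make the effect of `delta` and `autoregress` concrete, here is a small Python model of this pipeline. It is a sketch under simplifying assumptions: events are plain dicts with `_time` (epoch seconds), `user`, and `search_name`, the detection names are hypothetical, and it only mimics the row-to-row behavior of the SPL commands, not Splunk itself.

```python
def chain_with_previous(rows):
    """Python model of:
    sort 0 user _time | dedup _time search_name user | delta _time as gap
    | autoregress search_name as prev_search | autoregress user as prev_user
    | where user = prev_user
    """
    ordered, seen = [], set()
    for row in sorted(rows, key=lambda r: (r["user"], r["_time"])):
        key = (row["_time"], row["search_name"], row["user"])
        if key not in seen:  # dedup keeps the first occurrence
            seen.add(key)
            ordered.append(row)

    kept, prev = [], None
    for row in ordered:
        out = dict(row)
        if prev is not None:
            out["gap"] = row["_time"] - prev["_time"]  # delta _time as gap
            out["prev_search"] = prev["search_name"]   # autoregress search_name
            out["prev_user"] = prev["user"]            # autoregress user
        prev = row
        # where user = prev_user: drops the very first row and every row whose
        # predecessor is a different user, where the gap is meaningless
        if out.get("prev_user") == row["user"]:
            kept.append(out)
    return kept


rows = [
    {"_time": 50, "user": "alice", "search_name": "Owner"},
    {"_time": 80, "user": "alice", "search_name": "SysInfo"},
    {"_time": 80, "user": "alice", "search_name": "SysInfo"},  # duplicate, removed by dedup
    {"_time": 10, "user": "bob", "search_name": "Owner"},
    {"_time": 40, "user": "bob", "search_name": "SysInfo"},
]
result = chain_with_previous(rows)
```

Before the filter, bob’s first row would carry a gap of 10 - 80 = -70 seconds, the same kind of cross-user artifact as the -7257 above; the `user = prev_user` check removes it.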

We’ve thrown out the irrelevant events, so we can drop the prev_user field from the table since it’s done its job. Now we can use the prev_search field to find when events happen in sequence within a time range defined by the gap field. In this case we’ll use 600 seconds, or 10 minutes. We use search_name!=prev_search so we only see sequences where the two detections are different, but you may want to keep repeats of the same detection.

```
index=risk sourcetype=stash search_name IN ("Threat - RR - System Owner/User discovery - Combined - Rule",
    "Threat - RR - System Information Discovery - Combined - Rule")
| sort 0 user _time
| dedup _time search_name user
| delta _time as gap
| autoregress search_name as prev_search
| autoregress user as prev_user
| where user = prev_user
| table _time gap src user search_name prev_search
| where search_name!=prev_search AND gap<600
```

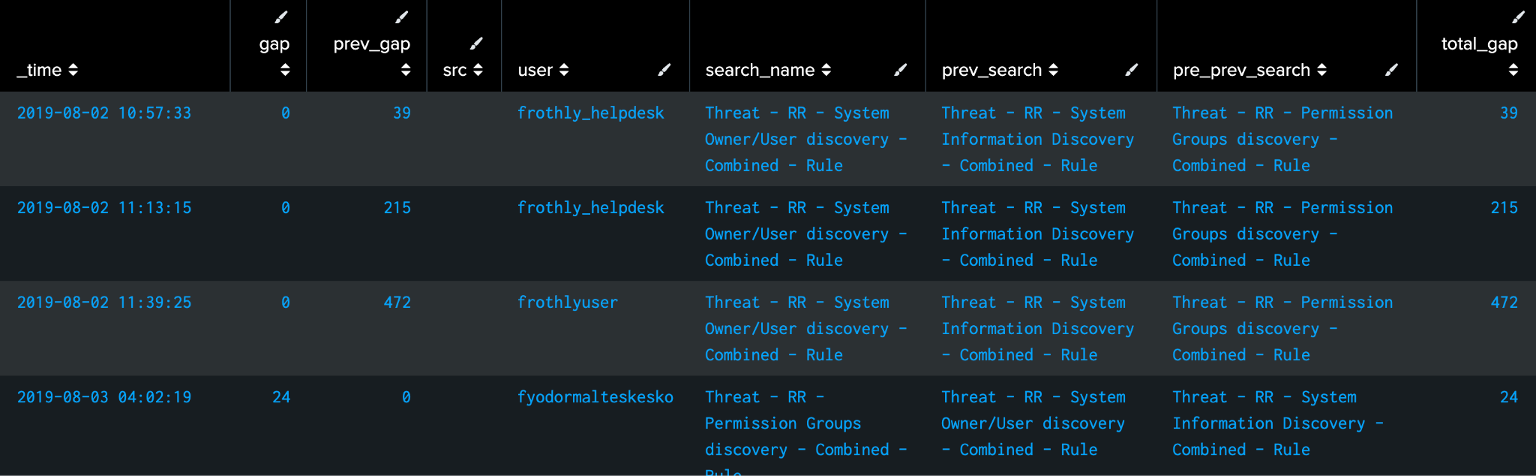
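The same two-rule filter can be modeled in Python by pairing each deduplicated row with its predecessor, which is the information `autoregress` exposes. This is a sketch, assuming dict events with `_time`, `user`, and `search_name` and hypothetical detection names; `max_gap=600` mirrors `gap<600`.

```python
def chained_pairs(rows, max_gap=600):
    """Python model of the two-rule chain: an event immediately follows a
    different detection for the same user, less than max_gap seconds later."""
    seen, ordered = set(), []
    for row in sorted(rows, key=lambda r: (r["user"], r["_time"])):
        key = (row["_time"], row["search_name"], row["user"])  # dedup _time search_name user
        if key not in seen:
            seen.add(key)
            ordered.append(row)

    hits = []
    # zip pairs each row with the row before it, like autoregress with p=1
    for prev, cur in zip(ordered, ordered[1:]):
        gap = cur["_time"] - prev["_time"]  # delta _time as gap
        if (cur["user"] == prev["user"]  # where user = prev_user
                and cur["search_name"] != prev["search_name"]
                and gap < max_gap):
            hits.append((cur["user"], prev["search_name"], cur["search_name"], gap))
    return hits


rows = [
    {"_time": 0, "user": "alice", "search_name": "Owner"},
    {"_time": 300, "user": "alice", "search_name": "SysInfo"},  # 5 minutes later: match
    {"_time": 0, "user": "bob", "search_name": "Owner"},
    {"_time": 900, "user": "bob", "search_name": "SysInfo"},    # 15 minutes later: too slow
    {"_time": 0, "user": "carol", "search_name": "Owner"},
    {"_time": 100, "user": "carol", "search_name": "Owner"},    # same detection twice
]
hits = chained_pairs(rows)
```

Raising `max_gap` to 1000 would also return bob’s pair, while carol’s repeat only appears if you drop the `!=` check.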

What happens when we want to have more than two rules, and make sure they fire in a certain order? We’ll just use more autoregress, keep track of the total_gap, confirm that all three events belong to the same user, and make sure we’re getting different events in sequence:

```
index=risk sourcetype=stash search_name IN ("Threat - RR - System Owner/User discovery - Combined - Rule",
    "Threat - RR - System Information Discovery - Combined - Rule",
    "Threat - RR - Permission Groups discovery - Combined - Rule")
| sort 0 user _time
| dedup _time search_name user
| delta _time as gap
| autoregress search_name as prev_search
| autoregress user as prev_user
| autoregress prev_search as pre_prev_search
| autoregress prev_user as pre_prev_user
| autoregress gap as prev_gap
| where user = prev_user AND user = pre_prev_user
| table _time gap prev_gap src user search_name prev_search pre_prev_search
| eval total_gap = gap+prev_gap
| where search_name!=prev_search AND search_name!=pre_prev_search AND prev_search!=pre_prev_search AND total_gap<600
```

Pretty cool. We could also throw as many searches as we like into the base search, and use this same logic to alert when any three of them fire in sequence. Or, we could re-create the ability of sequenced events to have specific “start” and “end” events by defining which search is in search_name and which is in pre_prev_search.
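Here is a Python sketch of both variations, a model rather than Splunk code, assuming dict events with `_time`, `user`, and `search_name` and hypothetical detection names. It checks that every event in the window belongs to the same user, because checking only the immediately preceding row would let the event two rows back come from a different user. Without `order`, the names only need to be distinct; with `order`, the consecutive events must match it exactly, so its first entry acts as the start event and its last as the end event.

```python
def sequence_hits(rows, length=3, max_total_gap=600, order=None):
    """Python model of the multi-rule chain: `length` consecutive, deduplicated
    events for one user spanning less than `max_total_gap` seconds."""
    if order is not None:
        length = len(order)
    seen, ordered = set(), []
    for row in sorted(rows, key=lambda r: (r["user"], r["_time"])):
        key = (row["_time"], row["search_name"], row["user"])  # dedup _time search_name user
        if key not in seen:
            seen.add(key)
            ordered.append(row)

    hits = []
    for i in range(len(ordered) - length + 1):
        window = ordered[i:i + length]
        names = [r["search_name"] for r in window]
        # every event in the window must share the user, not only the previous one
        same_user = all(r["user"] == window[-1]["user"] for r in window)
        total_gap = window[-1]["_time"] - window[0]["_time"]  # gap + prev_gap when length == 3
        if order is not None:
            names_ok = names == list(order)           # fixed start ... end order
        else:
            names_ok = len(set(names)) == length      # like the != checks in the SPL
        if same_user and names_ok and total_gap < max_total_gap:
            hits.append((window[-1]["user"], tuple(names), total_gap))
    return hits


rows = [
    {"_time": 0, "user": "alice", "search_name": "Owner"},
    {"_time": 100, "user": "alice", "search_name": "SysInfo"},
    {"_time": 200, "user": "alice", "search_name": "Perms"},
    {"_time": 0, "user": "bob", "search_name": "SysInfo"},
    {"_time": 100, "user": "bob", "search_name": "Owner"},
    {"_time": 200, "user": "bob", "search_name": "Perms"},
]
any_order = sequence_hits(rows)
start_to_end = sequence_hits(rows, order=("Owner", "SysInfo", "Perms"))
```

Both users fire three different detections within 10 minutes, but only alice fires them in the Owner, SysInfo, Perms order, so only she matches when `order` is set.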

Once you have things working, include additional fields like IPs, process_names, and cmdlines (which would be easier as threat_objects) so you can see more detail about each individual event when the new detection fires. Now you can use this sequenced detection as a direct alert or create a new risk event with a higher risk score than the separate detections would have by themselves. I found this really useful to create more value from detections that fired hundreds or thousands of times per day with a risk_score of zero.

Happy hunting!
