Measuring Hunting Success with PEAK

As organizations struggle to keep up with attackers in the cybersecurity arms race, continuous improvement is a strategic priority. An effective threat hunting program is one of the best ways to drive positive change across an organization’s entire security posture, but both hunters and their leaders often struggle to define what “effective” means for their programs.

The PEAK threat hunting framework provides a set of key metrics you can use as a starting point for measuring the impact that your hunting program has on your security program. It also incorporates the Hunting Maturity Model, which leaders can use to assess the current state of their hunting program and figure out how to get where they would like to be.

One of the most common ways to measure a hunt (and, by extension, the hunt program) is by the number of new incidents opened during a hunt. Though this is probably the most obvious measure, it’s not a strong indicator of success. Hunters don’t control adversary actions or timing, and just because the thing you hunted wasn’t happening when you looked for it doesn’t mean your hunt failed or that it wasn’t still useful.

Before we get into what the good metrics might be, let’s talk a little about PEAK’s approach to metrics.

(This article is part of our PEAK Threat Hunting Framework series. Explore the framework to unlock happy hunting!)

The PEAK Metrics Philosophy

In very broad terms, you have two choices when it comes to metrics. You can:

- Measure what you’ve done

- Measure the effects of what you’ve done

It’s the difference between saying, “We performed nine hunts this quarter,” and “We put twelve new automated detections into production this quarter that find cloud exfiltration activity that we previously missed.” Sure, the number of hunts you performed is interesting information, but it’s essentially just a measure of how hard your team worked. You should track this, too, but the purpose of hunting isn’t just to work hard.

The reason we hunt is to drive continuous security improvement; therefore, the number of new and updated detections you created is a much more relevant measure. It shows just how much you were able to strengthen your security posture as a result of hunting. PEAK’s key metrics are designed to help you measure improvements this way across several important facets of your organization’s security posture.

PEAK’s Key Metrics

PEAK’s metrics are all designed to highlight the effectiveness of both your individual hunts and your overall program, but they are by no means the only metrics you should collect. Rather, consider them a good starting point that any organization can benefit from. We expect (and actively encourage!) organizations to develop their own metrics as well.

There are five measures of security impact that every hunting program can benefit from tracking. Let’s talk about each (in no particular order).

The Number of Detections Created or Updated

As we discussed above, the number of new detections created due to your hunting efforts is one of the most useful ways to track improvements to automated detection. Add the number of existing detections you improved (e.g., by reducing false positives or extending coverage to edge cases), and you begin to tell the story of how you’re driving improvement to automated detection.
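To make this concrete, here is a minimal sketch of how a team might tally this metric from its own records. It assumes a hypothetical log with one row per detection change, tagged with the hunt that produced it; the `DetectionChange` record, its fields, and all IDs are illustrative, not part of PEAK. A detection tuned several times counts once, so the number reflects improved coverage rather than effort.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionChange:
    """One detection change attributed to a hunt (hypothetical schema)."""
    hunt_id: str       # which hunt produced the change
    detection_id: str  # name of the detection rule
    action: str        # "created" or "updated"


def detection_metrics(changes: list[DetectionChange]) -> dict[str, int]:
    """Count distinct detections created and updated.

    A detection tuned several times in the same period is counted once as
    "updated", so the metric reflects coverage rather than effort.
    """
    distinct = {(c.detection_id, c.action) for c in changes}
    counts = Counter(action for _, action in distinct)
    return {"created": counts["created"], "updated": counts["updated"]}


# Example quarter (all IDs are made up for illustration).
changes = [
    DetectionChange("H-001", "cloud_exfil_large_upload", "created"),
    DetectionChange("H-001", "cloud_exfil_new_bucket", "created"),
    DetectionChange("H-002", "encoded_powershell", "updated"),
    DetectionChange("H-003", "encoded_powershell", "updated"),  # tuned again
]
print(detection_metrics(changes))  # {'created': 2, 'updated': 1}
```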

The Number of Incidents Opened During or as a Result of the Hunt

We said earlier that simply tracking the number of new incidents opened during your hunts was not a great metric, and this is true. You may have a great hypothesis or a killer machine-learning approach to finding the bad guys, but if they weren’t active in your network while you were looking, you won’t find them.

Tracking the number of incidents opened during the hunt is useful, but an even better approach would be to track the number of new incidents opened after the hunt due to the detections you created or improved. This will require you to track which detections came from which hunts, but it will give you a clear view of how many security incidents you caught (and hopefully mitigated before they became breaches) that were due directly to your hunting efforts.
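Tracking which detections came from which hunts can be as simple as a lookup table. The sketch below assumes a hypothetical `detection_origin` mapping (detection ID to the hunt that created or improved it) and a hypothetical `Incident` record; neither is a PEAK or Splunk schema. It counts incidents per hunt, separating those opened during the hunt from those raised later by hunt-derived detections.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Incident:
    incident_id: str
    detection_id: Optional[str] = None        # detection that raised it, if any
    opened_during_hunt: Optional[str] = None  # hunt ID if a hunter opened it


def attribute_incidents(
    incidents: list[Incident], detection_origin: dict[str, str]
) -> tuple[Counter, Counter]:
    """Count incidents per hunt, split into 'during' and 'after' the hunt.

    detection_origin maps a detection ID to the hunt that created or improved it.
    """
    during: Counter = Counter()
    after: Counter = Counter()
    for inc in incidents:
        if inc.opened_during_hunt is not None:
            during[inc.opened_during_hunt] += 1
        elif inc.detection_id in detection_origin:
            after[detection_origin[inc.detection_id]] += 1
    return during, after


# Illustrative data only.
detection_origin = {"cloud_exfil_large_upload": "H-001", "encoded_powershell": "H-002"}
incidents = [
    Incident("INC-10", opened_during_hunt="H-001"),
    Incident("INC-11", detection_id="cloud_exfil_large_upload"),
    Incident("INC-12", detection_id="encoded_powershell"),
    Incident("INC-13", detection_id="legacy_av_alert"),  # not hunt-derived
]
during, after = attribute_incidents(incidents, detection_origin)
print(dict(during))  # {'H-001': 1}
print(dict(after))   # {'H-001': 1, 'H-002': 1}
```

Incidents raised by detections that never came out of a hunt (like `INC-13`) are deliberately left out, so the "after" count only credits hunting for what hunting actually produced.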

The Number of Gaps Identified and Gaps Closed

As you go about your hunting, you will inevitably notice that some things are missing. Maybe it’s a log source that you’re not collecting but would be perfect for detecting some crucial type of behavior. Perhaps you are collecting it, but some systems aren’t logging as they should. Or, possibly, the data sources are fine, but you can’t tell because there’s no baseline documentation to show what’s normal.

Gaps can occur in data visibility, access, documentation, or even the tooling required to make those things work. Identifying these gaps and either closing them or bringing them to the attention of the responsible parties is an important function of the hunt team.

Although the hunt team is probably not the team that’s responsible for closing most of the gaps, tracking both the number reported and the number eventually closed as a result of your reports is another great way to measure the impact you’re having.

The Number of Vulnerabilities & Misconfigurations Identified and the Number Closed

The more you start poking around in new areas, the more opportunities for improvement you’ll find. Many of these may be gaps of some sort that need to be closed, as mentioned above, but you will also frequently encounter misconfigurations, older software versions, or other situations that create actual vulnerabilities. Reporting these is critical, but so is tracking your reports and the vulnerabilities eventually remediated as a result of your reporting.
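Gaps, vulnerabilities, and misconfigurations all follow the same pattern: count what you reported, then count what was eventually closed. As a minimal sketch, assuming a hypothetical `Finding` record with a free-form `category` and a `closed` flag that someone updates when the responsible team remediates the issue, a per-category summary might look like this:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    finding_id: str
    category: str  # e.g. "gap", "vulnerability", "misconfiguration"
    closed: bool   # True once the responsible team has remediated it


def closure_summary(findings: list[Finding]) -> dict[str, dict[str, int]]:
    """Count findings identified and closed, per category."""
    summary: dict[str, dict[str, int]] = {}
    for f in findings:
        row = summary.setdefault(f.category, {"identified": 0, "closed": 0})
        row["identified"] += 1
        if f.closed:
            row["closed"] += 1
    return summary


# Illustrative findings from a quarter of hunting.
findings = [
    Finding("F-1", "gap", closed=True),            # log source not collected
    Finding("F-2", "gap", closed=False),           # no baseline documentation
    Finding("F-3", "vulnerability", closed=True),  # outdated software version
    Finding("F-4", "misconfiguration", closed=False),
]
for category, row in closure_summary(findings).items():
    print(f"{category}: {row['closed']}/{row['identified']} closed")
# gap: 1/2 closed
# vulnerability: 1/1 closed
# misconfiguration: 0/1 closed
```

Keeping "identified" and "closed" side by side shows both the hunt team's discovery work and the downstream remediation it triggered.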

Techniques Hunted by ATT&CK, Kill Chain, or Pyramid of Pain

Variety is the spice of both life and hunting programs. You’re hunting for different types of activity each time, so be sure to track what you’re hunting for. The simplest way to do this is just to count the number of unique techniques you’re looking for (“We hunted nine different adversary techniques this quarter”), but consider also mapping your hunts to a framework such as:

- MITRE ATT&CK

- The Lockheed Martin Cyber Kill Chain

- The Pyramid of Pain

For example, tracking your hunts against the Kill Chain can give you an idea of your potential impact because targeting activity closer to the beginning of the chain gives you more time to intervene before the adversary can complete their attack. Tracking against ATT&CK can help you identify and prioritize new hunt topics, though you should keep in mind that not everything in ATT&CK is intended to be turned into a detection (we dare you to send an alert every time PowerShell runs, for example).

Each represents a different way of looking at your hunt topics, so you may even want to measure against more than one.
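As an illustration, the sketch below counts unique ATT&CK techniques hunted and lists the Kill Chain phases that no hunt has touched yet. It assumes a hypothetical hunt log where the hunter records an ATT&CK technique ID and a Kill Chain phase for each hunt; the technique/phase pairings shown are illustrative, not an official ATT&CK-to-Kill-Chain mapping.

```python
from collections import Counter

# Lockheed Martin Cyber Kill Chain phases, earliest first.
KILL_CHAIN = [
    "Reconnaissance",
    "Weaponization",
    "Delivery",
    "Exploitation",
    "Installation",
    "Command and Control",
    "Actions on Objectives",
]

# Hypothetical hunt log: (hunt ID, ATT&CK technique ID, kill chain phase).
# The phase is whatever the hunter recorded; the pairing is illustrative.
HUNTS = [
    ("H-001", "T1567", "Actions on Objectives"),  # Exfiltration Over Web Service
    ("H-002", "T1071", "Command and Control"),    # Application Layer Protocol
    ("H-003", "T1566", "Delivery"),               # Phishing
    ("H-004", "T1567", "Actions on Objectives"),  # same technique, hunted again
]


def coverage(hunts):
    """Return unique techniques, techniques per phase, and uncovered phases."""
    pairs = {(technique, phase) for _, technique, phase in hunts}
    techniques = {technique for technique, _ in pairs}
    per_phase = Counter(phase for _, phase in pairs)
    uncovered = [p for p in KILL_CHAIN if p not in per_phase]
    return techniques, per_phase, uncovered


techniques, per_phase, uncovered = coverage(HUNTS)
print(f"Unique techniques hunted: {len(techniques)}")  # Unique techniques hunted: 3
print("Phases with no hunts:", ", ".join(uncovered))
```

Because the uncovered phases come out in Kill Chain order, the earliest untouched phases appear first, which lines up with the point above that hunting earlier in the chain leaves more time to intervene.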

Measuring Program Maturity

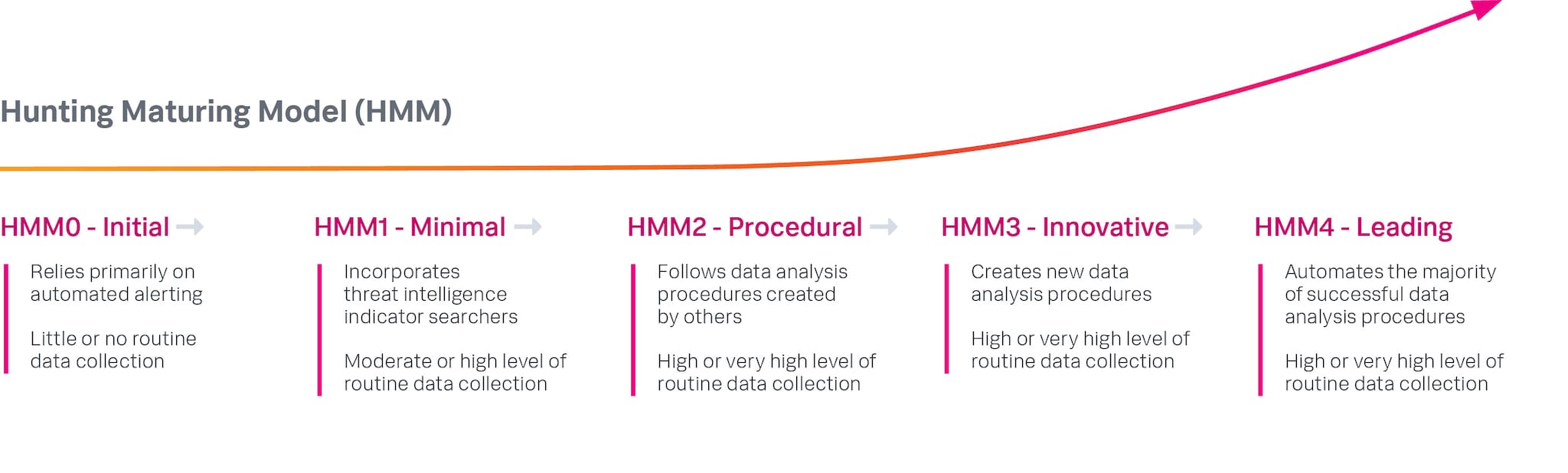

So far, we’ve looked at ways to measure the effects of our hunts, either individually or cumulatively. But what about measuring our program as a whole? That’s where the Hunting Maturity Model (HMM) comes in!

The Hunting Maturity Model, by David J. Bianco (2015)

First published in 2015, the HMM gives CISOs and other hunt leaders a simple way to measure the maturity of their threat hunting program. It considers three key measures of a threat hunting program (data collection, data access, and hunters’ analysis skills) and reduces these to a single maturity level, ranging from HMM0 to HMM4.

Not only is the HMM useful to gauge a program’s current state, but it also serves as a roadmap that shows how to get from where you are today to where you’d like to be. Choose your desired maturity level, note the differences between where you are now and where you’d like to be, and plan the improvements necessary to achieve the goal. Most hunting organizations should aim for at least HMM2 (high level of data collection, generally following hunts designed by others), though many will opt to go for HMM3 (creating novel hunt procedures, perhaps with PEAK’s Model-Assisted Threat Hunts, or M-ATH) or, ideally, HMM4 (turning the majority of their hunts into automated detections).
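The roadmap idea can be sketched in a few lines. The level names below (Initial, Minimal, Procedural, Innovative, Leading) are the ones used in Bianco’s published HMM; the `roadmap` helper is a hypothetical illustration that simply lists the levels to work through, in order. A real gap analysis would still compare your data collection, data access, and analysis skills against each level’s description.

```python
from enum import IntEnum


class HMM(IntEnum):
    """Hunting Maturity Model levels, HMM0 (lowest) to HMM4 (highest)."""
    INITIAL = 0
    MINIMAL = 1
    PROCEDURAL = 2
    INNOVATIVE = 3
    LEADING = 4


def roadmap(current: HMM, target: HMM) -> list[HMM]:
    """Return the levels to work through, in order, to reach the target."""
    if target <= current:
        return []  # already at or beyond the desired level
    return [HMM(level) for level in range(current + 1, target + 1)]


steps = roadmap(HMM.MINIMAL, HMM.INNOVATIVE)
print([f"HMM{s.value} ({s.name.title()})" for s in steps])
# ['HMM2 (Procedural)', 'HMM3 (Innovative)']
```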

Conclusion

Measuring the effectiveness of your threat hunting program can be challenging for both hunters and leaders. The PEAK threat hunting framework offers a starting point, with five key metrics designed to help you tell the story not of how hard your team worked but of how effective they were at improving your overall security posture.

PEAK also incorporates the Hunting Maturity Model to help assess your program’s current state and provide guidance on potential next steps for improvement. Organizations can use these metrics and the maturity model to help measure, tune, and improve their ability to keep ahead of adversaries in the cybersecurity arms race.


About Splunk

The world’s leading organizations rely on Splunk, a Cisco company, to continuously strengthen digital resilience with our unified security and observability platform, powered by industry-leading AI.

Our customers trust Splunk’s award-winning security and observability solutions to secure and improve the reliability of their complex digital environments, at any scale.