Exploring AI for Vulnerability Investigation and Prioritisation

The sheer volume of cybersecurity vulnerabilities is overwhelming. In 2024, there were 39,998 CVEs — an average of 109.28 per day! This constant stream of new threats makes it increasingly difficult for security teams to keep up. Large Language Models (LLMs) offer a possible solution, helping automate vulnerability investigation and prioritisation, allowing teams to more efficiently assess and respond to emerging risks.

Do you even have time to skim over 109 CVEs a day?

As discussed in our previous post “Vulnerability Prioritisation Is a Treat for Defenders,” it’s critical to prioritise effectively rather than attempting to chase every vulnerability:

The importance of vulnerability prioritisation for small and medium-sized businesses (SMBs) and large enterprise networks cannot be overstated. Whether you're a small organization with limited IT resources or a vast enterprise managing complex systems, understanding which vulnerabilities to tackle first can make all the difference in safeguarding your infrastructure from costly breaches.

AI to the Rescue?

AI security tools are everywhere, but many focus on offense—automating attacks, finding exploits, and aiding red teams. So why not create an LLM tool designed specifically for defenders? Such a tool could filter the noise and prioritize vulnerabilities using real-world context from platforms like Splunk. By leveraging the data you’ve already got in Splunk, you can identify which assets are exposed, actively targeted, or genuinely impactful to your environment. Defenders could move beyond endless CVE lists and abstract risk scores, focusing instead on vulnerabilities that truly matter.

Visibility Is Tough

Achieving good visibility remains challenging, largely due to poorly maintained Configuration Management Databases (CMDBs). A CMDB is essentially a central repository that stores detailed information about all the hardware and software assets within an organization, along with their configurations, relationships, and dependencies. In vulnerability prioritization, an accurate CMDB plays a critical role by helping security teams quickly identify affected systems, understand their importance to business operations, and determine potential impacts of vulnerabilities. Unfortunately, outdated or incomplete CMDBs mean operating with incomplete insights, making it difficult to know what's truly at risk or needs immediate attention. Accurate, contextual data is crucial, underscoring the importance of better CMDB practices.

How Did I Set This Up?

To test whether an LLM could practically address these challenges, I developed a straightforward proof-of-concept. Here's how it came together.

- Scraped the NIST NVD API using Pydantic for validation.

- Summarised CVE data using an LLM.

- Queried Splunk (with the Asset and Risk Intelligence platform installed) for context, identifying what's in our environment to refine risk assessments.

- Parsed CVE reference links, summarising key details to distill essential context.

- Leveraged the LLM to translate CVE/CVSS scores into clear, understandable summaries.
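The first step in the list above, validating NVD records before passing them on, can be sketched roughly as follows. This is a minimal illustration, not the code from the proof-of-concept: it uses standard-library dataclasses as a stand-in for Pydantic so it runs without dependencies, and the names `CveRecord` and `parse_cve` are hypothetical. The field names (`vulnerabilities[].cve.id`, `descriptions`, `references`) follow the NVD CVE API 2.0 JSON layout; the sample description and URL are placeholders, not real NVD data.

```python
from dataclasses import dataclass, field


@dataclass
class CveRecord:
    """Subset of one NVD CVE entry that the later steps need (hypothetical model)."""

    cve_id: str
    description: str
    reference_urls: list[str] = field(default_factory=list)


def parse_cve(item: dict) -> CveRecord:
    """Validate one element of the NVD `vulnerabilities` array."""
    cve = item["cve"]  # a KeyError here means the payload is malformed
    cve_id = cve["id"]
    if not isinstance(cve_id, str) or not cve_id.startswith("CVE-"):
        raise ValueError(f"unexpected CVE id: {cve_id!r}")
    # Keep only the English description; entries may carry other languages.
    description = next(
        (d["value"] for d in cve.get("descriptions", []) if d.get("lang") == "en"),
        "",
    )
    # Ignore reference entries that have no URL.
    urls = [r["url"] for r in cve.get("references", []) if "url" in r]
    return CveRecord(cve_id, description, urls)


# Placeholder payload shaped like an NVD API 2.0 item (not real NVD content).
sample = {
    "cve": {
        "id": "CVE-2025-0411",
        "descriptions": [{"lang": "en", "value": "placeholder description"}],
        "references": [{"url": "https://example.com/advisory"}],
    }
}
record = parse_cve(sample)
print(record.cve_id, len(record.reference_urls))
```

With Pydantic, the same shape would be declared as a model class and the type checks would come from the library rather than hand-written conditions.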

I prioritised clarity and actionable context over verbose descriptions and repetitive information when designing the prompts.

DIY or Outsource the LLM?

Initially, I tried running local open-source LLMs for control and cost reduction but quickly pivoted to OpenAI's GPT-4o and GPT-4o-mini models. Caching responses, by writing each one to disk after its first use, significantly cut latency and improved efficiency during testing. Even extensive querying cost only a few cents a day, proving far more practical and affordable than maintaining self-hosted GPU setups. Even at ~100 CVEs a day, summarising all the referenced posts, I’d expect you’d be hard-pressed to spend more than a dollar or two, which is far cheaper than paying a human to do the same job!
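The write-to-disk caching described above might look something like this. It is a sketch under assumptions: the function name `cached_completion`, the cache layout (one JSON file per model and prompt, named by a SHA-256 hash), and the injected `call_llm` callable are all illustrative, and the real LLM client call is deliberately left out.

```python
import hashlib
import json
from pathlib import Path
from typing import Callable


def cached_completion(
    prompt: str,
    model: str,
    call_llm: Callable[[str, str], str],
    cache_dir: Path,
) -> str:
    """Return the cached response for (model, prompt), calling the LLM on a miss."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    # Key on model and prompt together so a model change is a cache miss.
    key = hashlib.sha256(f"{model}\n{prompt}".encode("utf-8")).hexdigest()
    path = cache_dir / f"{key}.json"
    if path.exists():
        return json.loads(path.read_text(encoding="utf-8"))["response"]
    response = call_llm(model, prompt)  # hypothetical wrapper around the API client
    payload = {"model": model, "prompt": prompt, "response": response}
    path.write_text(json.dumps(payload), encoding="utf-8")
    return response
```

Storing the prompt next to the response keeps the cache inspectable, which helps when comparing outputs across prompt iterations.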

So, Did It Actually Help?

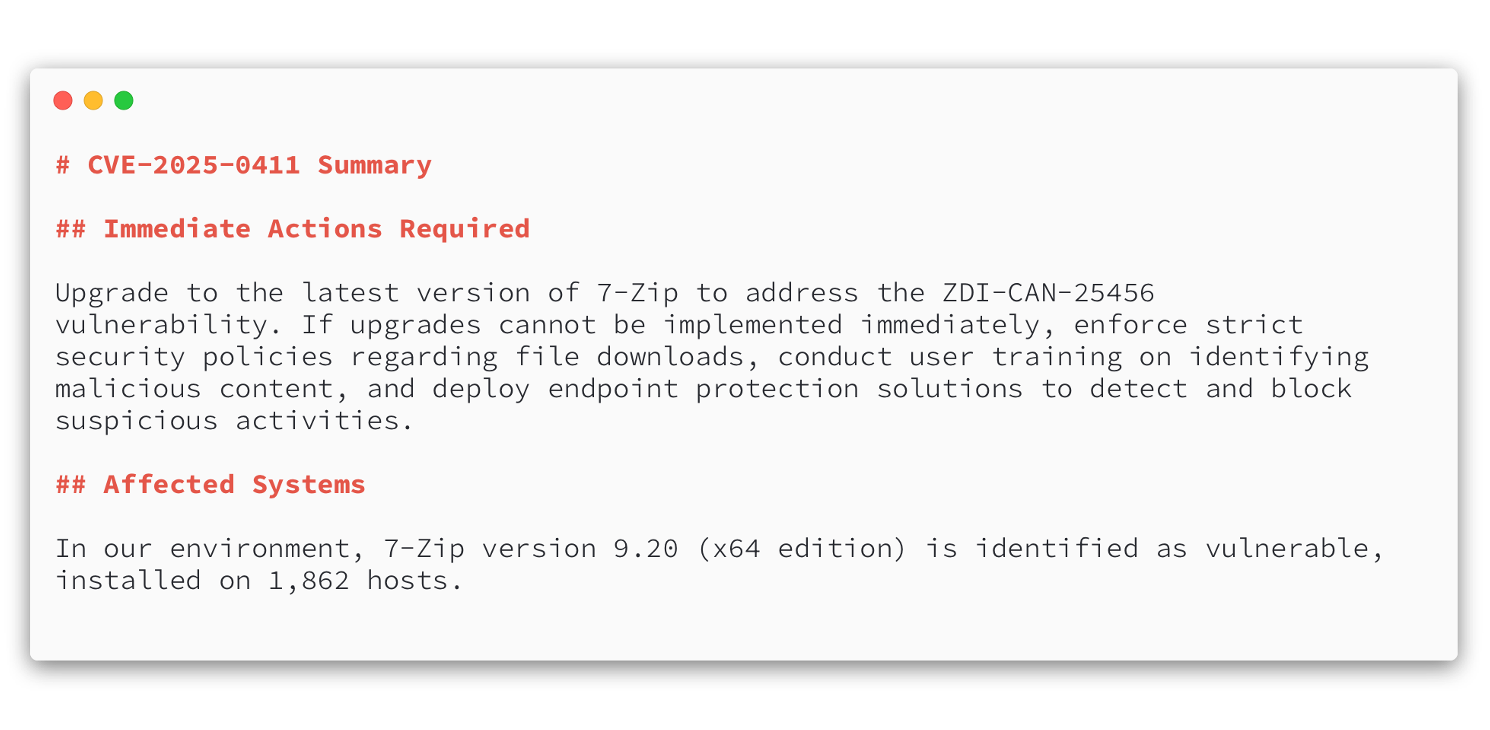

Overall, the approach was pretty effective. The LLM-generated summaries were significantly easier to digest than going through each CVE and its multiple references. Pulling contextual data from Splunk greatly enhanced the relevance of the assessments. Moreover, the LLM provided useful suggestions when it was unclear whether we had the affected products in our environment, helping to broaden our searches and reducing the uncertainty typically involved in vulnerability investigations.

An example of the output for CVE-2025-0411.

Prompt Engineering: Finding the Write Stuff

Crafting effective prompts took a bit of trial and error, but once dialed in, the LLM responses significantly improved. Initial prompts often lacked enough detail or were too broad, resulting in generic outputs. After tuning prompts to clearly specify context and intent, the LLM produced much more relevant, actionable summaries. Iterating on prompts and consistently testing outputs proved critical to achieving practical, context-aware results.

Hallucinations—where an LLM invents or overlooks details—are a common concern, but in testing multiple CVEs, I didn't observe the model incorrectly identifying or ignoring affected hosts. Explicitly prompting the LLM with clear context about our environment seemed effective at preventing this issue.
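As an illustration of what "explicit context" can mean in practice, here is a hedged sketch of a prompt builder. The wording is invented for this example and is not the prompt used in the proof-of-concept; `build_prompt` and its parameters are hypothetical names, and the host list stands in for whatever the Splunk lookup returned.

```python
def build_prompt(cve_id: str, description: str, matched_hosts: list[str]) -> str:
    """Assemble a prompt that states the environment context explicitly."""
    if matched_hosts:
        hosts = "\n".join(f"- {h}" for h in matched_hosts)
        env = f"Hosts in our environment running the affected product:\n{hosts}"
    else:
        # Say so plainly rather than leaving the model to guess.
        env = "No hosts in our environment were matched to the affected product."
    return (
        "You are assisting a defender with vulnerability triage.\n"
        f"CVE: {cve_id}\n"
        f"Description: {description}\n"
        f"{env}\n"
        "Summarise the risk in plain language. Refer only to the hosts listed "
        "above; do not name any other hosts. If no hosts were matched, suggest "
        "what else to search for."
    )


print(build_prompt("CVE-2025-0411", "placeholder description", ["host-a", "host-b"]))
```

Listing the matched hosts verbatim, and stating outright when there are none, gives the model less room to fill gaps on its own.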

What Didn't Go Well?

Scraping Websites Is Still a Pain

Automating content scraping from referenced websites remains a challenge. Websites vary widely in structure, enforce rate limits, and implement anti-bot measures. JavaScript-dependent pages required headless browsers, adding complexity. While functional, there's ample room to improve handling inconsistencies and edge cases. I allowed it to skip many references that were unparseable or actively blocked scraping, acknowledging the impracticality of capturing everything.
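The "skip what can't be parsed" policy can be sketched as below. This is an assumption-laden illustration rather than the actual scraper: it uses only the standard library's `html.parser`, takes the fetch function as a parameter (so a plain HTTP client or a headless browser could be plugged in), and treats pages with very little visible text as unusable. The names `collect_reference_text` and `min_chars`, and the 20-character threshold, are hypothetical.

```python
from html.parser import HTMLParser
from typing import Callable


class _TextExtractor(HTMLParser):
    """Collect visible text, ignoring <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def collect_reference_text(urls, fetch: Callable[[str], str], min_chars: int = 20):
    """Return ({url: text}, skipped_urls) for a list of reference links."""
    texts, skipped = {}, []
    for url in urls:
        try:
            parser = _TextExtractor()
            parser.feed(fetch(url))
            text = " ".join(parser.chunks)
        except Exception:  # blocked, timed out, rate limited, and so on
            skipped.append(url)
            continue
        if len(text) < min_chars:  # likely a JavaScript shell or an empty page
            skipped.append(url)
        else:
            texts[url] = text
    return texts, skipped
```

Returning the skipped URLs alongside the extracted text makes it easy to see how much reference material was left out of a given summary.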

NIST NVD, CVEs and Me

I found scraping the NVD REST API practical, though it occasionally ran slowly or unreliably. The Python requests library, combined with retry mechanisms using exponential backoff, ensured effective data retrieval. Despite occasional API hiccups, persistence made it a reliable approach for automation.
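A retry loop with exponential backoff, as mentioned above, could be sketched like this. It is a generic wrapper rather than the project's code: the request itself is passed in as a callable so the example stays dependency-free, and the name `with_backoff`, the default of five attempts, and the one-second base delay are assumptions.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_backoff(
    fetch: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fetch(), retrying on any exception with doubling delays."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)  # base_delay, then 2x, 4x, ...
    raise ValueError("max_attempts must be at least 1")
```

With the requests library, the callable might be something like `lambda: requests.get(url, timeout=30).json()`, with `raise_for_status()` called first if HTTP error codes should also trigger a retry.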

GitHub and OSINT

I explored GitHub as an additional reference source for vulnerability insights, but older references often added unnecessary noise without useful context, while newer entries frequently lacked detail or duplicated existing information in the references. Similarly, OSINT platforms like Mastodon or Twitter mostly reposted or misinterpreted existing references. Unless continuously monitored at scale, these sources offered limited practical value.

Graph Databases: Nice Visuals, Limited Value

I also experimented with ingesting the data into a graph database, hoping the relationships might provide additional context. Unfortunately, that didn't add much practical value - apart from confirming something most defenders already suspect: vulnerabilities tend to cluster around the same CWEs or underlying issues repeatedly, no matter which vendor or software is involved. While interesting to visualize, it didn't make prioritization decisions any clearer or simpler, so it wasn't particularly helpful in refining the approach.

Just a sampling of the vulnerabilities that suffer from Race Conditions (CWE-362)
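The clustering observation does not need a graph database to reproduce. As a rough sketch, grouping CVE identifiers by the CWEs they reference gives the same picture; the function name `cluster_by_cwe` and the input shape are assumptions, and the CVE identifiers below are made-up placeholders rather than real entries.

```python
from collections import defaultdict


def cluster_by_cwe(records):
    """Map each CWE id to the sorted list of CVE ids that reference it."""
    clusters = defaultdict(list)
    for cve_id, cwe_ids in records:
        for cwe in cwe_ids:
            clusters[cwe].append(cve_id)
    return {cwe: sorted(ids) for cwe, ids in clusters.items()}


# Placeholder identifiers for illustration only.
records = [
    ("CVE-EXAMPLE-0001", ["CWE-362"]),
    ("CVE-EXAMPLE-0002", ["CWE-362", "CWE-416"]),
    ("CVE-EXAMPLE-0003", ["CWE-79"]),
]
for cwe, cves in sorted(cluster_by_cwe(records).items()):
    print(cwe, cves)
```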

Conclusion

This proof-of-concept demonstrated the potential for LLMs to save some time and effort in vulnerability investigations by summarising complex reference information and highlighting critical threats clearly and quickly. Although integrating context from datasets and websites presents ongoing challenges, the results show that it's feasible to leverage AI-driven summaries to focus efforts more effectively, and explicit prompting helps to avoid errors. With continued refinement, such an approach can meaningfully enhance vulnerability prioritisation, but for now it’s a relatively simple low-maintenance approach, with minimal expenditure on third-party LLM services.

If you're interested in exploring the code behind this project, check back soon; we'll be sharing the details here.

As always, security at Splunk is a team effort. Credit to authors and collaborators: James Hodgkinson, Ryan Fetterman, David Bianco, Marcus LaFerrera.
