Exploratory Data Analysis for Anomaly Detection

Many of you might have seen our blogs about different techniques for identifying anomalies. Perhaps you even have a handful of analytics running in Splunk to identify anomalies in your data using statistical models, using probability density functions or even using clustering.

With great choice comes great responsibility, however, and one of the most frequent questions I encounter when speaking to customers about anomaly detection is how do I choose the best approach for identifying anomalies in my data?

The simplest answer to this question is one of the dark arts of data science: Exploratory Data Analysis (EDA). EDA is a wonderful catch-all term for the wide variety of analysis you can perform to figure out what comprises your data and what patterns exist within it. While the spectrum of EDA is broad - and we cover some approaches in our MLTK documentation - I thought I would walk through a few simple techniques that can be used to understand how to prepare your data for anomaly detection.

Essentially, we are going to take you through two examples of how to profile your data here using time series analysis and using histograms to understand how the data is distributed.

Time series analysis

Broadly there are a couple of ways of detecting anomalies in Splunk, either:

- Determining thresholds based on historic data that apply uniformly to your data or

- Determining adaptive thresholds from historic data that apply at different times or for different sources to your data

It may sound simple, but the easiest way to identify which approach to use is to plot your data over time and see if you can spot any obvious signs that one approach may be better than the other.

If we look at an example dataset that ships with the MLTK – the bitcoin_transaction.csv data – and plot the value over time it is clear that this data is better for using uniform thresholds. The rationale for this is that the profile is pretty flat (with a few obvious outliers), which tells us that the value of the transactions isn’t dependent on any particular time of day or day of week, i.e. there isn’t a periodic nature to this data. Here we could simply calculate the average and standard deviation from our historic data, and set a threshold based on these statistics to find anomalies.

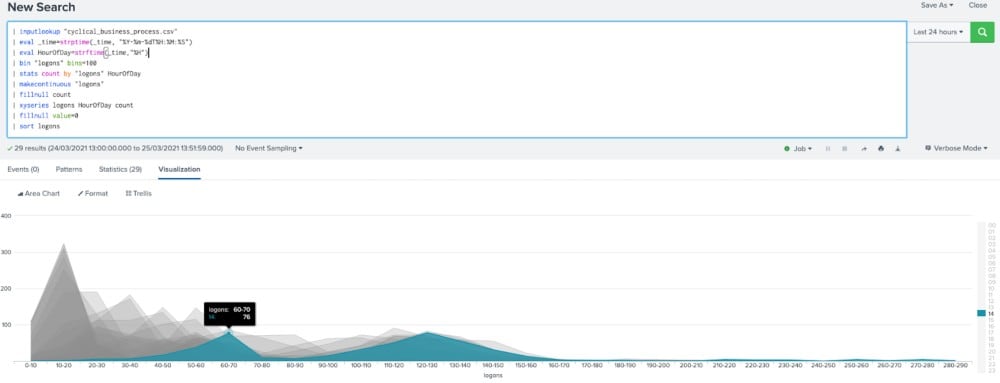

Taking another example, the cyclical_business_process.csv data, plotting this data over time there is a clear periodic pattern – with certain times of day and certain days of the week having more or less activity than others. This dataset would be much better suited to an ‘adaptive thresholding’ technique where baseline ranges can be calculated to match certain periods of time, rather than trying to model all times of day as the same.

Histograms

Now that we have identified a periodic dataset we want to try and identify the best way of splitting our data so that the underlying behaviour will be best captured by an anomaly detection algorithm. A simple way of doing this is to use a histogram to understand how our data is distributed.

Thankfully the MLTK comes with a histogram macro, and we have used this macro below to view the distribution of our logon data in the cyclical_business_process.csv dataset. The chart backs up our conclusions that the data can’t be treated uniformly as there are three peaks in the data. Peaks in a histogram are commonly referred to as a mode, which describes a value that occurs frequently in a dataset.

The next step in understanding this particular data is to break it down. From looking at the time series chart above it appears that the hour of the day seems quite important, so we will start by breaking our data into hourly intervals then generate a histogram profile for each hour. This is often referred to as quantization, which can apply to breaking other data types as well as time intervals. While for our data this shows that some hours behave in a similar fashion, there are also times of data that still have a couple of modes – as shown in the chart below, with 2 pm having two distinct peaks. Note that command+shift+e or ctrl+shift+e can be used to expand the histogram macro into a full Splunk search, which we have done to form the base of the search below.

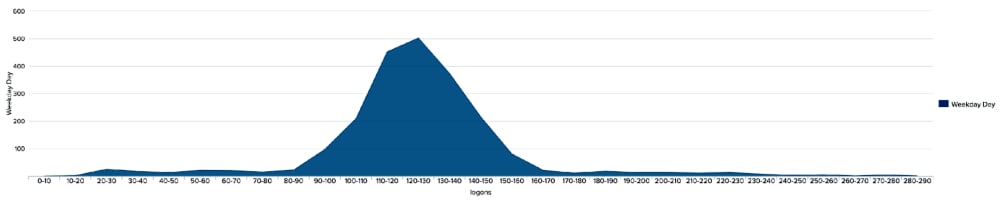

By breaking this down even further and focussing purely on the weekdays we can now see that each hour of the day has a nice distribution centred around a single point.

Maybe we have split our data down to finely, however, as many hours of the day appear to have very similar behaviour. We can add in a case statement to calculate a period now by visually examining each of the hourly histograms, and it is this period that we will use to determine the thresholds for detecting anomalies. Essentially after originally breaking our data down into hourly intervals we are now selecting more specific time periods by combining hours with similar behaviour together.

It is worth mentioning that although we have used the time field to decide how to split our data up here you do not need to focus on time alone. For example, you might find that for certain datasets host, source or user are better suited for splitting data than the time (or perhaps even a combination of a few of these).

What next?

So now we have identified the best way of splitting the data – if required – using our analysis with histograms and time series analysis. The next question is which anomaly detection technique should we apply to the data?

Here I use a simple rule of thumb: if the data is nicely distributed fitting a bell curve-like normal distribution, then using statistics is a good approach. The weekday day histogram we calculated above fits this pattern nicely, with an even spread of data on either side of the peak.

For data that shows some kind of skew to one side than using the DensityFunction algorithm in the MLTK is a better approach. Our Night period histogram is a good fit for this approach – being quite closely centred to zero and having a long tail running out into the hundreds.

If in doubt the DensityFunction also supports data that is normally distributed – so can be a good choice to use either way!

Hopefully, this has been a useful article to guide you through your own exploratory data analysis, and you will be up and running with some anomaly detection searches in no time!

Happy Splunking.

Greg

Related Articles

About Splunk

The world’s leading organizations rely on Splunk, a Cisco company, to continuously strengthen digital resilience with our unified security and observability platform, powered by industry-leading AI.

Our customers trust Splunk’s award-winning security and observability solutions to secure and improve the reliability of their complex digital environments, at any scale.