Splunk’s Ingest Processor - Why It Is A Game Changer For Managing Log Data In The World Of Observability

Logs and other supporting data, like metrics and traces, have become one of the most important elements of observability in recent years. Harnessing this data has enabled teams to significantly speed up problem resolution, by quickly understanding the root cause of issues, and to understand why a system behaves the way it does. It can be used for business analytics too, answering questions ranging from “why do our customers leave at certain points in the journey?” through to “are the marketing events we are running successful?” As observability data is critical to managing modern platforms, the ability to manage that data quickly, easily and cost-effectively is key to deriving maximum value from it. I go into more depth on why log data is important, and on the key challenges of harnessing it, in my blog here.

Since I wrote that blog, Splunk has released its new Ingest Processor, which makes it even easier to manage this log data. But before we get into why this is an important piece of tech, let’s recap the challenges of harnessing log data in general and how Splunk helps solve them:

Why Is Collecting Log Data A Challenge?

- The data - the data in these environments is vast and varied, with no agreed standard for how it should be stored. A simple IP address might appear under many different field names, e.g. src_ip, source or source_ip, across different data sets. As the data is typically unstructured, there are few structural clues as to what it means; for example, values could be comma- or space-separated. Equally, the lack of structure means there are no constraints on what a developer might choose to express in their log data. The challenge is finding a way to extract meaning and context from that mass of data effectively, without having to worry about schemas or data relationships up front.

- Collection - given the vast volume and variety of data sets, organisations typically deploy multiple tools and agents to try to collect this data, and each tool has its own approach to collection. As a result, only the data that a tool deems important, or can cope with, gets collected. This significantly reduces the visibility the data can provide, and what is collected stays separate within each tool, making it siloed and difficult to harness. The full value of the data is therefore never realised.

- Correlation - this is extremely hard when the collected data is stored across multiple tools and locations. How do you correlate these vast data sets or establish relationships between them and, of course, do this at scale and at speed? Not to mention the valuable time and people wasted in trying to do it all manually.

- Storage - where and how the data is stored can be costly, particularly when multiple tools, locations and methods are involved. You may also be storing the same data more than once, further increasing the cost. Furthermore, how do you protect that data against security threats in a common, easy-to-manage way?

- Performance - the speed of onboarding, processing and analysing data is a big challenge, particularly at enterprise scale.

These challenges make it too difficult and too time-consuming to harness this data and use the valuable insights it contains. The result is that issues take longer to identify, troubleshoot and fix, because the correct data isn’t available to the right teams.
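To make the first challenge concrete, here is a minimal Python sketch, assuming three hypothetical log lines in which the same IP address appears as src_ip, source and source_ip, with a mix of comma and space separators. It shows the kind of alias mapping someone has to maintain when there is no agreed standard; the alias table and the helper functions are illustrative only, not part of any Splunk product.

```python
import re

# Hypothetical alias table: different sources name the same concept differently.
FIELD_ALIASES = {
    "src_ip": "src_ip",
    "source": "src_ip",
    "source_ip": "src_ip",
}


def parse_kv(line: str) -> dict[str, str]:
    """Parse key=value pairs separated by commas and/or whitespace."""
    fields = {}
    for token in re.split(r"[,\s]+", line.strip()):
        if "=" in token:
            key, value = token.split("=", 1)
            fields[key] = value
    return fields


def normalise(fields: dict[str, str]) -> dict[str, str]:
    """Rename any known alias to its canonical field name; unknown keys pass through."""
    return {FIELD_ALIASES.get(key, key): value for key, value in fields.items()}


raw_logs = [
    "src_ip=10.0.0.5,action=login",     # comma-separated
    "source=10.0.0.5 action=logout",    # space-separated
    "source_ip=10.0.0.7, action=login", # comma plus space
]

for line in raw_logs:
    print(normalise(parse_kv(line)))
```

The alias table is the fragile part: every new source with a new naming convention needs another entry, which is exactly the maintenance burden described above.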

Splunk's Data Platform Solves This Problem

Splunk has over two decades of experience in harnessing the power of log data, from observability, security and business perspectives, with its award-winning data platform. The following principles explain why:

1. Schema-at-read - at the lowest level, Splunk has built a platform that does not require you to understand the format of the data before ingestion, because fields are extracted when you search rather than being fixed when the data is stored. Simply onboard the data into Splunk and start gaining insights from it.

2. Search Processing Language (SPL) - SPL allows you to quickly search and interrogate the data, build out visualisations, use the Machine Learning Toolkit to spot anomalies and forecast future trends in that data, and much, much more.

3. Correlation - one of the biggest challenges of harnessing data from these platforms is correlating different data sets, for example finding the same user ID in multiple data sets to build a single journey. In Splunk this is easy: thanks to the schema-at-read approach, the correlation is done quickly at search time using SPL, combining multiple data sets in a single search.

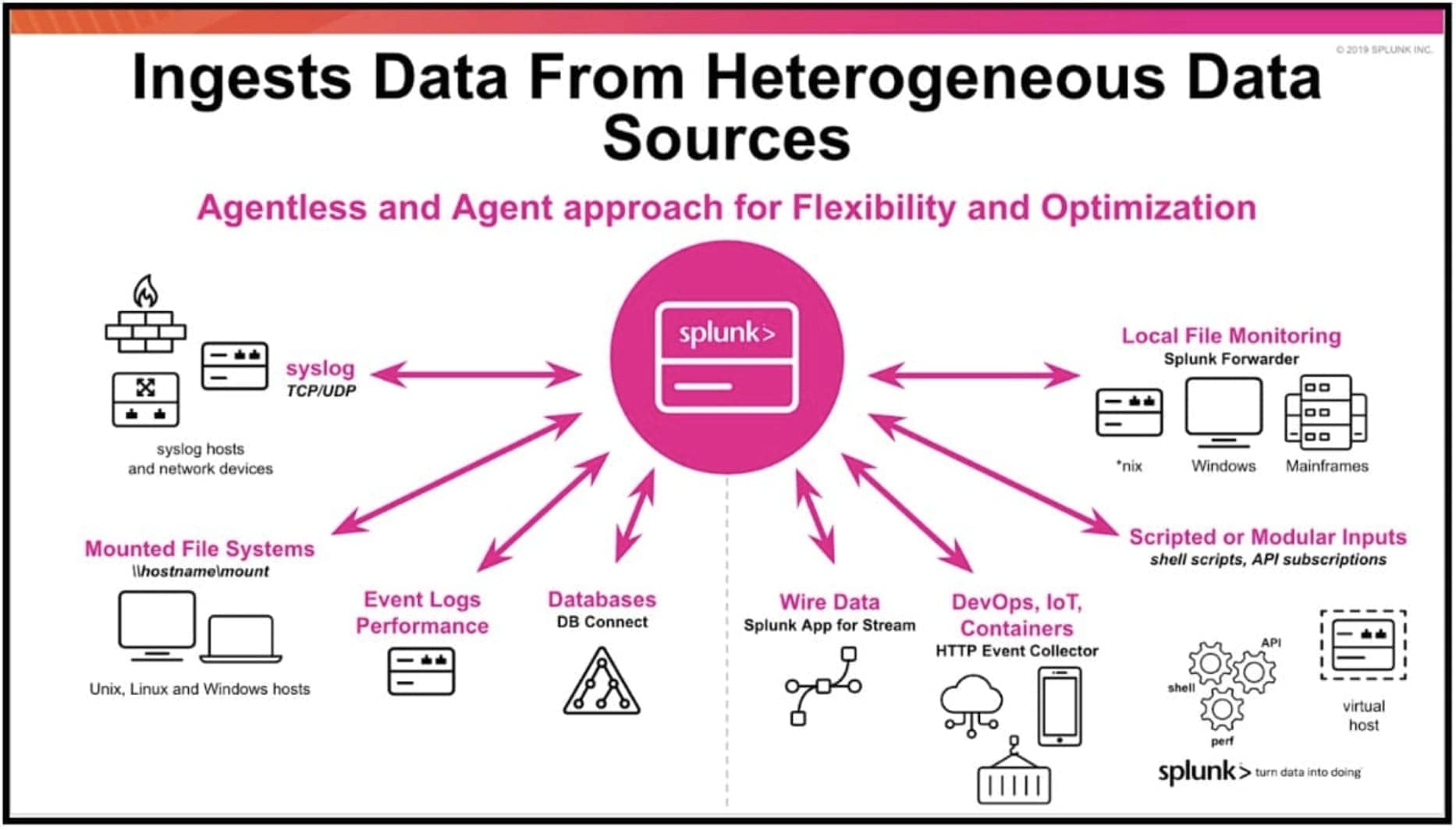

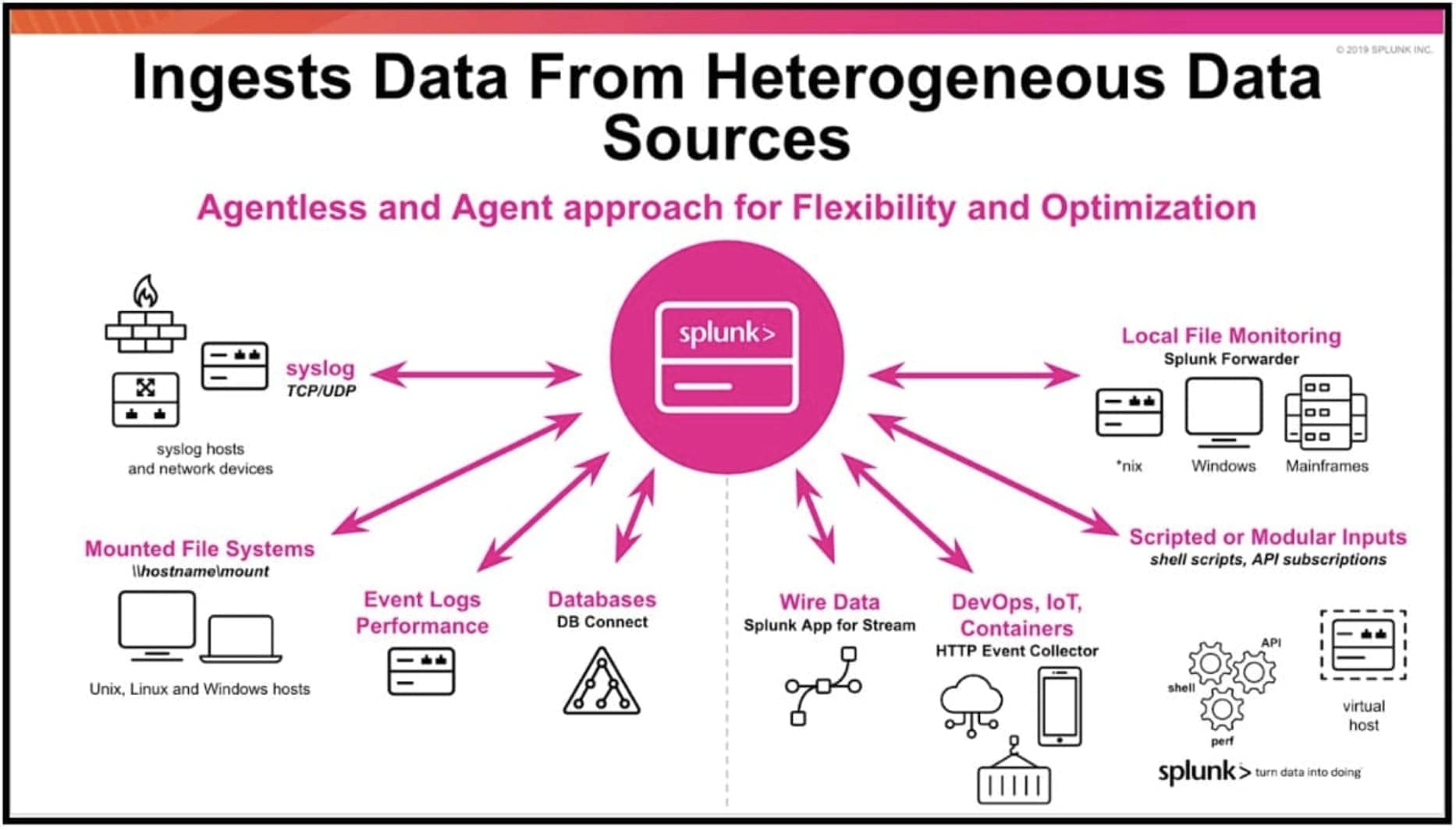

4. Lots of data sets (what Splunk calls GDI, or getting data in) - Splunk can ingest any human-readable data. In fact, there are over 2,000 technical add-ons and apps on Splunkbase to help with this, written by technology vendors, Splunk and the community, and vetted by Splunk, which makes getting data in even easier. And it’s not just agent-based collection; the platform supports agentless approaches too.

5. Storage - multiple storage options let you choose the most economical way to store data securely while ensuring it remains available.

6. Performance - Splunk’s data platform uses patented technology to ingest, process, store, analyse and visualise the varied data sets across the observed platform as fast as possible, so you receive your insights when it matters most.
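As a rough illustration of principles 1 and 3, here is a minimal Python sketch of the schema-at-read idea: raw events are kept exactly as they arrived, fields are extracted with a regular expression only when a "search" runs, and two differently formatted data sets are then correlated on a shared user ID. The log formats, field names and the extract/correlate helpers are all hypothetical; in Splunk itself this is done in SPL, not Python.

```python
import re
from collections import defaultdict

# Hypothetical raw events, stored exactly as they arrived: no schema applied at ingest.
WEB_LOGS = [
    "2024-05-01T10:00:01 GET /basket user_id=u42 status=200",
    "2024-05-01T10:00:09 GET /checkout user_id=u42 status=500",
    "2024-05-01T10:01:15 GET /basket user_id=u7 status=200",
]
PAYMENT_LOGS = [
    '{"ts": "2024-05-01T10:00:10", "user": "u42", "result": "declined"}',
]


def extract(events, pattern):
    """Apply a field-extraction regex at read time; non-matching events are skipped."""
    regex = re.compile(pattern)
    for raw in events:
        match = regex.search(raw)
        if match:
            # Extracted fields exist only in the search result, not in storage.
            yield {**match.groupdict(), "_raw": raw}


def correlate(*result_sets, key="user_id"):
    """Group events from several data sets by a shared field, ordered by time."""
    journeys = defaultdict(list)
    for results in result_sets:
        for event in results:
            journeys[event[key]].append(event)
    for events in journeys.values():
        # ISO 8601 timestamps in the same format sort chronologically as strings.
        events.sort(key=lambda e: e["ts"])
    return dict(journeys)


# Two formats, two different field names for the user, one "search".
web = extract(WEB_LOGS, r"^(?P<ts>\S+) .*user_id=(?P<user_id>\w+)")
payments = extract(PAYMENT_LOGS, r'"ts": "(?P<ts>[^"]+)", "user": "(?P<user_id>\w+)"')
journeys = correlate(web, payments)

for event in journeys["u42"]:
    print(event["_raw"])
```

Because nothing was parsed at storage time, a different extraction or a different correlation key can be tried on the same raw events later without re-ingesting them, which is the practical benefit of schema-at-read.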

Splunk’s New Ingest Processor

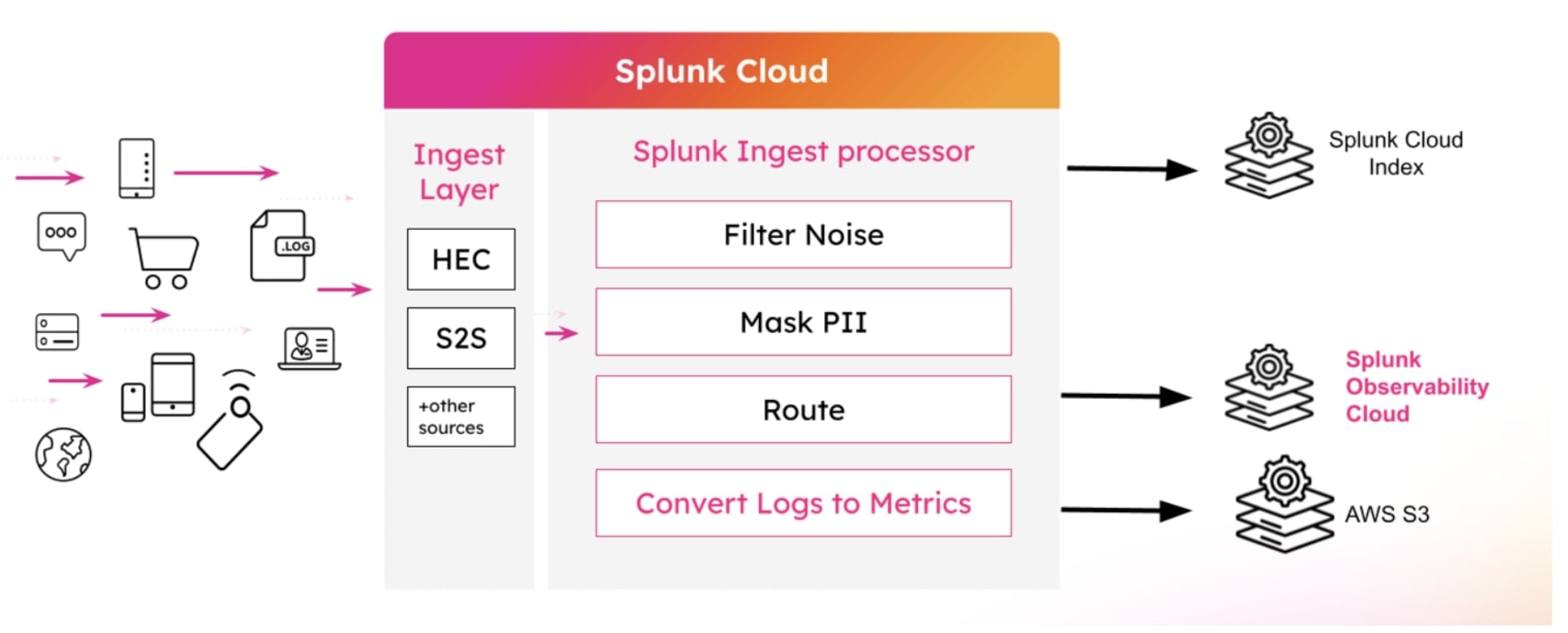

What is the Ingest Processor? It is a new service, part of your Splunk Cloud stack, that observes all incoming data before it is ingested into Splunk. Because it sits within the data stream itself, you can do some really powerful things to the data, making it much more valuable and focused on what you need it for. Another bonus is that it can help you use your Splunk license far more efficiently, by ensuring you ingest the right data: the data that really matters for solving issues and providing intelligence about your platform. Once processed, the data is forwarded to Splunk for ingestion into the Splunk data platform.

What Can You Do With It?

The benefits of the Ingest Processor include:

- Filtering and masking data - filter out events you don’t need and mask sensitive values, ensuring only the data you need is ingested into Splunk.

- Data Removal - any data that is irrelevant, duplicated or too noisy can be easily removed from the data stream and therefore not ingested into Splunk. This ensures that the data that is ingested is indeed the critical data needed to troubleshoot and solve issues.

- Data Enrichment - you can enrich the data by adding or creating additional key fields or combining data from other data sets into one.

- Metricising Logs - creating metrics from logs provides additional visibility into the observed platform, from early, proactive warnings of issues through to key information about customer and system behaviour. See here for a quick example of converting JSON logs into metrics.

- Data Routing - once the data is processed, it can be routed to the Splunk data platform for ingestion, to an Amazon S3 bucket and/or to Splunk Observability Cloud.

- Data Pipeline Templates - pre-built templates and a multi-step walkthrough wizard let you start using the Ingest Processor quickly and enable the key use cases above. Click here to get started with Pipeline Templates.
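The capabilities above can be pictured as a chain of steps applied to each event in the stream before it reaches any destination. The following is a minimal Python sketch of that idea, not Splunk’s implementation: the event shape, the HOST_OWNERS lookup, the card-number masking regex, the per-level counter standing in for a metric and the destination names are all hypothetical. In the Ingest Processor these steps are written as SPL2 pipelines rather than Python.

```python
import re
from collections import Counter

# Hypothetical enrichment lookup: host -> owning team.
HOST_OWNERS = {"web-01": "checkout", "web-02": "search"}

# Hypothetical masking rule: a 16-digit card number keeps only its last 4 digits.
CARD_RE = re.compile(r"\b\d{12}(\d{4})\b")


def process(events):
    """Filter, de-duplicate, mask, enrich and metricise a batch of events."""
    seen = set()
    kept = []
    level_counts = Counter()  # a simple metric derived from the logs
    for event in events:
        # Filtering: drop noisy debug events entirely.
        if event["level"] == "DEBUG":
            continue
        # Data removal: drop exact duplicates of an event already seen.
        fingerprint = (event["host"], event["message"])
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        # Masking: redact sensitive values before they are stored anywhere.
        message = CARD_RE.sub(r"************\1", event["message"])
        # Enrichment: add a field from a lookup table.
        enriched = {**event, "message": message,
                    "team": HOST_OWNERS.get(event["host"], "unknown")}
        kept.append(enriched)
        # Metricising: count events per level.
        level_counts[event["level"]] += 1
    return kept, dict(level_counts)


def route(event):
    """Pick a hypothetical destination: errors to the index, the rest to archive."""
    return "splunk_index" if event["level"] == "ERROR" else "s3_archive"


EVENTS = [
    {"host": "web-01", "level": "ERROR", "message": "payment failed card=4111111111111111"},
    {"host": "web-01", "level": "ERROR", "message": "payment failed card=4111111111111111"},
    {"host": "web-02", "level": "DEBUG", "message": "cache hit"},
    {"host": "web-03", "level": "INFO", "message": "user logged in"},
]

kept, metrics = process(EVENTS)
for event in kept:
    print(route(event), event["team"], event["message"])
print(metrics)
```

Of the four sample events, the duplicate and the debug line never leave the pipeline, the card number is masked before storage, and the per-level counts summarise the stream without keeping every line.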

What is SPL2?

Building data pipelines in the Ingest Processor is easy because it uses SPL2, a version of SPL designed to process data both in motion (in the stream) and at rest (at search time). With an easy-to-use pipeline interface and a walkthrough wizard, you can quickly create a data pipeline and route the data where you want it to go. You can also test the pipeline against your data to make sure it works and produces the output you want before you deploy it.
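The idea of testing a pipeline against sample data before deployment can be sketched in Python as follows. This is a hypothetical illustration of the workflow, not the Ingest Processor’s own testing feature: a pipeline is modelled as a list of step functions (each returns a modified event, or None to drop it), run over a handful of sample events, and checked against the output you expect before it goes live. The step functions and event fields are invented for the example.

```python
from typing import Callable, Optional

Event = dict
Step = Callable[[Event], Optional[Event]]


def drop_health_checks(event: Event) -> Optional[Event]:
    """Hypothetical step: discard load-balancer health-check noise."""
    return None if event.get("path") == "/health" else event


def add_env(event: Event) -> Optional[Event]:
    """Hypothetical step: tag every event with its environment."""
    return {**event, "env": "prod"}


def run_pipeline(steps: list[Step], events: list[Event]) -> list[Event]:
    """Apply each step in order; once a step drops an event, it goes no further."""
    output = []
    for event in events:
        for step in steps:
            event = step(event)
            if event is None:
                break
        if event is not None:
            output.append(event)
    return output


def preview(steps: list[Step], samples: list[Event], expected: list[Event]) -> bool:
    """Run the pipeline on sample events and report whether it behaves as expected."""
    actual = run_pipeline(steps, samples)
    print(f"{len(samples)} in, {len(actual)} out, {len(samples) - len(actual)} dropped")
    return actual == expected


samples = [{"path": "/health"}, {"path": "/checkout"}]
expected = [{"path": "/checkout", "env": "prod"}]
ok = preview([drop_health_checks, add_env], samples, expected)
print("safe to deploy" if ok else "fix the pipeline first")
```

Comparing against an explicit expected output, rather than just eyeballing results, makes it obvious when a step drops or rewrites more than intended.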

In summary, the Ingest Processor will revolutionise the use of log data within your environment. It makes the data much more relevant and focused, enabling quicker identification of issues and faster troubleshooting and resolution, as well as letting you interrogate that log data to understand both customer and system behaviour. Check out the links below for further reading:

- See the Ingest Processor in action with this short video.

- Learn all about SPL2 with the search reference docs.

- Read all about the Ingest Processor on Splunk docs.

- Use Data Pipeline Templates to quickly set up the Ingest Processor.

- Metricising Logs - an example of converting a JSON log to metrics.

Thanks to my colleague and fellow blogger, Ben Lovley, for his contribution to this blog. Check out Ben’s blogs here and learn more about doing cool things with data and Splunk.


About Splunk

The world’s leading organizations rely on Splunk, a Cisco company, to continuously strengthen digital resilience with our unified security and observability platform, powered by industry-leading AI.

Our customers trust Splunk’s award-winning security and observability solutions to secure and improve the reliability of their complex digital environments, at any scale.