Using Splunk for SEO Log File Analysis

Here at Splunk, we know a thing or two about log files. Splunk Enterprise and Splunk Cloud, our flagship products, are some of the best tools in the industry for understanding logs, searching for anomalies, creating dashboards and visualizations, setting up alerts and doing all sorts of useful things with log files.

Many teams within Splunk use our own products for a variety of use cases, and the SEO Team, within the Growth Marketing organization, uses Splunk Cloud for SEO log file analysis. This blog post will showcase just a few of the ways we’re using Splunk to improve our own technical SEO.

Quick Resources:

- Register for Splunk Cloud Platform – free for 14 days

- Learn more about SPL in this Primer and Cookbook

- Learn the basics and how to get started with Splunk

Status 5xx Monitoring

When Googlebot sees a Status 500 response, or another 5xx error, it’s an indication that there is an issue with the server. This is different from a 404, in which the server is responding but the requested content simply isn’t available.

Many websites are configured to serve a Status 500 response to malicious bots. Websites will also frequently show this response if the server is overloaded with requests, such as during a DDoS attack. And at its most basic, if the server is offline then users will typically receive a 5xx error.

It’s certainly important for users to never – or rarely – receive a Status 500 error when they are using a website. It’s also important for Googlebot to not see this message. If Googlebot sees this critical error response, it will try the URL again. If it continues to receive the response, it will keep requesting the URL at a slower rate (as requesting too quickly can be the cause of the error). Eventually, though, if a large section of a website consistently gives 500 errors, Googlebot will stop crawling the site and stop serving the pages to users of Google Search.

Google wants to send users to the best content on the web – and Google certainly doesn’t want their users to have a bad time by clicking on search results that take them to Status 500 errors!

Google has also discussed how it handles Status 500 errors in a Google Office Hours video.

To get started finding Status 500 errors, we can use a Search Processing Language (SPL) query such as:

index="webmkt" uri_path="/en_us/*" "googlebot" status="5*"

After this, we can dive into more advanced and refined queries, correlate outages with on-site changes from specific timeframes, and determine if the issues causing the errors have been resolved. Additionally, using the “Save As” dropdown menu we can easily save this (or any) view as a Report, Dashboard Panel, or an Alert.
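As a rough sketch of what a more refined query might look like, the search below splits Googlebot's 5xx responses by status code and by day, which can make it easier to line up spikes with on-site changes. It reuses the index and fields from the query above; the one-day span is simply an assumption for illustration and should be adjusted to the timeframe being investigated.

```spl
index="webmkt" uri_path="/en_us/*" "googlebot" status="5*"
| timechart span=1d count by status
```

Saved as a Dashboard Panel, a chart like this gives a quick view of whether an outage has actually been resolved or is still recurring.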

Keep in mind that some Status 5xx errors are intentional and beneficial! For example, if you’ve identified a bot or crawler that is causing problems for your website, you might decide to block it, and in that case it is a good sign to see certain User-Agents or IP addresses getting a 5xx response.

We can look at all User-Agents (no longer restricting to Googlebot) by setting the time range to the last 30 days and using this query:

index="webmkt" uri_path="/en_us/*" status="5*"

In our results, it’s easy to see that exactly 3 days last month were responsible for the bulk of the activity. Looking more closely, patterns start to appear. The #1 source is the Screaming Frog User-Agent, which is being blocked. This is intentional, expected, and not a cause for concern. The #2 and #3 sources, though, have nearly identical fingerprints, with very odd matching query strings on the URLs. This traffic is probably not from a normal human website user, and it doesn’t appear to come from any known benevolent bot.
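One way to surface this kind of pattern is to group the 5xx responses by who is making the requests. The sketch below assumes the web logs expose `useragent` and `clientip` fields (common for standard access-log sourcetypes, but an assumption here, so substitute whatever your data uses); the cutoff of 20 rows is arbitrary.

```spl
index="webmkt" uri_path="/en_us/*" status="5*"
| stats count dc(uri_path) as distinct_paths by useragent, clientip
| sort - count
| head 20
```

A source with a very high count against only a handful of distinct paths is often worth a closer look, whether it turns out to be an intentionally blocked crawler or something less friendly.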

Monitoring Googlebot Visits

Using Splunk’s built-in Visualization, we can see how Googlebot is increasingly visiting a new website section. Set the timeframe to the last year and use this query:

index="webmkt" uri_path="/en_us/blog/learn/*" googlebot | timechart span=1d count

We might edit the query to identify the top 100 URLs getting visits from Googlebot in a given timeframe:

index="webmkt" uri_path="/en_us/blog/learn/*" googlebot | top limit=100 uri

Or further adjust the query to find the URLs which had the fewest visits from Googlebot (but still, at least 1 visit):

index="webmkt" uri_path="/en_us/blog/learn/*" googlebot | rare uri
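Because `rare` returns only 10 results by default, a longer list of rarely crawled URLs may be easier to build with `stats`. The following is a sketch under the same assumptions as the queries above; the `visits` and `last_visit` names are ours, and the limit of 100 is arbitrary.

```spl
index="webmkt" uri_path="/en_us/blog/learn/*" googlebot
| stats count as visits, max(_time) as last_visit by uri
| sort visits
| head 100
| eval last_visit=strftime(last_visit, "%Y-%m-%d")
```

Including the date of the most recent visit helps separate pages Googlebot has only just discovered from pages it has stopped returning to.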

Website Analytics

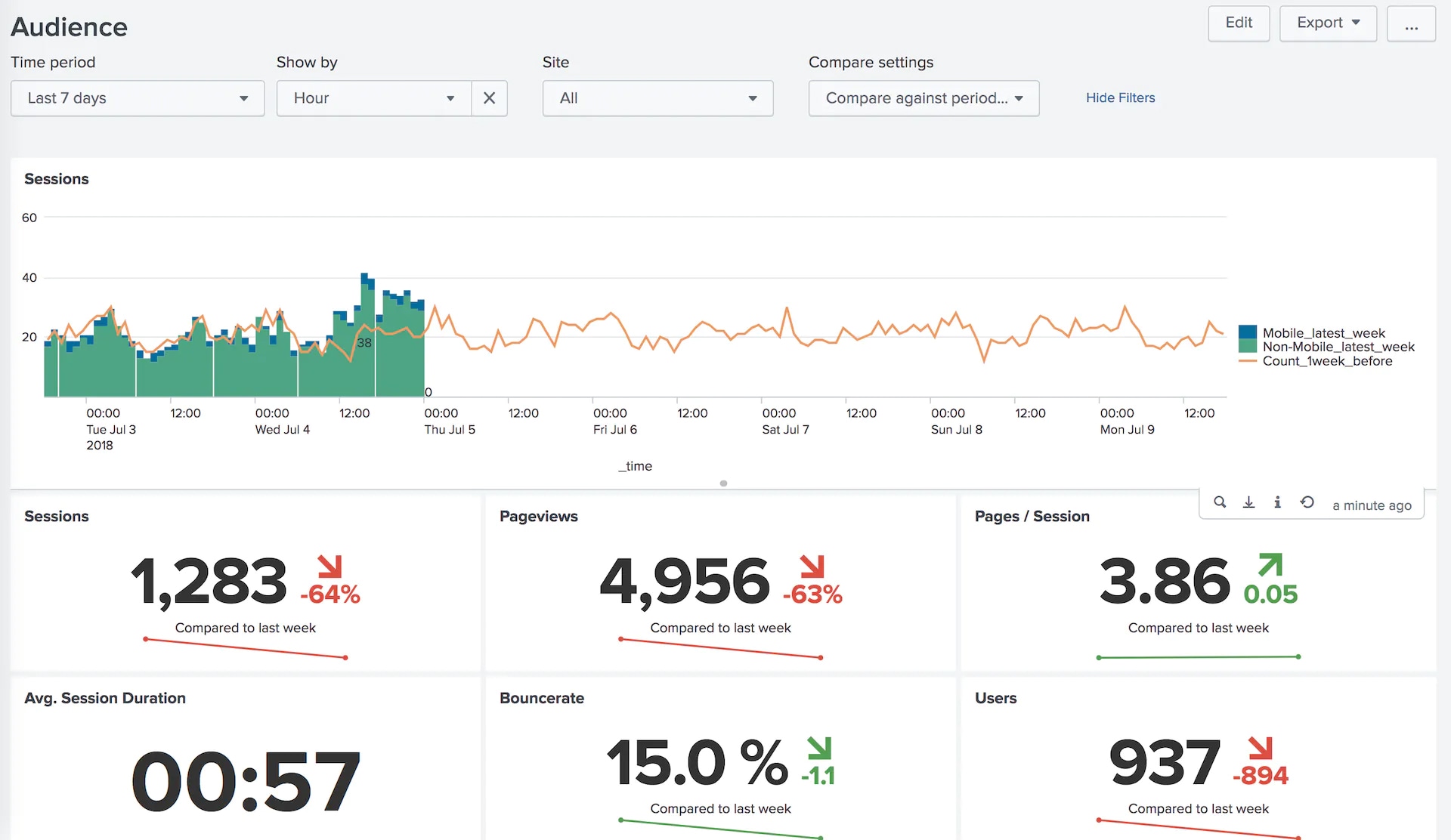

While Splunk Enterprise and Cloud are not normally a replacement for something like Google Analytics, they can be used for analytics, and in some cases it makes a lot of sense. If your company wants to get website analytics without using JavaScript or cookies, Splunk App for Web Analytics can give you access to analytics data similar to Google Analytics or Adobe Analytics, for both historical data and new real-time data streams. You can learn more about how it works in this blog post.

If your company relies on Google Analytics, Adobe Analytics, or any other common website analytics tool, Splunk can still help as a backup. It’s an unfortunate reality that sometimes mistakes happen on production – including losing analytics tracking codes. If you have Splunk running, even without using Splunk App for Web Analytics, it can serve as a quick replacement for your normal website analytics tools if you are in a bind.

Suppose, for example, that our website has experienced a Google Analytics outage over the past couple of weeks, so we’re having trouble confirming our traffic is stable or growing. We need to report on traffic entering a particular site section coming from Google – which can serve as a rough approximation for Natural Search traffic in a pinch.

Using a query like:

index="webmkt" uri_path="/en_us/blog/learn/*" referer_domain="*google*" status="200"

We can clearly see growth in traffic to the site section from the referrer we care about.

A similar approach could be used to track website visits with specific query strings or other tracking codes, and to understand where that traffic is coming from.
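As an illustration of the query-string case, the sketch below counts visits carrying a campaign parameter and shows which referrers they came from. It assumes the logs expose a `uri_query` field and that the tracking parameter is named `utm_campaign`; both are assumptions to replace with whatever your site actually uses.

```spl
index="webmkt" uri_path="/en_us/blog/learn/*" uri_query="*utm_campaign=*" status="200"
| rex field=uri_query "utm_campaign=(?<campaign>[^&]+)"
| stats count by campaign, referer_domain
| sort - count
```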

Using a slightly edited query:

index="webmkt" uri_path="/en_us/blog/learn/*" referer_domain="*google*" status="200" | top limit=10 uri

…and along with the built-in Visualization tools, we can easily see the top 10 blog posts in a section over the last month or year, and compare with a prior period to understand growth or decline in traffic by URL or folder.
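For the comparison with a prior period, one possible approach is the `timewrap` command, which overlays consecutive periods of a `timechart` on the same axes. This is a sketch rather than the exact search we run; the four-week window and the weekly wrap are arbitrary choices.

```spl
index="webmkt" uri_path="/en_us/blog/learn/*" referer_domain="*google*" status="200" earliest=-4w@w latest=@w
| timechart span=1d count
| timewrap 1w
```

Each of the four weeks becomes its own series, so a week-over-week rise or dip in Google-referred traffic is visible at a glance.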

Finding 404s

404s generally aren’t a bad thing – they’re a natural part of a website. Sometimes a page needs to be unpublished and isn’t being moved or replaced with anything. In these cases, it’s helpful to use a 404 page to let users know that the thing they’re looking for is no longer available. Of course, you never want to link to a 404 error! Typically a website crawler like Screaming Frog is the best option for finding links to 404 errors on your website.

But there is another scenario: when visitors are hitting 404s on your website, but you aren’t linking to them. This can happen if you’ve retired a page and stopped linking to it, but there are still emails, applications, or other websites pointing to the old, now-404 URLs. You need to discover these URLs in order to investigate further and consider taking action (such as updating, editing, or removing the offending link on some other platform). This is the type of 404 that Screaming Frog can never alert you to.

In this case, we can use an SPL query such as:

index="webmkt" status="404" uri_path="/en_us/*" | top limit=100 uri

Using this type of query, we can start to identify the top URLs in a particular site section and timeframe that are resulting in a 404 response. After refining and further investigation, we can begin to find referrers, and thus actionable fixes for these 404 errors.
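To move from the 404 URLs to where visitors are coming from, the query can be extended to pair each URL with its referrer. This sketch assumes the logs expose the full referrer in a field named `referer`, which is an assumption to check against your own data.

```spl
index="webmkt" status="404" uri_path="/en_us/*"
| stats count by uri, referer
| sort - count
| head 100
```

Requests that arrive without a referrer (for example from email clients or typed-in URLs) typically show a placeholder value such as "-", so those rows point to the URL needing a fix but not to the source of the link.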

These same techniques are perfect for identifying new 404 errors occurring directly after the launch of a major website change, redesign, migration, etc. By looking at specific dates before and after major launches, it’s easy to find clues for errors that users are experiencing.
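A before-and-after comparison might be sketched as follows. The dates are entirely hypothetical (a launch on 2024-01-08 with one week on either side), and `period` is a field we create for the comparison; the query keeps only URLs that returned 404s after the launch but none before it.

```spl
index="webmkt" status="404" uri_path="/en_us/*" earliest="01/01/2024:00:00:00" latest="01/15/2024:00:00:00"
| eval period=if(_time < strptime("2024-01-08", "%Y-%m-%d"), "before", "after")
| chart count over uri by period
| where after > 0 AND (isnull(before) OR before = 0)
| sort - after
```

URLs at the top of this list are the most requested pages that started failing only after the change, which usually makes them good first candidates for a redirect.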

Wrapping Up

This is just the tip of the iceberg of some of the use cases for Splunk for SEO.

This posting does not necessarily represent Splunk's position, strategies or opinion.
