Introduction to Reinforcement Learning

Like humans, machines can learn from experiences (and mistakes)! This concept is known as reinforcement learning, and it’s at the core of some of the most prominent AI breakthroughs in the last decade.

Large language models (LLMs) including ChatGPT, the Nobel Prize-winning protein structure prediction technology AlphaFold, and AlphaGo, the machine that won a historic victory against a human champion at the complex ancient Chinese game of Go, all incorporate reinforcement learning algorithms.

In this article, we will review key reinforcement learning concepts and approaches, as well as the advancements behind the latest technological breakthroughs.

What is reinforcement learning?

Reinforcement learning (RL) refers to an AI methodology in which an agent interacts with its environment and learns an optimal policy that maximizes a reward function.

This concept is partly inspired by animal psychology, especially the concepts of:

- Learning from trial and error

- Rewards and punishments

- Exploration vs. exploitation of resources

- Learning a policy that maps an environment state to the behavior of the agent

How is reinforcement learning different from supervised and unsupervised learning?

Reinforcement learning shares some ideas with supervised learning, but its approach and goal are quite different, and it differs from unsupervised learning as well.

In the supervised learning approach, you create a model that learns to map input data to known labels. For example, given a set of images of animals, each correctly labeled with the animal’s name, a supervised learning model learns how different features within an image map to its corresponding label (the label is available, hence “supervised”).

In the unsupervised learning approach, the labels of such images are not explicitly provided. Instead, the model extracts features within the images to separate them. Images with similar features are clustered together — each cluster is considered a separate label class.

In both supervised and unsupervised learning, when the model is exposed to new (unseen) images, it can use the features within an image to predict its corresponding label or cluster.

How reinforcement learning differs

Reinforcement learning is different:

- RL is not supervised, because no class labels are provided.

- RL is also not unsupervised, since the agent interacts with the environment to extract knowledge regarding the environment state.

The agent eventually learns to associate an action (through a policy) or a value with every state that it may encounter.

Another key difference exists: the goal for the agent is to learn how to act in the environment — not to learn patterns within data.

Reinforcement learning has roots in the field of optimal control. Together with supervised learning, it has helped produce some of the most promising AI breakthroughs. For example, Reinforcement Learning from Human Feedback (RLHF) combines RL algorithms such as PPO with human feedback to steer LLMs toward safe and secure behavior.

How reinforcement learning works

The agent interfaces with its surrounding environment by observing the current environment state and learning how each action (interaction) brings it to another state.

At each interaction step, the agent receives an input containing information about the present environment state. The agent then chooses an action to interact with the environment and transition to another state. A value is associated with this state transition in the form of a reinforcement signal — either reward or penalty.
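The interaction step described above can be sketched as a simple loop. This is a minimal illustration rather than a reference implementation: the `Corridor` environment, its reward values, and the `run_episode` helper are hypothetical names invented for this example.

```python
import random


class Corridor:
    """Hypothetical 1-D maze: states 0..4, start at 2, cat at 0, cheese at 4."""

    def reset(self):
        self.state = 2
        return self.state

    def step(self, action):
        # action is -1 (move left) or +1 (move right)
        self.state += action
        if self.state == 4:
            return self.state, 1.0, True    # reward: found the cheese
        if self.state == 0:
            return self.state, -1.0, True   # penalty: met the cat
        return self.state, 0.0, False       # neutral transition


def run_episode(env, policy, max_steps=100):
    """Run one episode and return the list of (state, action, reward) steps."""
    state = env.reset()
    trajectory = []
    for _ in range(max_steps):
        action = policy(state)                       # agent chooses an action
        next_state, reward, done = env.step(action)  # environment responds
        trajectory.append((state, action, reward))
        state = next_state
        if done:
            break
    return trajectory


rng = random.Random(0)
episode = run_episode(Corridor(), lambda state: rng.choice([-1, 1]))
print(episode)
```

Here the policy is purely random, so the agent has not learned anything yet. A learning algorithm would use the recorded rewards to improve the policy between episodes.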

The agent’s policy should maximize a long-run measure of reward, not just the immediate reward. The agent learns this policy systematically over time using trial and error.
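One common way to make a long-run measure of reward concrete is the discounted return, where a reward received k steps in the future is weighted by a discount factor raised to the power k. The article does not commit to a specific measure, so the discount factor `gamma` and the function name below are assumptions of this sketch.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards where a reward k steps ahead is weighted by gamma**k."""
    total = 0.0
    # Work backwards so each step adds its reward to the discounted future.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# A reward of 1.0 that arrives two steps from now, discounted by 0.5 per step.
print(discounted_return([0.0, 0.0, 1.0], gamma=0.5))  # 0.25
```

A `gamma` close to 1 makes the agent value distant rewards almost as much as immediate ones, while a small `gamma` makes it short-sighted.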

Simple demo

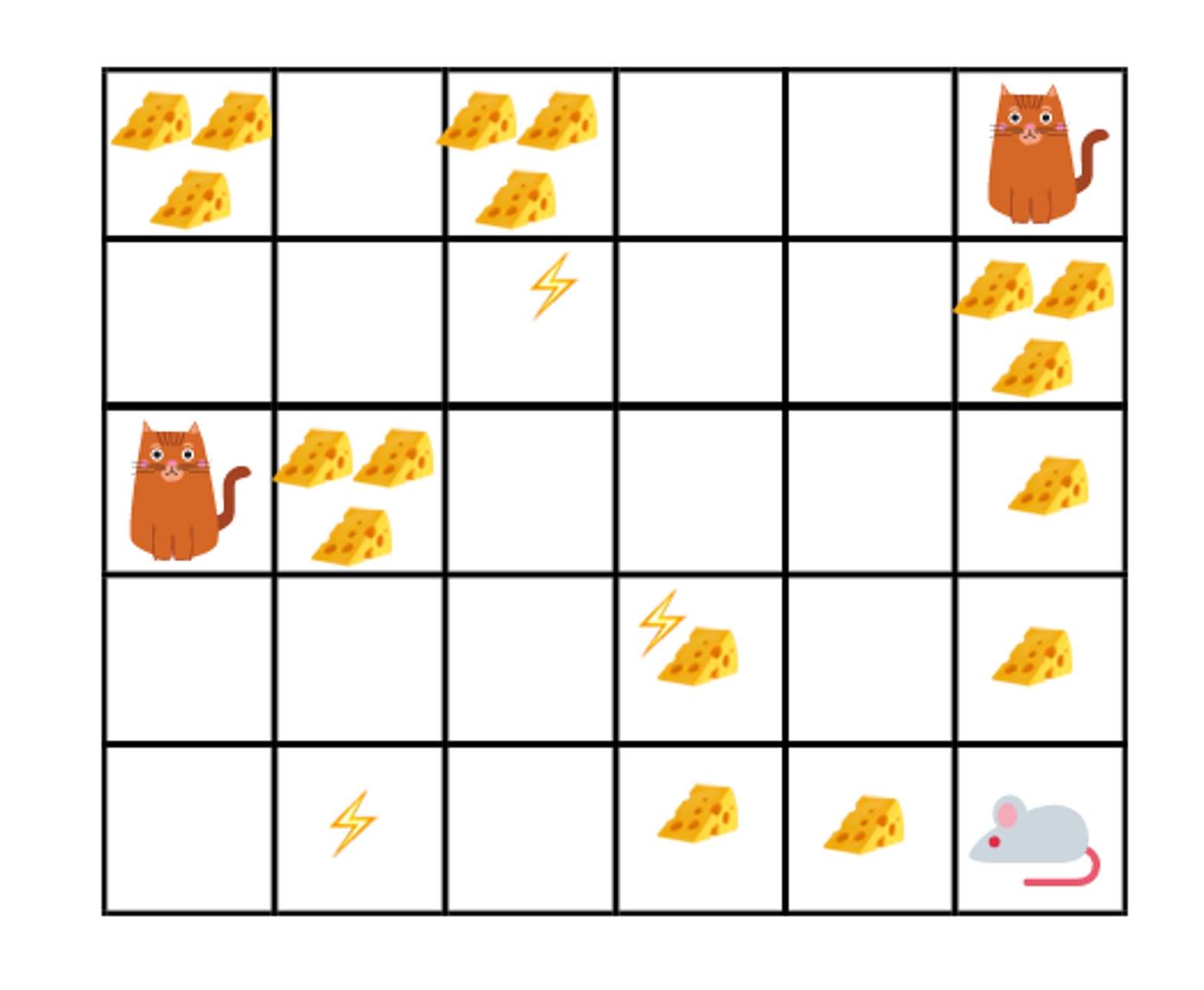

Here’s a simple demonstration of a reinforcement learning model, where a mouse (the agent) has to traverse the maze (the environment) to find cheese (the reward).

The goal of this agent is to maximize its long-term reward, and exploring the environment is how it discovers where that reward lies.

The environment also includes states where an adversary can meet the agent and reduce its accumulated reward. For example:

- Entering a state (any box in the maze) that contains a cat incurs a very high penalty.

- Entering a state that contains lightning incurs a lower penalty.

The mouse can aim for a smaller reward (a single cheese slice) or walk around the maze to find a larger reward (three slices of cheese in the same box). It can choose a strategy where it either:

- Risks exploring many states farther from its location in search of a higher cumulative reward.

- Or exploits nearby states for immediate reward.

This is essentially the exploration versus exploitation problem of reinforcement learning.
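A simple and widely used way to balance the two is the epsilon-greedy rule: with a small probability the agent explores a random option, and otherwise it exploits the option that currently looks best. The sketch below applies it to a two-option choice loosely modeled on the cheese example. The function name, the reward means, and the Gaussian noise are assumptions made for illustration.

```python
import random


def epsilon_greedy_bandit(true_means, epsilon=0.1, steps=2000, seed=0):
    """Estimate the average reward of each option while choosing between them."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms   # running average reward per option
    counts = [0] * n_arms        # how often each option was chosen
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                           # explore
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        reward = rng.gauss(true_means[arm], 1.0)                  # noisy reward
        counts[arm] += 1
        # Incremental update of the running average for the chosen option.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


# Option 0 pays about one slice of cheese, option 1 about three slices.
estimates, counts = epsilon_greedy_bandit([1.0, 3.0])
print(estimates, counts)
```

With `epsilon=0` the agent never explores and can get stuck on the first option it tries. With `epsilon=1` it never uses what it has learned.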

Approaches for reinforcement learning

An important question arises. What exactly should the agent learn in order to achieve its desired states? Reinforcement learning offers the following approaches:

Value-based learning

Here, the agent’s goal is to learn how valuable it is to be in a certain state or to take a specific action in that state.

The value estimates the long-term reward the agent can expect from that point onward. The agent then decides how to act by comparing state-action values and choosing the action with the highest estimate. Examples include:

- Q-Learning

- SARSA

- A2C: Advantage Actor-Critic (an actor-critic method, which learns a value function alongside a policy)
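To make the value-based idea concrete, here is a minimal sketch of tabular Q-learning on a hypothetical five-state corridor (cat at state 0, cheese at state 4). The environment, the reward values, and the hyperparameters are all assumptions chosen for illustration.

```python
import random

N_STATES, START, ACTIONS = 5, 2, (-1, 1)  # hypothetical corridor


def step(state, action):
    """Return (next_state, reward, done) for the toy corridor."""
    next_state = state + action
    if next_state == N_STATES - 1:
        return next_state, 1.0, True    # cheese
    if next_state == 0:
        return next_state, -1.0, True   # cat
    return next_state, 0.0, False


def greedy_action(q, state, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = START, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)            # explore
            else:
                action = greedy_action(q, state, rng)   # exploit
            next_state, reward, done = step(state, action)
            # Q-learning target: reward plus discounted best next value.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q = q_learning()
greedy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(1, N_STATES - 1)}
print(greedy)
```

The learned table `q` holds one value per state-action pair, and the greedy policy simply reads off the best action in each state.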

Policy-based learning

In this strategy, the agent learns a policy directly, that is, a function that maps states to actions, rather than deriving its behavior from learned values.

The policy explicitly specifies which action to take (or a probability distribution over actions) in any given state. This makes policy-based learning more effective in environments with high-dimensional or continuous action spaces. Examples include:

- PPO: Proximal Policy Optimization

- TD3: Twin Delayed Deep Deterministic Policy Gradient

- SAC: Soft Actor-Critic
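The algorithms listed above are considerably more involved, but the core idea of adjusting a policy directly can be shown with a much simpler method. The sketch below uses a basic REINFORCE-style policy gradient update (not PPO, TD3, or SAC) on a hypothetical two-action problem. The softmax parameterization, reward values, and learning rate are assumptions of this example.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into a probability distribution."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(rewards=(1.0, 3.0), lr=0.1, steps=500, seed=0):
    """Learn action probabilities directly, without a value table."""
    rng = random.Random(seed)
    prefs = [0.0] * len(rewards)   # policy parameters, one per action
    for _ in range(steps):
        probs = softmax(prefs)
        action = rng.choices(range(len(rewards)), weights=probs)[0]
        reward = rewards[action]   # deterministic reward, for simplicity
        for a in range(len(prefs)):
            # Gradient of log pi(action) with respect to prefs[a].
            grad_log = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += lr * reward * grad_log
    return softmax(prefs)


probs = reinforce_bandit()
print(probs)
```

The policy shifts probability toward the action that yields more reward without ever storing a value per action.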

Model-based learning

In this approach, the agent builds or uses a model that simulates the dynamics of the environment, that is, how actions move the environment from one state to another and what rewards result.

The agent uses this model to simulate future outcomes. The model is either given in advance or learned from experience. Because the agent can plan with simulated experience, this approach is useful for reducing sample complexity. Examples of model-based learning include:

- MCTS: Monte Carlo Tree Search

- MPC: Model Predictive Control

- DP: Dynamic Programming
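As an illustration of planning with a known model, the following sketch runs value iteration, a dynamic programming method, on a hypothetical five-state corridor. The `model` function, the rewards, and the discount factor are assumptions. The point is that the agent computes values by querying the model rather than by acting in the environment.

```python
N_STATES, ACTIONS, GAMMA = 5, (-1, 1), 0.9
TERMINAL = {0, 4}   # cat at state 0, cheese at state 4


def model(state, action):
    """Known dynamics: predict (next_state, reward) without real interaction."""
    next_state = state + action
    if next_state == 4:
        return next_state, 1.0
    if next_state == 0:
        return next_state, -1.0
    return next_state, 0.0


def value_iteration(tolerance=1e-6):
    """Repeatedly back up state values through the model until they settle."""
    values = [0.0] * N_STATES
    while True:
        delta = 0.0
        for s in range(N_STATES):
            if s in TERMINAL:
                continue
            outcomes = [model(s, a) for a in ACTIONS]
            best = max(r + GAMMA * values[s2] for s2, r in outcomes)
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tolerance:
            return values


values = value_iteration()
print([round(v, 2) for v in values])  # [0.0, 0.81, 0.9, 1.0, 0.0]
```

No trial and error is needed here because the model answers every "what if" question, which is why model-based methods can need fewer real interactions.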

Model-free learning

In this model-free approach, the agent does not model the environment dynamics. Instead, the agent simply relies on trial and error to learn.

Model-free learning is useful when the environment dynamics are unknown, intractable, or too complex to model. Examples include:

- DQN: Deep Q-Network

- DDPG: Deep Deterministic Policy Gradient

- A3C: Asynchronous Advantage Actor-Critic

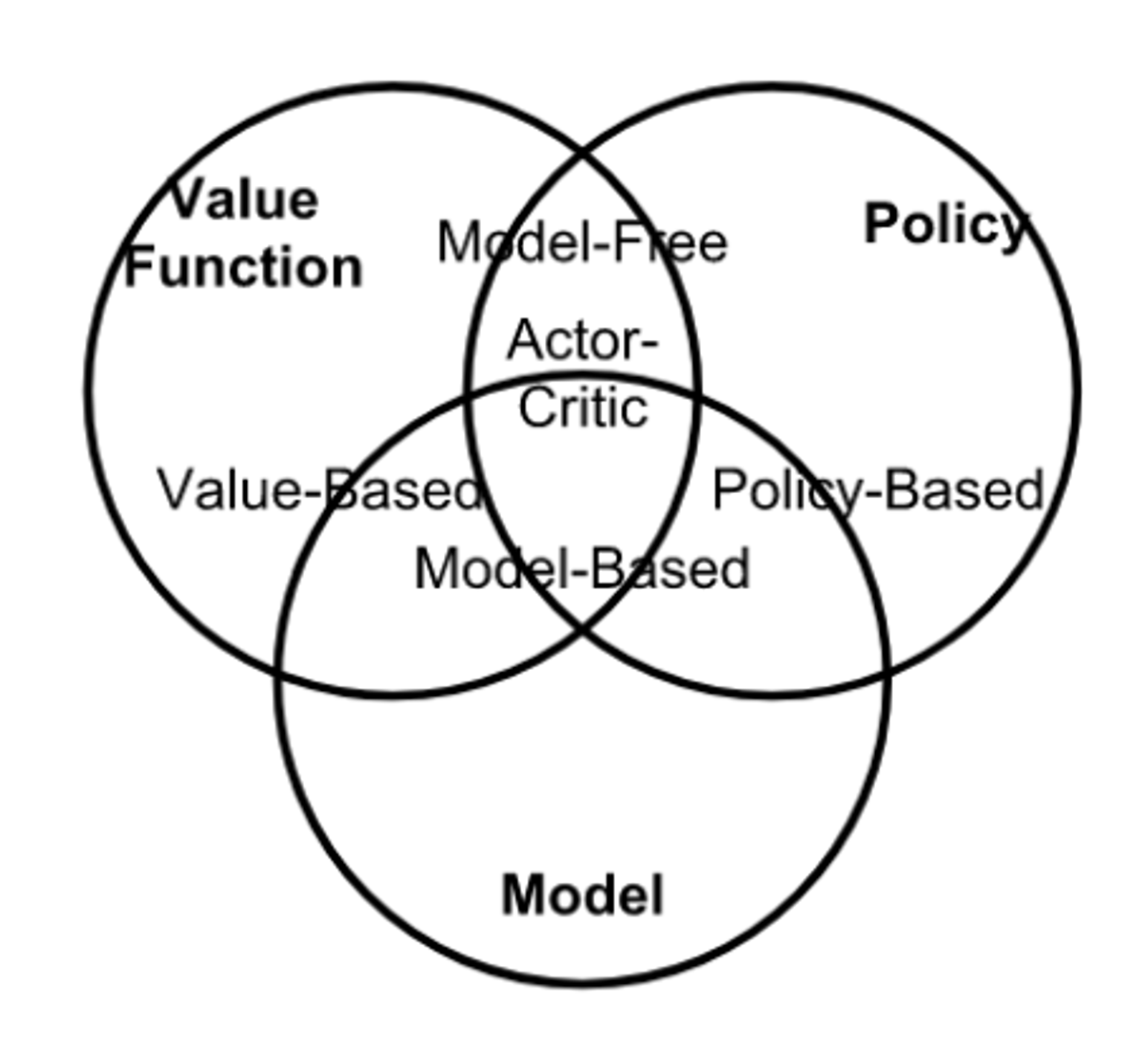

A comprehensive report on the latest state-of-the-art algorithms is available here. The image below demonstrates the overlap between these four approaches:

(Source)

Final thoughts

Reinforcement Learning provides a flexible and powerful framework for training agents that learn from their environment—not just from data. As RL continues to merge with deep learning, optimization, and human feedback, it's becoming a core pillar of intelligent decision-making in AI systems.

Whether it’s mastering ancient games, helping humans communicate with language models, or navigating the physical world, reinforcement learning continues to push the frontier of what machines can learn to do.
