Mean Time To Acknowledge: What MTTA Means and How & Why To Improve It

The sooner you know about a problem, the sooner you can address it, right? Imagine if you could do that in your most important apps and software.

Well, that’s exactly what MTTA measures. Let’s take a look.

What does MTTA mean?

Mean Time to Acknowledge (MTTA) is the average time between an alert being issued and a responder acknowledging the incident. To measure it, take the time from alert creation to acknowledgment for each incident in a given period, add those times together, and divide by the number of incidents.
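To make the calculation concrete, here is a minimal Python sketch. The function name and the sample timestamps are hypothetical and only there for illustration. It assumes each incident is recorded as a pair of alert and acknowledgment times.

```python
from datetime import datetime, timedelta

def mean_time_to_acknowledge(incidents):
    """Average (acknowledged - alerted) over incidents that were acknowledged."""
    deltas = [ack - alert for alert, ack in incidents if ack is not None]
    if not deltas:
        raise ValueError("no acknowledged incidents to average")
    return sum(deltas, timedelta()) / len(deltas)

# Hypothetical sample data: (alert created, alert acknowledged)
incidents = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 9, 4)),      # 4 minutes
    (datetime(2024, 1, 1, 13, 30), datetime(2024, 1, 1, 13, 41)),  # 11 minutes
    (datetime(2024, 1, 2, 2, 15), datetime(2024, 1, 2, 2, 18)),    # 3 minutes
]

mtta = mean_time_to_acknowledge(incidents)
print(mtta)  # 0:06:00
```

Alerts that were never acknowledged are skipped here. Whether to exclude them or count them up to a cutoff time is a reporting choice your team would need to make.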

This failure metric is used to evaluate the performance of incident management teams in responding to an alert system. It uncovers both:

- The effectiveness of the monitoring mechanism.

- The capacity of the incident management team to respond to the issued alert.

(Related reading: reliability metrics for IT systems.)

What MTTA can tell us

At the highest level, MTTA is helpful for any effort to improve or enhance service dependability — which includes availability, reliability and effectiveness of an IT service. Failure to detect and therefore respond to an IT incident translates into a steep cost of downtime:

- 84% of surveyed organizations lose data thanks to a downtime incident.

- A 2024 survey uncovered that over two-thirds of businesses face unplanned system outages at least once in a month, costing about $125,000 per hour.

- Global 2000 companies lose approximately 9% of their profit because of unplanned downtime. That's an estimated annual loss of about $400 billion in total.

The importance of MTTA: how it helps the business

A focus on MTTA can help teams become more proactive. Prompt acknowledgment enables organizations to:

- Reduce the impact of downtime, thus demonstrating a commitment to reliability to employees and customers alike.

- Foster accountability, since acknowledgment is the first step to resolving an incident.

- Support continuous improvement by detecting and taking care of bottlenecks in the workflow of monitoring and incident response.

MTTA, along with other metrics we'll look at later in the article, all support your incident management execution and the overall dependability of the services you provide.

MTTA at network scale

MTTA isn’t just about monitoring for specific alerts. The challenge facing incident management teams primarily centers around the scale of networking operations.

Network nodes and endpoints generate large volumes of log streams in real-time. These logs describe every networking activity including:

- Traffic flows

- Connection requests

- TCP/IP protocol data exchange

- Network security information at a large scale

Advanced monitoring and observability tools use this information to make sense of every alert — but not every alert signals a potential incident.

Some tools are designed to recognize patterns of anomalous network behavior. Since the network behavior evolves continuously, predefined instructions cannot accurately determine the severity of impact represented by an alert.

Instead, the behavior of networking systems is modeled and generalized by a statistical system, such as a machine learning model and increasingly AI. Deviations from the normal behavior are classified as anomalous and therefore mandate an acknowledgement action from the incident management teams.

Since these models generalize the system behavior, which is continuously changing, some important alerts go under the radar, while most of the common and less important alerts do not necessitate a control action. Additionally, it may take several alerts in succession to definitively point to an incident that mandates a control action — which may not be entirely automated.

Together, these effects introduce delay at two points: before an accurate alert is issued, and between the alert and its acknowledgment by the incident management team.
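As a rough illustration of why several alerts in succession may be needed, the sketch below flags a sample as anomalous when it deviates strongly from a baseline, and escalates only after a run of consecutive anomalies. The z-score rule, the thresholds, and the sample values are assumptions chosen for the example. They stand in for whatever statistical model a real tool would use.

```python
from statistics import mean, stdev

def alerts_needing_ack(baseline, samples, z_threshold=3.0, min_consecutive=3):
    """Return indices in `samples` where an alert should be escalated.

    A sample is anomalous when its z-score against `baseline` exceeds
    `z_threshold`. Escalation fires once per run, at the moment the run
    reaches `min_consecutive` anomalies in a row.
    """
    mu, sigma = mean(baseline), stdev(baseline)
    escalations, streak = [], 0
    for i, value in enumerate(samples):
        is_anomalous = sigma > 0 and abs(value - mu) / sigma > z_threshold
        streak = streak + 1 if is_anomalous else 0
        if streak == min_consecutive:
            escalations.append(i)
    return escalations

# Hypothetical traffic measurements (for example, requests per second)
baseline = [100, 102, 98, 101, 99, 100, 103, 97]
samples = [101, 150, 99, 160, 155, 170, 165, 100]

result = alerts_needing_ack(baseline, samples)
print(result)  # [5]
```

The single spike at index 1 is ignored, while the sustained run starting at index 3 escalates at index 5. That wait is exactly the kind of delay that adds to the time before an incident is acknowledged.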

Ways to reduce MTTA

So, we understand that a long time to acknowledge means there are incidents causing all sorts of problems. Reducing MTTA, then, minimizes the damage from such incidents.

How do you reduce MTTA? The following best practices are key to reducing the Mean Time to Acknowledge of an IT incident:

Integrate data in a data platform

Information that can be used to issue an alert is generated in silos: network logs, application logs, network traffic data. This information must be integrated and collected in a consumable format within a scalable data platform. For example, data lakes or data lakehouses can acquire data of all structures and formats within a scalable cloud-based repository.
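A small sketch of what "a consumable format" could look like in practice: records from two silos are mapped onto one shared schema so they can be sorted and analyzed together. Both input formats, the field names, and the function names are hypothetical. A real data platform would handle this at far larger scale.

```python
from datetime import datetime, timezone

def normalize_network_log(line):
    """Parse a hypothetical 'epoch_seconds host message' network log line."""
    epoch, host, message = line.split(" ", 2)
    return {
        "timestamp": datetime.fromtimestamp(int(epoch), tz=timezone.utc),
        "source": "network",
        "host": host,
        "message": message,
    }

def normalize_app_log(record):
    """Map a hypothetical application log record onto the same schema."""
    return {
        "timestamp": datetime.fromisoformat(record["ts"]),
        "source": "application",
        "host": record["hostname"],
        "message": record["msg"],
    }

events = sorted(
    [
        normalize_app_log({"ts": "2024-01-01T00:00:05+00:00",
                           "hostname": "web-1", "msg": "HTTP 500 on /checkout"}),
        normalize_network_log("1704067200 10.0.0.7 connection reset by peer"),
    ],
    key=lambda event: event["timestamp"],
)
for event in events:
    print(event["timestamp"].isoformat(), event["source"], event["message"])
```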

(Know the difference between logs & metrics.)

Train ML/AI models continuously

Because network behavior changes rapidly, the mathematical models that represent this behavior must be adaptable, learning continuously. That means they also require continuous training on real-time data streams.
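Full model retraining is beyond a short example, but the idea of learning continuously from a stream can be sketched with a baseline that updates one sample at a time. The class below uses Welford's algorithm for a running mean and variance. The class name and the sample values are assumptions for illustration, and this is a stand-in for continuous training, not a machine learning model.

```python
class OnlineBaseline:
    """Running mean and sample variance, updated incrementally (Welford's algorithm)."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, value):
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)

    @property
    def variance(self):
        return self._m2 / (self.count - 1) if self.count > 1 else 0.0

baseline = OnlineBaseline()
for value in [100, 102, 98, 101, 99, 100, 103, 97]:  # hypothetical stream
    baseline.update(value)

print(baseline.mean, baseline.variance)
```

This version weights all history equally. To follow rapidly changing behavior, an exponentially weighted variant that favors recent samples may be a better fit.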

Implement real-time analytics for decision making

It is important to reduce the time to issue an accurate alert, especially when only a pattern or series of alerts can point to a specific incident. This requires real-time analytics processing capabilities to make sense of the acquired data.

Automate repeatable actions

Even after an alert is issued, incident management, risk management, and governance processes often delay the response because of the checks they require.

By integrating automation tools into your monitoring systems, you can reduce these delays. You’ll still want a risk, governance, and incident management framework to guide that automation and limit the risk of responding to incident alerts automatically.
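One way to picture automation with a guardrail is a routing rule that only auto-handles alerts that are both low severity and covered by a known runbook, sending everything else to a person. The `Alert` fields, severity labels, and policy below are hypothetical. Your own governance framework would define the real rules.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    name: str
    severity: str        # "low", "medium", or "high"
    known_runbook: bool  # True if a vetted, repeatable fix exists

def route_alert(alert, auto_severities=frozenset({"low"})):
    """Return "auto" when the alert is safe to handle automatically, else "human"."""
    if alert.known_runbook and alert.severity in auto_severities:
        return "auto"
    return "human"

print(route_alert(Alert("temp-disk cleanup", "low", True)))    # auto
print(route_alert(Alert("database failover", "high", True)))   # human
print(route_alert(Alert("unknown traffic spike", "low", False)))  # human
```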

Align to business value

Focus your resources on alert categories that have the largest impact on your…

- Business operations

- Service dependability for mission-critical functions

- End-user experience

It’s likely not viable to spend incident management resources on issues that do not directly impact SLA performance and service dependability. Instead, prioritize based on the biggest impact to users and the business.
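The prioritization above can be sketched as a simple weighted score across the three impact areas. The weights, the 1 to 5 impact ratings, and the alert category names are made-up values for illustration. In practice they would come from your own SLAs and business priorities.

```python
def prioritize(categories, weights):
    """Sort alert categories by weighted impact score, highest first."""
    def score(category):
        return sum(weight * category[key] for key, weight in weights.items())
    return sorted(categories, key=score, reverse=True)

# Hypothetical weights and 1-5 impact ratings per alert category
weights = {"business_ops": 0.4, "dependability": 0.4, "user_experience": 0.2}
categories = [
    {"name": "batch report delay", "business_ops": 2, "dependability": 1, "user_experience": 1},
    {"name": "checkout-api errors", "business_ops": 5, "dependability": 5, "user_experience": 5},
    {"name": "login latency", "business_ops": 3, "dependability": 4, "user_experience": 5},
]

ranked = [category["name"] for category in prioritize(categories, weights)]
print(ranked)  # ['checkout-api errors', 'login latency', 'batch report delay']
```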

Use modern incident management solutions

Incident management is a highly data-driven function. Traditional tools that follow fixed alert thresholds may require ongoing manual efforts to align the incident management performance with the service dependability goals of your organization.

Therefore, advanced incident management technologies are important for two key reasons:

- Acquiring data from a multitude of siloed sources.

- Making sense of data patterns to issue the most impactful alerts in real time.

Identify the root cause

Incidents, and therefore alerts, can be recurring unless the resolution procedure addresses the underlying cause. Identifying incident types that contribute significantly to your MTTA metric performance and understanding the root cause can help IT teams establish long-term and impactful resolution.

This reduces the burden on incident management teams to respond to repeated issues while potentially eliminating the underlying cause.
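To find the incident types that contribute most to MTTA, you can break the metric down by type and rank the results. The sketch below assumes each record is a pair of incident type and minutes to acknowledge. The type names and numbers are invented for the example.

```python
from collections import defaultdict

def mtta_by_type(records):
    """Return {incident_type: mean minutes to acknowledge}, slowest type first."""
    totals = defaultdict(lambda: [0.0, 0])  # type -> [sum of minutes, count]
    for incident_type, minutes in records:
        totals[incident_type][0] += minutes
        totals[incident_type][1] += 1
    averages = {t: total / n for t, (total, n) in totals.items()}
    return dict(sorted(averages.items(), key=lambda item: item[1], reverse=True))

# Hypothetical acknowledgment times in minutes
records = [
    ("disk-full", 12), ("disk-full", 18),
    ("cert-expiry", 4),
    ("dns", 30), ("dns", 20), ("dns", 40),
]

breakdown = mtta_by_type(records)
print(breakdown)  # {'dns': 30.0, 'disk-full': 15.0, 'cert-expiry': 4.0}
```

Types at the top of the list are natural candidates for root cause analysis.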

What about MTTF, MTTR, & MTBF?

While MTTA focuses mostly on prompt acknowledgement of incidents, if you understand other metrics like MTTR, MTTF, and MTBF, you will get a broader perspective on incident management and system reliability.

Working together, these metrics will help you to more fully evaluate both the system's performance and the support teams' effectiveness, offering actionable insights into areas which you can improve.

On that note, let's explore these metrics in detail and how they relate to MTTA.

What is MTTR?

MTTR, otherwise known as Mean Time to Recovery/Repair, measures how fast a system or service can recover from downtime. The measurement includes the time spent while detecting the problem, diagnosing the root cause, fixing the issue, and restoring to normal operations.

MTTR can also refer to:

- Mean time to respond: The time taken to initiate corrective measures after an incident is identified.

- Mean time to restore: The time taken to restore complete functionality after an outage.

How to calculate MTTR

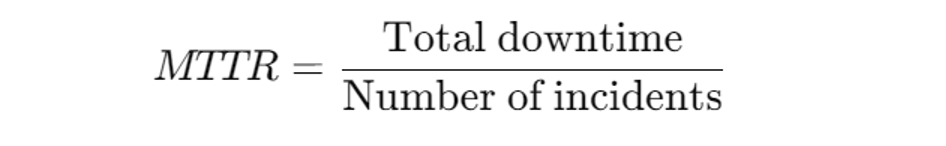

We can approximately calculate MTTR using the following formula:

However, accurate calculation requires the lifecycle of the entire incident, from detection to resolution.
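A commonly used approximation divides total downtime by the number of incidents. The sketch below follows that convention, which may differ in detail from the formula pictured above. The function name and downtime values are hypothetical.

```python
def mean_time_to_recovery(downtimes_minutes):
    """Total downtime divided by the number of incidents."""
    if not downtimes_minutes:
        raise ValueError("at least one incident is required")
    return sum(downtimes_minutes) / len(downtimes_minutes)

# Hypothetical downtime per incident, measured from detection to resolution
mttr = mean_time_to_recovery([30, 45, 15])
print(mttr)  # 30.0
```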

What is MTBF?

Mean time between failures (MTBF) helps to assess a system's reliability. It does so by calculating the average operational time between two failures. MTBF helps with:

- Predicting the availability of a system or service.

- Planning maintenance schedules.

- Improving software and hardware reliability.

How to calculate MTBF

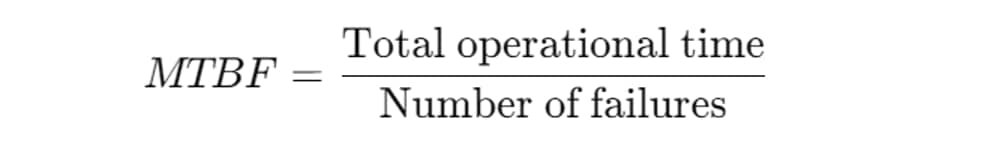

For approximately calculating MTBF, we can use the following formula:
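MTBF is commonly approximated as total operational time divided by the number of failures. The sketch below assumes that convention, with operational time taken as the observation window minus downtime. The function name and the figures are hypothetical.

```python
def mean_time_between_failures(total_hours, downtime_hours, failures):
    """Operational time (total minus downtime) divided by the number of failures."""
    if failures <= 0:
        raise ValueError("at least one failure is required")
    return (total_hours - downtime_hours) / failures

# Hypothetical 30-day window (720 hours) with 6 hours of downtime over 3 failures
mtbf = mean_time_between_failures(720, 6, 3)
print(mtbf)  # 238.0
```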

What is MTTF?

Mean time to failure (MTTF) helps to predict the expected time of a system's operation before it fails for the first time.

MTTF is mostly used for predictive maintenance scenarios that involve single-use systems that cannot be repaired, like hardware components. Failure of such systems requires replacement instead of repair.

How to calculate MTTF

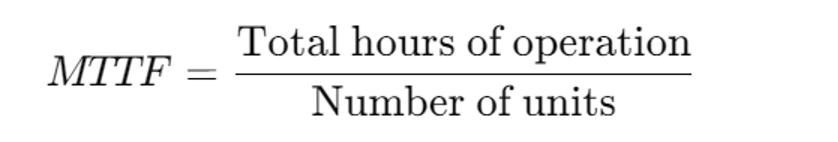

We can use the following formula for calculating MTTF:

Keep in mind that MTTF assumes a constant failure rate. This calculation might not work for systems with lifecycle stages or varying failure probabilities.
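For non-repairable units, MTTF is commonly approximated as the total operating time of all units divided by the number of units observed until failure. The sketch below assumes that convention. The function name and the lifetimes are hypothetical.

```python
def mean_time_to_failure(hours_until_failure):
    """Total operating hours across units divided by the number of units."""
    if not hours_until_failure:
        raise ValueError("at least one unit is required")
    return sum(hours_until_failure) / len(hours_until_failure)

# Hypothetical lifetimes, in hours, of four identical components
mttf = mean_time_to_failure([1200, 1500, 900, 1400])
print(mttf)  # 1250.0
```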

How does MTTA differ from MTTR, MTBF, and MTTF?

As we have previously discussed, MTTA checks the time to acknowledge an incident after receiving an alert, whereas:

- MTTR calculates the time taken to fix an issue.

- MTBF and MTTF focus more on a system's reliability by calculating the interval between failures and the expected time a system operates before a failure.

Each of the metrics addresses a unique phase in the incident management lifecycle. Their primary focus is to help a team in optimizing their maintenance and incident response strategies.

By understanding these reliability metrics, we can build a foundation for system performance evaluation. However, fostering a culture of accountability and rapid acknowledgment starts with a strong focus on MTTA.

Deliver reliable services

MTTA, along with MTTR, MTBF, and MTTF, is more than a performance indicator: together, these metrics form the foundation of an effective incident management strategy. By focusing on MTTA, you can establish a culture of responsiveness and accountability while minimizing downtime and its associated costs.

By coupling MTTA with insights from the other metrics, your team can:

- Enhance system reliability.

- Refine the process of incident resolution.

- Prioritize critical resources.

As the technical domain constantly evolves, using these metrics along with automation and other advanced tools will help your team stay efficient and up to date, your systems stay dependable, and your business stay resilient when challenges arise.

