Data Trends in 2025: 8 Trends To Follow

The data field is expanding rapidly, and many of its areas overlap with other rising fields such as cybersecurity and AI. As technology advances, the amount of data we generate and collect will continue to grow. That growth continues this year, with new developments emerging in data management and security.

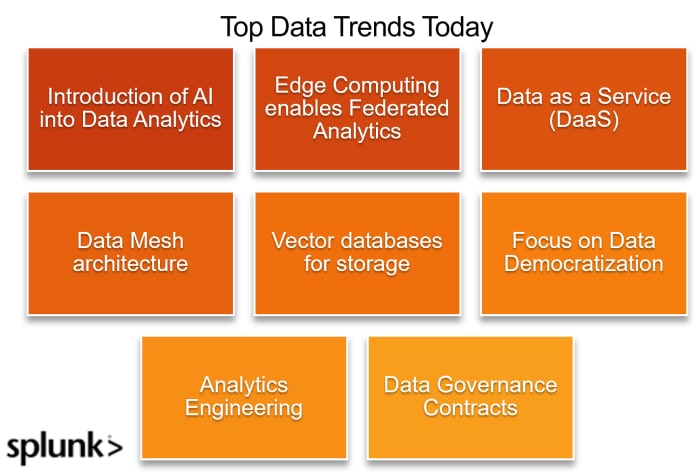

Today’s top data trends

The data field is going through some transformative changes today. Here are some of the top trends this year:

Trend 1: Introduction of AI into data analytics

With the rapid advances in artificial intelligence (AI) since ChatGPT's release, AI has begun to change the way data is analyzed and used. We can expect to see more advanced AI algorithms analyzing vast amounts of data in real time, providing valuable insights and predictions for businesses and organizations.

Some notable AI data analytics tools include Data analysis with ChatGPT by OpenAI, Duet AI code assistance in BigQuery Studio, and Julius AI.

AI assistance in data analytics takes several forms, such as:

- AI code assistance

- Data visualization suggestions

- Auto-detection of outliers in data

- Simplified web scraping

AI-assisted data analytics not only makes the process more efficient but also gives data professionals a useful support tool.
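
To make "auto-detection of outliers" concrete, here is a minimal sketch of one classic, non-AI baseline: the interquartile range (IQR) rule. The tools named above may use very different methods; the function name `iqr_outliers` and the sample numbers are illustrative assumptions, not taken from any of those products.

```python
from statistics import quantiles


def iqr_outliers(values, k=1.5):
    """Return the values that fall outside [Q1 - k*IQR, Q3 + k*IQR]."""
    # quantiles(..., n=4) returns the three quartile cut points.
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical daily order counts with one suspicious spike.
daily_orders = [102, 98, 105, 97, 110, 101, 99, 480, 103, 100]
print(iqr_outliers(daily_orders))  # [480]
```

An assistant that flags the spike automatically saves the analyst from writing this kind of check by hand for every column.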

(Related reading: real-time analytics.)

Trend 2: Edge computing enables federated analytics

Edge computing is the processing and analysis of data at or near the data’s source, instead of sending it to a centralized location. (Analyzing data where it lives, without centralizing it first, is often called federated analytics.)

This trend is set to gain even more momentum as IoT (Internet of Things) devices become smarter and capable of handling complex tasks locally. Businesses in every industry are also steadily integrating IoT devices into their IT strategies, and the global number of IoT-connected devices is expected to grow to 29 billion by 2030.

This trend arose from the surge in demand for AI that responds quickly, combined with the added security of edge computing. When data is processed locally on the end user's device, it does not have to be transmitted to an organization's private servers. This reduces latency, improves efficiency, and increases security.

Some examples of edge computing include:

- Self-driving vehicles

- Autonomous robots

- Smart watches and health trackers
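
The idea of analyzing data locally and sharing only the results can be sketched in a few lines. This is a simplified illustration, assuming hypothetical device names and heart-rate readings: each device computes a small summary, and the central service combines summaries without ever seeing raw readings. Real deployments usually add further privacy and security protections that are out of scope here.

```python
from dataclasses import dataclass


@dataclass
class LocalSummary:
    count: int
    total: float


def summarize_on_device(readings):
    """Runs on the edge device; the raw readings never leave it."""
    return LocalSummary(count=len(readings), total=sum(readings))


def combine(summaries):
    """Runs centrally; sees only per-device counts and totals."""
    count = sum(s.count for s in summaries)
    total = sum(s.total for s in summaries)
    return total / count if count else None


# Hypothetical heart-rate readings held on three separate devices.
device_readings = {
    "watch-a": [62, 64, 70],
    "watch-b": [58, 60],
    "watch-c": [75, 77, 73, 79],
}
summaries = [summarize_on_device(r) for r in device_readings.values()]
fleet_mean = combine(summaries)
print(round(fleet_mean, 2))  # 68.67
```

Sending a count and a total instead of every reading is also what cuts the network traffic and latency.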

Trend 3: Data as a Service (DaaS)

Data as a Service (DaaS) has emerged as one of the top trends in data for businesses that are looking to outsource their data management and analytics. DaaS offers companies access to reliable and quality data without investing in expensive infrastructure and resources.

DaaS providers will offer more customized solutions catering to specific business needs, such as creating a layer that can fit into the organization's existing data architecture. This will give businesses better control over the type of data they need and how it is managed and analyzed.

Some benefits of using DaaS include:

- Cost-effective

- Scalable

- Faster implementation time

Trend 4: Data mesh architecture

Traditional data architecture is often centralized, with all data being stored in one location. This can lead to challenges with scalability and flexibility, particularly as businesses expand and generate more data.

Data mesh architecture is a new approach that involves decentralizing data ownership and management while still maintaining a unified view of the data across the organization. Going forward, we can expect to see more organizations adopting this approach for their data infrastructure.

Some key components of data mesh architecture include:

- Domain-oriented decentralized data ownership

- Self-serve data platform for easy access to diverse datasets

Trend 5: Vector databases for storage

Vector databases are optimized for storing and querying vectors — sequences of numbers or embeddings. They can perform similarity searches and help with understanding complex patterns and relationships in data.

This is particularly useful for Generative AI (GenAI), where similarity searches are key to generating the right responses. The method of storing and retrieving data used in vector databases is also more efficient in GenAI applications.
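
As a rough illustration of what a similarity search does, the sketch below ranks stored vectors by cosine similarity to a query vector. The three-dimensional "embeddings" and document titles are made-up assumptions; real embeddings have hundreds or thousands of dimensions, and vector databases typically rely on approximate indexes rather than the brute-force scan shown here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, store, k=2):
    """Return the k keys whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query, vec), key) for key, vec in store.items()]
    scored.sort(reverse=True)
    return [key for _, key in scored[:k]]


# Toy embeddings (hypothetical values) keyed by document title.
store = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "return an item": [0.8, 0.2, 0.1],
}
query = [0.85, 0.15, 0.05]  # stands in for an embedded user question
print(top_k(query, store))
```

In a GenAI application, the top matches would then be passed to the model as context for its answer.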

Vector databases are expected to become even more popular as businesses seek efficient ways to store and analyze this type of data. Some popular vector database tools include:

- Chroma: An AI-native, open-source embedding database. Chroma works with collections, into which you load embeddings or documents. Queries then run against a collection.

- Pinecone: A serverless vector database made for GenAI applications.

- Weaviate: An open-source, AI-native vector database made for hybrid search queries, which combine vector search and keyword search to find similar items in your database.

(Related reading: democratized generative AI.)

Trend 6: Focus on data democratization

We can also expect a bigger push towards data democratization.

Data democratization is the concept of giving all members of an organization easy access to data and the means to understand it, regardless of their technical abilities. This allows individuals at every level to make informed decisions based on data-driven insights.

With the abundance of data available, it is becoming increasingly important for businesses to ensure that everyone in their organization has access to the right tools and resources to make sense of this data. Having a more democratized approach towards data also promotes collaboration and innovation within organizations as employees from different departments can easily share and analyze data together.

Trend 7: Analytics engineering

As the field of data engineering expands, a new role called "Analytics Engineer" has emerged. This role bridges the gap between data engineering and data science, focusing on developing and maintaining the infrastructure for data analysis.

To fill this gap, companies will look to hire analytics engineers to optimize their data pipelines and ensure efficient data analysis. This will not only help businesses become more data-driven but also free up time for data scientists to focus on more advanced tasks.

Tools that support these activities include:

- SQL

- Snowflake

- Data pipeline automation tools (e.g. Airflow, dbt)

- Data orchestration tools (e.g. Prefect)

- Looker
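
One core job of pipeline and orchestration tools is to run tasks in dependency order. The sketch below shows that idea using only Python's standard `graphlib` module (Python 3.9+). It does not use the API of Airflow, dbt, or Prefect, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can run.
dependencies = {
    "load_raw_orders": set(),
    "clean_orders": {"load_raw_orders"},
    "daily_revenue": {"clean_orders"},
    "customer_counts": {"clean_orders"},
    "refresh_dashboard": {"daily_revenue", "customer_counts"},
}


def run_order(deps):
    """Return one valid execution order for the given dependency graph."""
    return list(TopologicalSorter(deps).static_order())


order = run_order(dependencies)
print(order)
```

The two middle tasks may appear in either order because neither depends on the other, which is also why an orchestrator could run them in parallel.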

Trend 8: Data contracts

Data contracts, sometimes referred to as data governance contracts, are agreements that define the rules and expectations for how data is collected, managed, and used within an organization.

These contracts ensure consistency and compliance in handling sensitive data while promoting transparency and accountability.

We expect more companies to implement data contracts to better manage their data and protect customer privacy. This will also become increasingly important with the rise of strict data protection regulations such as GDPR and CCPA. Some key elements of a data contract include:

- Data types

- Data format

- Data structure

- Data encoding

- Data quality

- Data documentation

- Data constraints

Having all these components helps ensure that a standardized structure and format are used for data exchange. This reduces the chance of errors and supports compliance with data management regulations.
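
To show how a few of these elements (types, constraints, documentation) might be enforced in practice, here is a minimal sketch of a contract check. The names `FieldSpec`, `validate`, and `ORDER_CONTRACT`, along with the order fields themselves, are hypothetical; real data contracts are often written in a schema language and enforced by dedicated tooling.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class FieldSpec:
    name: str
    dtype: type
    required: bool = True
    constraint: Optional[Callable[[Any], bool]] = None
    description: str = ""


def validate(record, contract):
    """Return a list of contract violations; an empty list means the record passes."""
    errors = []
    for spec in contract:
        if spec.name not in record:
            if spec.required:
                errors.append(f"{spec.name}: missing")
            continue
        value = record[spec.name]
        if not isinstance(value, spec.dtype):
            errors.append(f"{spec.name}: expected {spec.dtype.__name__}")
        elif spec.constraint and not spec.constraint(value):
            errors.append(f"{spec.name}: constraint failed")
    return errors


# Hypothetical contract for an order record.
ORDER_CONTRACT = [
    FieldSpec("order_id", str, description="Unique order identifier"),
    FieldSpec("amount", float, constraint=lambda v: v >= 0, description="Order total"),
    FieldSpec("currency", str, constraint=lambda v: len(v) == 3, description="Three-letter code"),
    FieldSpec("coupon", str, required=False, description="Optional coupon code"),
]

good = {"order_id": "A-1", "amount": 19.99, "currency": "USD"}
bad = {"order_id": "A-2", "amount": -5.0, "currency": "US dollars"}
print(validate(good, ORDER_CONTRACT))  # []
print(validate(bad, ORDER_CONTRACT))   # ['amount: constraint failed', 'currency: constraint failed']
```

Running a check like this at the point where data is handed from producer to consumer catches violations before they spread downstream.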

Final thoughts

To remain competitive in the AI boom that began in 2023, companies will need a robust data infrastructure to support the new AI products being launched. This need will likely extend well beyond this year and grow as AI matures to support more flexible applications.

These trends in data are moving quickly to meet a pressing need: creating useful and highly reliable AI. Data continues to be a strong focus among companies, both today and in the coming years.

See an error or have a suggestion? Please let us know by emailing splunkblogs@cisco.com.

This posting does not necessarily represent Splunk's position, strategies or opinion.
