Data Modeling: An Introduction

Data modeling has gained significant importance in recent years due to the increasing amount of data being generated by businesses, organizations, and individuals. With advances in technology, the volume, variety, and velocity of data have grown rapidly. As a result, traditional methods of storing and managing data are often no longer efficient or effective.

Proper data modeling helps solve this issue. It provides a standardized approach to organizing and structuring data. It helps identify the relationships between different data elements, making it easier to query and analyze the data for insights and decision-making.

In this guide, we will cover the basics of data modeling, the steps involved in data modeling, and its related techniques and tools.

What is Data Modeling?

Data modeling is the process of creating a conceptual representation of data and its relationships. It is an essential step in organizing and understanding complex datasets, making it easier to use and interpret them for various purposes.

Basic concepts

At its core, data modeling rests on several key components. Let's look at them below.

Entities

Entities are the distinct objects, concepts, or events that need to be represented in a database. They can be:

- Tangible things like people, products, or places

- Abstract concepts like orders, transactions, or memberships

Attributes

Entities have properties known as attributes. Attributes describe the characteristics of an entity and help distinguish one entity from another.

For example, a "person" entity may have attributes such as name, age, gender, and address. Attributes can be classified as:

- Key attributes, which uniquely identify an entity

- Descriptive attributes, which provide additional information about an entity

- Non-descriptive attributes, which are used for internal purposes
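To make the distinction concrete, here is a minimal Python sketch of the "person" entity. The `person_id` field and the sample values are assumptions added for illustration; only name, age, and address come from the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    """A 'person' entity; person_id is a hypothetical key attribute."""
    person_id: int   # key attribute: uniquely identifies each person
    name: str        # descriptive attribute
    age: int         # descriptive attribute
    address: str     # descriptive attribute


alice = Person(person_id=1, name="Alice", age=34, address="12 Elm St")
namesake = Person(person_id=2, name="Alice", age=34, address="12 Elm St")

# The descriptive attributes match, but the key attribute tells the two apart.
same_person = alice.person_id == namesake.person_id
```

Two people can share every descriptive attribute, which is why the model relies on a key attribute rather than on name or address to identify an entity.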

Relationships

Relationships define how entities are connected or related to each other. A relationship between two entities can be:

- One-to-one (e.g., a person has only one social security number)

- One-to-many (e.g., a person can have multiple bank accounts)

- Many-to-many (e.g., a person can belong to several project teams, and each team can include several people)
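The three relationship types can be sketched as tables using Python's built-in `sqlite3` module. All table and column names below are illustrative assumptions, and this is only one common way to express each relationship.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK checks off by default
conn.executescript("""
CREATE TABLE person (
    person_id INTEGER PRIMARY KEY,
    name      TEXT NOT NULL
);
-- One-to-one: UNIQUE on person_id allows at most one SSN row per person.
CREATE TABLE ssn (
    ssn_value TEXT PRIMARY KEY,
    person_id INTEGER NOT NULL UNIQUE REFERENCES person(person_id)
);
-- One-to-many: several accounts may point at the same person.
CREATE TABLE bank_account (
    account_id INTEGER PRIMARY KEY,
    person_id  INTEGER NOT NULL REFERENCES person(person_id)
);
-- Many-to-many: a junction table pairs people with teams.
CREATE TABLE team (
    team_id INTEGER PRIMARY KEY,
    name    TEXT NOT NULL
);
CREATE TABLE team_member (
    person_id INTEGER NOT NULL REFERENCES person(person_id),
    team_id   INTEGER NOT NULL REFERENCES team(team_id),
    PRIMARY KEY (person_id, team_id)
);
""")

conn.execute("INSERT INTO person VALUES (1, 'Ana'), (2, 'Ben')")
conn.execute("INSERT INTO ssn VALUES ('000-00-0001', 1)")
conn.executemany("INSERT INTO bank_account VALUES (?, ?)", [(10, 1), (11, 1)])
conn.execute("INSERT INTO team VALUES (100, 'Core'), (101, 'Data')")
conn.executemany(
    "INSERT INTO team_member VALUES (?, ?)", [(1, 100), (1, 101), (2, 100)]
)

# A second SSN for the same person violates the one-to-one rule.
try:
    conn.execute("INSERT INTO ssn VALUES ('000-00-0002', 1)")
    second_ssn_allowed = True
except sqlite3.IntegrityError:
    second_ssn_allowed = False


def count(sql):
    """Run a COUNT(*) query and return the single number."""
    return conn.execute(sql).fetchone()[0]


accounts_for_ana = count("SELECT COUNT(*) FROM bank_account WHERE person_id = 1")
teams_for_ana = count("SELECT COUNT(*) FROM team_member WHERE person_id = 1")
members_of_core = count("SELECT COUNT(*) FROM team_member WHERE team_id = 100")
```

The junction table is what makes the last relationship many-to-many: one person appears on several teams, and one team lists several people.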

Keys

Keys are attributes, or combinations of attributes, used to identify entities and link them within a database. They help distinguish one record from another, making it easier to retrieve and manipulate data.

Keys can come in two main forms: primary keys and foreign keys.

- A primary key is a unique identifier for an entity within its own table.

- A foreign key is a column (or set of columns) that references the primary key of another table, linking the two entities.
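As a rough illustration of how keys protect data, the sketch below uses Python's built-in `sqlite3` module. The `customer` and `purchase` tables and the `is_rejected` helper are hypothetical names chosen for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK checks off by default
conn.execute(
    "CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
)
conn.execute("""
CREATE TABLE purchase (
    purchase_id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id)
)
""")
conn.execute("INSERT INTO customer VALUES (1, 'Ana')")
conn.execute("INSERT INTO purchase VALUES (500, 1)")


def is_rejected(sql):
    """Return True if the statement violates a key constraint."""
    try:
        conn.execute(sql)
        return False
    except sqlite3.IntegrityError:
        return True


# Reusing primary key 1 would make two customers indistinguishable.
duplicate_pk_rejected = is_rejected("INSERT INTO customer VALUES (1, 'Ben')")
# Customer 99 does not exist, so this purchase would point at nothing.
orphan_fk_rejected = is_rejected("INSERT INTO purchase VALUES (501, 99)")
```

Both inserts fail: the primary key keeps each customer unique, and the foreign key keeps every purchase attached to a real customer.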

However, not all components are present in every implementation. This differs across the types of data models used.

Types of data models

To get a clearer picture of data models, you'll first need to understand the main types used in many business intelligence applications.

Conceptual data models

Conceptual data models serve as a high-level blueprint, illustrating how data entities relate to each other. They often capture business requirements in an easily interpretable format using a clear visualization. These models emphasize what data is pertinent, focusing on the scope rather than the technical specifics.

Conceptual data models offer a strategic advantage: they enable cross-functional teams to collaborate effectively. This collaboration ensures that the business logic aligns seamlessly with the database design. Therefore, they tend to facilitate clarity and foster innovation.

Tools and methods:

- Entity-relationship diagrams (ERD) using Lucidchart

- Unified Modeling Language (UML) class diagrams

Logical data models

Logical data models are more detailed than conceptual models. They outline the data structures and relationships involved in a specific business domain, including entities, attributes, and their interconnections. Logical data models serve as the reference point for building physical data models.

Tools and methods:

- Enhanced ERDs that also represent subtypes and supertypes

- Data flow diagrams (DFD) using Microsoft Visio

Physical data models

Physical data models offer a granular view of the data elements that are required to address specific business needs. They define how the database will be structured physically, including details such as storage types, indexing options, and constraints. Physical data models also provide a basis for generating SQL code to create databases.

Here are some components of physical data models and their functions:

- Tables and Columns: Define data structures in the database.

- Indexes: Enhance query performance.

- Constraints: Enforce data integrity.

- Partitioning: Improve database management and performance.

- Storage: Determine data storage options and methods.
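A few of these components can be sketched with Python's built-in `sqlite3` module. The `product` table and index name are assumptions made for illustration, and SQLite is used only because it needs no setup; partitioning and storage options are engine-specific and are not shown here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Table and columns: the data structure itself.
CREATE TABLE product (
    product_id INTEGER PRIMARY KEY,
    name       TEXT NOT NULL,
    category   TEXT NOT NULL,
    price      REAL NOT NULL CHECK (price >= 0)  -- constraint: no negative prices
);
-- Index: speeds up lookups that filter on category.
CREATE INDEX idx_product_category ON product (category);
""")
conn.execute("INSERT INTO product VALUES (1, 'Hammer', 'tools', 12.5)")

# The CHECK constraint rejects a row that would break data integrity.
try:
    conn.execute("INSERT INTO product VALUES (2, 'Broken', 'tools', -1)")
    negative_price_rejected = False
except sqlite3.IntegrityError:
    negative_price_rejected = True

# Ask SQLite how it plans to run a query that filters on the indexed column.
plan_rows = conn.execute(
    "EXPLAIN QUERY PLAN SELECT name FROM product WHERE category = 'tools'"
).fetchall()
plan_text = " ".join(str(row[-1]) for row in plan_rows)
uses_index = "idx_product_category" in plan_text
```

The query plan names the index, which suggests the engine can find matching rows without scanning the whole table.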

Tools and methods:

- Oracle Designer

- DbSchema

- MySQL Workbench

- IBM InfoSphere Data Architect

Steps in data modeling

Data modeling is an iterative process: the steps below are repeated and refined until the model accurately represents the data.

- Identify business requirements: Identify the business needs and objectives that the data model will address. This also involves a data requirements analysis by a data engineer or data modeler.

- Gather data sources: Identify and gather all the relevant data sources needed, including databases, spreadsheets, and flat files.

- Analyze data structure: Analyze the structure and content of the data sources to identify any potential issues or gaps.

- Create conceptual model: Use conceptual models to define entities, attributes, and relationships between them based on the business requirements.

- Create logical model: Create a more detailed logical model that incorporates data types, lengths, constraints, and other relevant elements.

- Create physical model: Translate the logical model into a physical one by defining database-specific details such as tables, columns, indexes, etc.

- Validate and refine models: Validate the models against the business needs and refine them to address any identified issues or improvements.

- Implement database design: Use the final physical model to implement the database design and create associated objects like tables, views, and procedures. This involves translating the designed structures into actual database schemas, configuring connections, and deploying the data infrastructure.

- Test and optimize: Test the database design to ensure it meets business requirements, and optimize for performance. This includes testing edge cases and anomalies.

- Maintain and update models: Data models should be regularly maintained and updated as business needs evolve or new data sources are added.
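The step from a logical model to a physical one can be sketched in a few lines of Python. Everything here is a simplified assumption for illustration: the `logical_model` dictionary, the `TYPE_MAP` type mapping, and the `to_ddl` helper are hypothetical, and dedicated modeling tools handle far more detail.

```python
import sqlite3

# Logical model: entity name -> (attribute, logical type, options).
logical_model = {
    "customer": [
        ("customer_id", "integer", {"key": True}),
        ("email", "string", {"required": True}),
    ],
}

# Assumed mapping from logical types to SQLite column types.
TYPE_MAP = {"integer": "INTEGER", "string": "TEXT", "decimal": "REAL"}


def to_ddl(entity, attributes):
    """Translate one logical entity into a CREATE TABLE statement."""
    columns = []
    for name, logical_type, options in attributes:
        parts = [name, TYPE_MAP[logical_type]]
        if options.get("key"):
            parts.append("PRIMARY KEY")
        elif options.get("required"):
            parts.append("NOT NULL")
        columns.append(" ".join(parts))
    return f"CREATE TABLE {entity} ({', '.join(columns)})"


ddl = to_ddl("customer", logical_model["customer"])

# Implement the physical model and read the resulting columns back.
conn = sqlite3.connect(":memory:")
conn.execute(ddl)
column_names = [row[1] for row in conn.execute("PRAGMA table_info(customer)")]
```

Keeping the logical description separate from the generated SQL means the same model could be translated for a different database by swapping the type mapping.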

Data modeling techniques

Data modeling techniques are structured methodologies used to model data and create a database design. Here are some commonly used techniques.

Entity-relationship modeling

Entity-Relationship (ER) modeling is used to represent the relationships between data entities in a clear and concise manner. It involves identifying entities, attributes, and relationships between them.

There are two main components of ER modeling:

- Entities: Any real-world object, concept or event that can be identified uniquely is an entity. Entities can have attributes that describe their characteristics.

- Relationships: Relationships represent how two or more entities are related to each other and can be one-to-one, one-to-many, or many-to-many.

Dimensional modeling

Dimensional modeling is used mainly in data warehousing projects to improve query performance for analytical purposes. It involves organizing data into two types of tables: fact and dimension tables.

- Fact tables: Contain numerical measures or facts that can be aggregated, such as sales amount or customer count. They usually have foreign keys that connect them to the dimension tables.

- Dimension tables: Contain descriptive attributes related to the fact table, such as product category, customer demographics, or time. Dimension tables are used for filtering and grouping data in queries.

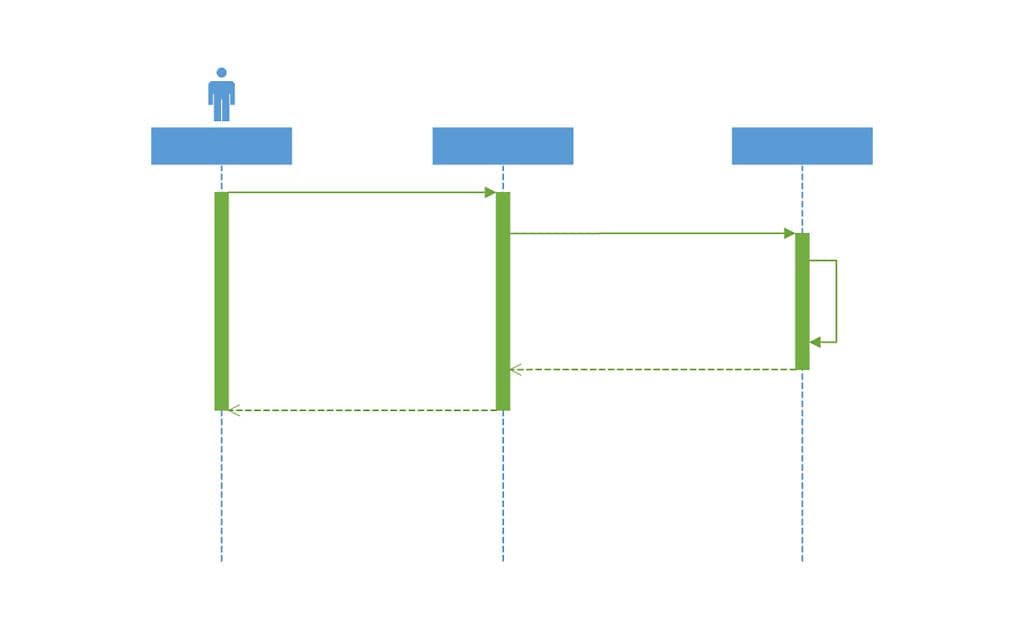
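Here is a minimal star schema sketched with Python's built-in `sqlite3` module. The table names, columns, and sample rows are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables: descriptive attributes used to filter and group.
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY,
    category    TEXT NOT NULL
);
CREATE TABLE dim_date (
    date_key INTEGER PRIMARY KEY,
    year     INTEGER NOT NULL
);
-- Fact table: numeric measures plus foreign keys to each dimension.
CREATE TABLE fact_sales (
    product_key  INTEGER NOT NULL REFERENCES dim_product(product_key),
    date_key     INTEGER NOT NULL REFERENCES dim_date(date_key),
    sales_amount REAL NOT NULL
);
""")
conn.executemany(
    "INSERT INTO dim_product VALUES (?, ?)", [(1, "books"), (2, "games")]
)
conn.executemany(
    "INSERT INTO dim_date VALUES (?, ?)", [(20230101, 2023), (20240101, 2024)]
)
conn.executemany(
    "INSERT INTO fact_sales VALUES (?, ?, ?)",
    [(1, 20230101, 10.0), (1, 20240101, 15.0),
     (2, 20240101, 40.0), (2, 20240101, 5.0)],
)

# Aggregate the fact table, filtering by one dimension and grouping by another.
sales_2024_by_category = conn.execute("""
    SELECT p.category, SUM(f.sales_amount)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    JOIN dim_date AS d ON d.date_key = f.date_key
    WHERE d.year = 2024
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
```

The query filters on the date dimension, groups by the product dimension, and sums the measure in the fact table, which is the typical shape of an analytical query against a dimensional model.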

Unified Modeling Language (UML)

UML is another widely used method for data modeling, often favored for its flexibility and scalability. It is a visual modeling language that offers multiple diagram types, including class diagrams, object diagrams, and use case diagrams for visualizing different aspects of the data.

Here's what a basic UML diagram would look like:

UML uses a standardized notation for depicting entities, attributes, and relationships. It also offers constructs such as generalization and aggregation to represent complex data structures.

Additionally, UML can be integrated with other software engineering processes. Programmers and software engineers can layer object-oriented class models onto a relational database with ease. This makes it an ideal choice for large-scale enterprise-level data modeling projects.
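Generalization and aggregation map naturally onto object-oriented code. The sketch below shows one possible rendering in Python; the `Person`, `Employee`, and `Team` classes are hypothetical examples rather than part of any UML standard.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Person:
    name: str


@dataclass
class Employee(Person):
    """Generalization: an Employee is a specialized Person."""
    employee_id: int


@dataclass
class Team:
    """Aggregation: a Team groups members that exist independently of it."""
    name: str
    members: List[Person] = field(default_factory=list)


ana = Employee(name="Ana", employee_id=7)
team = Team(name="Core", members=[ana])
```

In a class diagram, `Employee` would point to `Person` with a generalization arrow, and `Team` would connect to `Person` with an aggregation link.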

Data modeling tools

Dedicated tools make data modeling more convenient and help ensure accuracy and efficiency. Here are some popular data modeling tools:

ER/Studio Data Architect

ER/Studio Data Architect is a popular data modeling tool used by organizations to design and manage their databases. It supports various database platforms, including SQL Server, Oracle, and MySQL, and offers a user-friendly interface for creating ER diagrams, logical models, and physical models.

Some key features of ER/Studio Data Architect include:

- Visual data modeling with data observability/lineage showing source/target mapping

- Forward and reverse engineering

- Metadata management

DbSchema

DbSchema is a visual database design tool that supports multiple databases, including SQL Server, MySQL, PostgreSQL, and more. It offers a drag-and-drop interface for creating ER diagrams, generating SQL code, and managing the entire database lifecycle.

Some key features of DbSchema include:

- Using reverse engineering to convert schemas into visual diagrams

- Query builder for building complex queries without writing code

- Built-in SQL editor for advanced users

MySQL Workbench

MySQL Workbench is an open-source, cross-platform data modeling tool designed specifically for MySQL databases. It offers a comprehensive set of features for visual data modeling and database design, including:

- ER diagramming with point-and-click functionality

- Database performance analysis tools

- SQL code editor with syntax highlighting and auto-completion

Lucidchart

Lucidchart is a cloud-based platform that offers intuitive drag-and-drop tools for creating various types of diagrams, including ER diagrams for data modeling. It also supports collaborative editing and integration with popular database platforms.

Some key features of Lucidchart for data modeling include:

- A user-friendly interface with a variety of templates and shapes

- Real-time collaboration with team members

- Integration with other tools such as Google Drive, Jira, and Microsoft Teams

- Revision history to track changes made to diagrams

Oracle SQL Developer Data Modeler

Oracle SQL Developer Data Modeler is a free data modeling tool provided by Oracle. It allows users to create logical, physical, and multidimensional models for Oracle databases. It also supports reverse engineering, allowing users to import existing databases and generate visual representations of the data structure.

Some key features of Oracle SQL Developer Data Modeler include:

- Support for various database platforms such as Oracle, SQL Server, and MySQL

- Ability to automatically generate DDL scripts for physical models

- Collaboration capabilities for team projects

Final thoughts

Data modeling plays a crucial role in designing efficient and effective databases that support business processes and downstream decision-making. To achieve that, data modelers work through conceptual, logical, and physical models using the techniques and tools covered in this guide.

As database technology continues to advance, businesses should consider incorporating new capabilities into their data modeling strategies to improve downstream data analytics and reporting.
