Data Lakes: What Are They & Why Does Your Business Need One?

The sheer amount of data businesses generate today is staggering.

Yet, without an effective strategy, most of this information remains untapped, with data left in silos.

Enter the data lake — a powerful and flexible solution for modern data management that can transform raw information into valuable assets.

If your organization is seeking better ways to handle and analyze data, this guide is for you. We’ll break down what a data lake is, how it works, and why businesses in diverse industries are adopting it.

Introduction to data lakes

Let's begin with some background on what data lakes are.

What is a data lake?

A data lake is a centralized repository designed to store vast amounts of structured, semi-structured, and unstructured data. Unlike traditional systems such as data warehouses, a data lake doesn’t require you to define a schema before storing the data. Structure is applied later, when the data is read (an approach often called schema-on-read).
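To make the idea of storing data without a predefined schema concrete, here is a minimal Python sketch of schema-on-read: raw records are stored as-is, and each analysis applies its own schema when it reads them. The list of JSON strings stands in for files in object storage, and the record fields and the `read_with_schema` helper are hypothetical, chosen only for illustration.

```python
import json

# Hypothetical raw events, landed in the lake exactly as they arrived.
# A list of JSON strings stands in for files in object storage.
raw_store = [
    json.dumps({"user": "a1", "action": "click", "ts": "2024-01-05T10:00:00"}),
    json.dumps({"user": "b2", "action": "purchase", "amount": "19.99"}),
    json.dumps({"device_id": 7, "temp_c": 21.5}),  # a completely different shape
]


def read_with_schema(lines, fields, casts=None):
    """Apply a schema at read time (schema-on-read).

    `fields` picks the columns one analysis cares about; `casts` optionally
    converts values to the types that analysis needs. Records containing none
    of the requested fields are skipped; missing fields become None.
    """
    casts = casts or {}
    for line in lines:
        record = json.loads(line)
        if not any(f in record for f in fields):
            continue
        row = {}
        for f in fields:
            value = record.get(f)
            if value is not None and f in casts:
                value = casts[f](value)
            row[f] = value
        yield row


# Two different views over the same raw data, each with its own schema.
purchases = list(read_with_schema(raw_store, ["user", "amount"], {"amount": float}))
sensors = list(read_with_schema(raw_store, ["device_id", "temp_c"]))
```

Nothing was validated when the records were written; the purchase analysis and the sensor analysis each decide how to interpret the same raw data.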

This makes it an ideal solution for organizations handling diverse types of raw data. Think of a data lake as a vast, open body of water where data streams flow in from multiple sources. This includes everything from databases and IoT devices to social media platforms and application logs.

Components of a data lake

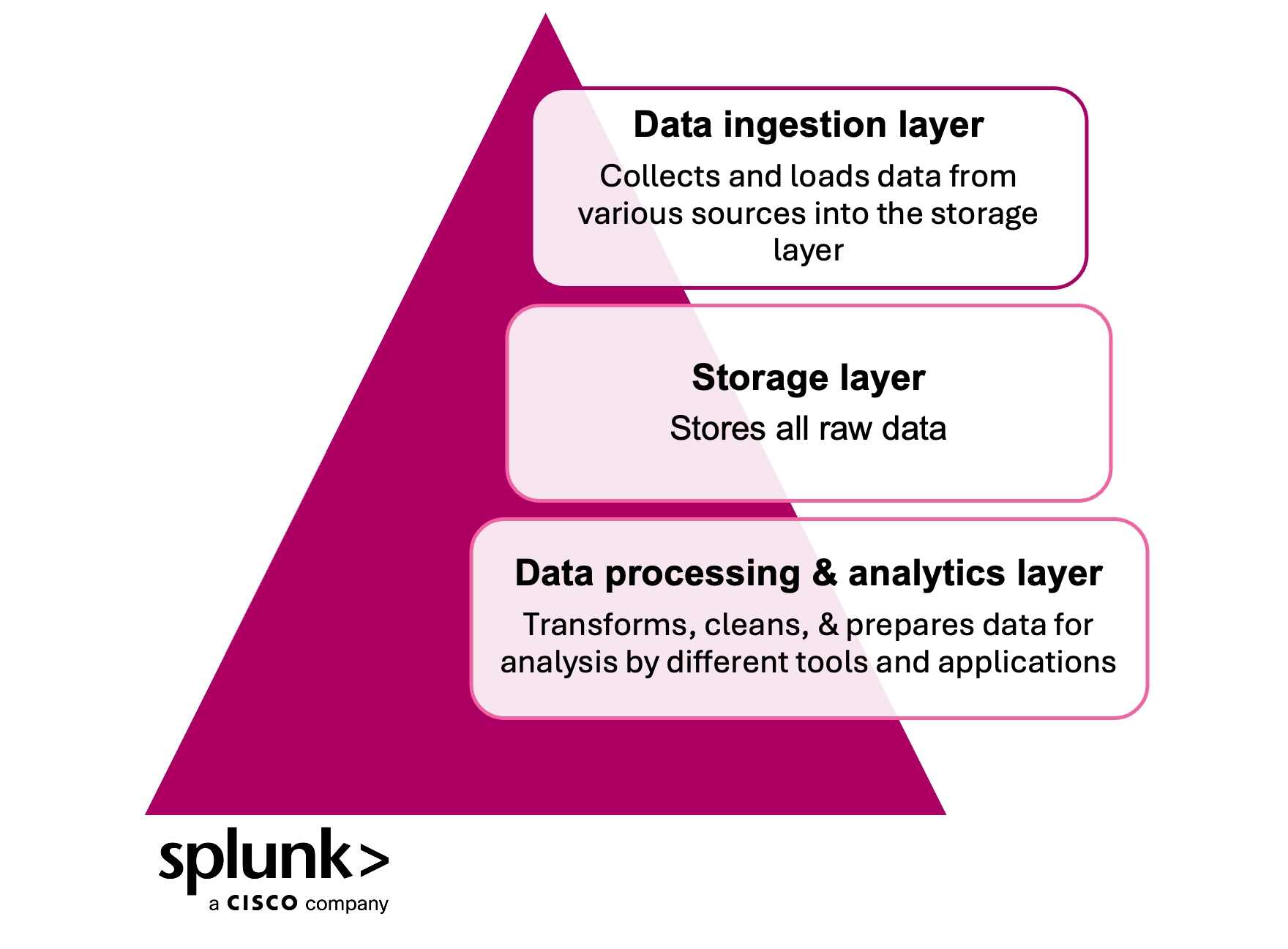

A data lake typically consists of three main components:

- Data ingestion layer: This component collects and loads data from various sources into the storage layer.

- Storage layer: This is where all the raw data is stored.

- Data processing and analytics layer: This is where the data is transformed, cleansed, and prepared for analysis by different tools and applications.

Other supporting components may include metadata management tools, security frameworks, and governance policies.
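The three layers can be sketched as a toy model in Python. This is a minimal illustration under simplifying assumptions: a dictionary stands in for the storage layer (in practice an object store such as AWS S3 or ADLS), and the `ToyDataLake` class, its methods, and the `raw/<source>/<name>` key layout are hypothetical.

```python
import json
from collections import defaultdict


class ToyDataLake:
    """Hypothetical in-memory model of a data lake's three layers."""

    def __init__(self):
        # Storage layer: object key -> raw bytes, kept exactly as received.
        self.objects = {}

    # Ingestion layer: accept data from any source and land it unchanged.
    def ingest(self, source, name, payload):
        key = f"raw/{source}/{name}"
        self.objects[key] = payload
        return key

    # Processing layer: read raw objects, cleanse them, and aggregate.
    def count_events_by_type(self, prefix):
        counts = defaultdict(int)
        for key, payload in self.objects.items():
            if not key.startswith(prefix):
                continue
            for line in payload.decode("utf-8").splitlines():
                line = line.strip()
                if not line:
                    continue  # cleansing step: skip blank lines
                event = json.loads(line)
                counts[event.get("type", "unknown")] += 1
        return dict(counts)


lake = ToyDataLake()
lake.ingest("app_logs", "2024-01-05.jsonl",
            b'{"type": "login"}\n\n{"type": "error"}\n{"type": "login"}\n')
lake.ingest("iot", "sensor-7.csv", b"ts,temp\n1,21.5\n")  # stored, not parsed below
summary = lake.count_events_by_type("raw/app_logs/")
```

Note that the CSV file is stored even though no processing job reads it yet; ingestion and processing are decoupled, which is what lets new analyses reuse data that was collected earlier.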

How is a data lake different from a data warehouse and a data lakehouse?

Understanding the distinction between a data lake and a data warehouse is critical, as the two often complement one another rather than replace each other.

- Data lakes store raw data in its native form without imposing a fixed structure. They are highly scalable, cost-effective, and support advanced analytics methods like machine learning.

- Data warehouses are structured environments that process data to fit a defined schema. They are optimized for business intelligence (BI) tools and analytical queries, offering high performance but limited flexibility.

As a result, data lakes can hold unstructured data such as images, videos, and text, while data warehouses are better suited to structured data such as numbers and dates.
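The difference in how each system accepts data can be sketched in a few lines of Python. The `WAREHOUSE_SCHEMA` table definition and both load functions are hypothetical; real warehouses enforce schemas through table definitions and typed columns, not application code like this.

```python
from datetime import date

# Hypothetical fixed table definition, declared up front as in a warehouse.
WAREHOUSE_SCHEMA = {"order_id": int, "order_date": date, "total": float}


def load_into_warehouse(table, row):
    """Schema-on-write: reject rows that do not match the declared columns."""
    if set(row) != set(WAREHOUSE_SCHEMA):
        raise ValueError(f"columns {sorted(row)} do not match schema")
    for column, expected_type in WAREHOUSE_SCHEMA.items():
        if not isinstance(row[column], expected_type):
            raise TypeError(f"{column} must be {expected_type.__name__}")
    table.append(row)


def load_into_lake(store, obj):
    """Schema-free landing: accept anything, including binary blobs."""
    store.append(obj)


warehouse_table, lake_store = [], []
order = {"order_id": 1, "order_date": date(2024, 1, 5), "total": 42.0}
image_bytes = b"\x89PNG..."  # placeholder bytes standing in for an image file

load_into_warehouse(warehouse_table, order)
load_into_lake(lake_store, order)
load_into_lake(lake_store, image_bytes)

# A record with no matching columns is rejected by the warehouse.
try:
    load_into_warehouse(warehouse_table, {"caption": "product photo"})
    rejected = False
except ValueError:
    rejected = True
```

The strict check is what gives the warehouse predictable, fast queries; the permissive landing is what lets the lake keep images and other data that has no tabular shape.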

There’s also the data lakehouse, a modern hybrid architecture that combines the scalability and flexibility of data lakes with the structured data management and performance of data warehouses. It supports both advanced analytics and business intelligence from a unified platform.

These photos illustrate the fundamentals: a data lake doesn’t have an imposed structure, just like this natural lake, whereas data warehouses organize the data for particular uses.

Benefits of implementing a data lake

Adopting a data lake provides several major advantages for businesses:

Seamless data accessibility

Data lakes bring information from frequently siloed sources into one central location. This accessibility allows analysts, data scientists, and executives to draw from the same well of information, reducing friction in data-driven decision-making.

Flexibility with all data types

Unlike data warehouses, which work best with structured data, data lakes can house any type of data: text, images, videos, streaming data, you name it. Organizations can innovate without being locked in by rigid schemas.

Scalability for growth

Modern solutions like AWS S3 and Azure Data Lake Storage make it easy to scale to petabytes of data, with pay-as-you-go pricing instead of up-front capacity planning. The elastic nature of data lakes lets your storage grow along with your business.

Enabling advanced analytics

For organizations exploring machine learning, big data analytics, or predictive modeling, data lakes are a natural foundation.

Data lakes give data teams direct access to raw data, enabling advanced techniques that are difficult or impossible within traditional BI systems. For example, Amazon Security Lake and Splunk together deliver an integrated, centralized data lake for advanced security analytics.

Cost efficiency

Storage solutions for data lakes are notably more affordable than high-performance databases or warehouses. Moreover, with the reduced need for extensive ETL (extract, transform, load) preparation, data lakes can provide significant cost savings.

Data lake tools and platforms

To implement a data lake, you'll need a platform to build it on. Several data lake solutions exist, catering to various use cases and budgets.

Some popular options include:

- AWS S3: Amazon’s cloud-based storage service is highly scalable, affordable, and easy to integrate with other AWS services.

- Azure Data Lake Storage (ADLS): Built on top of Azure Blob Storage, ADLS enables storing petabytes of unstructured data in the cloud.

- Google Cloud Platform: Google offers several data lake solutions, including Google Cloud Storage and BigQuery, tailored to different business needs.

- Hadoop: An open-source framework for distributed storage (HDFS) and big data processing. Hadoop is used in some of the world’s largest data lakes.

- Snowflake: An all-in-one data platform that combines the capabilities of a traditional data warehouse and data lake.

Is Splunk a data lake?

Splunk is not a traditional data lake but offers some similar capabilities. It’s primarily an analytics and observability platform used to collect, index, and analyze machine-generated data like logs and metrics.

While the Splunk data platform can store large volumes of semi-structured data, its strength lies in real-time search, alerting, and monitoring — especially for IT operations, security, and DevOps.

Splunk can act like a data lake in specific scenarios, such as with Amazon Security Lake or Splunk Data Fabric Search, where it centralizes and analyzes raw data. However, it’s not designed to be a full-scale, schema-flexible data lake for broad enterprise use.

(Explore the Splunk unified platform for data management, observability, and cybersecurity.)

Real-world data lake use cases

Many organizations across industries rely on data lakes to power innovation. Here are a few examples:

Customer personalization in retail: Retail chains use data lakes to integrate purchase history, social media activity, and website behavior. This unified data can enable highly tailored shopping experiences for individual customers.

Healthcare data integration: Hospitals and laboratories store medical records, imaging data, and patient telemetry in data lakes. This enables streamlined research, predictive diagnostics, and improved patient care.

IoT and smart device analytics: Manufacturing firms leverage data lakes to analyze high-velocity IoT data from connected devices, helping them predict maintenance needs, optimize workflows, and minimize downtime.

Risk management in finance: Banks use data lakes to model customer behavior and predict risks such as credit defaults or fraud. By combining structured financial records with unstructured web activity, banks can improve their overall risk management strategies.

(Related reading: financial crime risk management.)

Building and maintaining data lakes: best practices

To maximize the utility of your data lake, it’s essential to follow these step-by-step best practices:

1. Start with a clear data governance strategy

Without proper governance, your data lake risks becoming a “data swamp,” where information is disorganized and difficult to extract. Establish rules for data organization, usage, and access early in the process.

Effective data governance isn’t just about organization; it’s also essential for meeting regulatory compliance requirements such as GDPR, HIPAA, or SOC 2. Ensuring proper access controls, audit trails, and data lineage tracking helps businesses avoid legal pitfalls and maintain customer trust.
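Access controls and audit trails can be illustrated with a minimal role-based check that records every access attempt. This is a hypothetical sketch: the roles, the zone names (`raw`, `curated`), and the policy table are assumptions, and production lakes typically delegate this to the platform's own access-control and logging services.

```python
from datetime import datetime, timezone

# Hypothetical policy: which roles may read which top-level zones of the lake.
POLICY = {
    "data_engineer": {"raw", "curated"},
    "analyst": {"curated"},
}

audit_log = []  # append-only record of every access decision


def can_read(user, role, path):
    """Check a read request against POLICY and append an audit record."""
    zone = path.split("/", 1)[0]  # "curated/sales/2024.parquet" -> "curated"
    allowed = zone in POLICY.get(role, set())  # unknown roles get no access
    audit_log.append({
        "when": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "path": path,
        "allowed": allowed,
    })
    return allowed


granted = can_read("maria", "analyst", "curated/sales/2024.parquet")
denied = can_read("maria", "analyst", "raw/crm/export.json")  # raw zone is restricted
```

Logging denied requests as well as granted ones is deliberate: auditors usually want to see attempted access, not only successful reads.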

2. Prioritize security

Ensure your data lake’s security by implementing robust user authentication, encryption, and compliance monitoring. Technologies like AWS Lake Formation can simplify this process for organizations.

3. Maintain metadata for faster retrieval

Include metadata tagging to help users search, locate, and retrieve data efficiently. Tools like Apache Atlas can assist in managing comprehensive metadata across your lake.
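The sketch below is not Apache Atlas's API (Atlas has its own type system and REST interface). It is a hypothetical in-memory catalog showing why tags speed up discovery: an inverted index maps each tag to dataset paths, so a search does not have to scan every entry. The `DatasetEntry` fields, paths, and tags are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    """Hypothetical catalog record describing one dataset in the lake."""
    path: str
    owner: str
    fmt: str
    tags: set = field(default_factory=set)


class MetadataCatalog:
    def __init__(self):
        self.entries = []
        self.tag_index = {}  # tag -> set of dataset paths (inverted index)

    def register(self, entry):
        self.entries.append(entry)
        for tag in entry.tags:
            self.tag_index.setdefault(tag, set()).add(entry.path)

    def find(self, *tags):
        """Return paths of datasets carrying all of the given tags."""
        if not tags:
            return set()
        result = set(self.tag_index.get(tags[0], set()))
        for tag in tags[1:]:
            result &= self.tag_index.get(tag, set())
        return result


catalog = MetadataCatalog()
catalog.register(DatasetEntry("raw/web/clicks/", "web-team", "json", {"clickstream", "pii"}))
catalog.register(DatasetEntry("curated/sales/daily/", "finance", "parquet", {"sales", "daily"}))
catalog.register(DatasetEntry("curated/web/sessions/", "web-team", "parquet", {"clickstream", "daily"}))
```

For example, `catalog.find("clickstream", "daily")` narrows the lake to the curated sessions dataset, and a `pii` tag makes sensitive data easy to locate for governance reviews.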

4. Design flexible data pipelines

Platforms like Apache Spark and AWS Glue simplify the process of extracting, transforming, and loading data without locking you into specific schema requirements. This aligns with the fluid storage philosophy of the data lake.
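Spark and Glue are the tools named above; the sketch below uses plain Python generators instead, as a hypothetical illustration of a pipeline that tolerates schema drift. Each stage touches only the fields it needs and passes everything else through, so a new or missing upstream field does not break the job. The stage names and record fields are assumptions.

```python
import json
from typing import Iterable, Iterator


def extract(lines: Iterable[str]) -> Iterator[dict]:
    """Parse raw JSON lines, skipping blanks."""
    for line in lines:
        if line.strip():
            yield json.loads(line)


def normalize_country(records: Iterable[dict]) -> Iterator[dict]:
    """Touch only "country"; any other (possibly new) fields pass through."""
    for rec in records:
        if "country" in rec:
            rec = {**rec, "country": str(rec["country"]).strip().upper()}
        yield rec


def load(records: Iterable[dict], sink: list) -> int:
    """Write records to the sink and return how many were loaded."""
    count = 0
    for rec in records:
        sink.append(rec)
        count += 1
    return count


source = [
    '{"id": 1, "country": " us "}',
    '{"id": 2, "country": "de", "referrer": "newsletter"}',  # new field upstream
    '{"id": 3}',                                            # field missing entirely
]
curated = []
loaded = load(normalize_country(extract(source)), curated)
```

Because the stages are chained generators, records stream through one at a time, and adding a stage means inserting one more function into the chain.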

5. Monitor performance regularly

Track user queries, job runtimes, and data storage costs. Look for bottlenecks and optimize operations so the data lake evolves alongside your usage patterns.
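One simple way to spot bottlenecks is to compare each job run against that job's typical runtime. The sketch below assumes runtimes have already been collected (for example, exported from a scheduler); the job names, numbers, and 2x threshold are hypothetical.

```python
from statistics import median

# Hypothetical job-run history: (job name, runtime in seconds).
runs = [
    ("daily_sales_rollup", 120), ("daily_sales_rollup", 130), ("daily_sales_rollup", 410),
    ("clickstream_compaction", 600), ("clickstream_compaction", 580),
    ("clickstream_compaction", 620),
]


def find_slow_runs(history, factor=2.0):
    """Flag runs that took more than `factor` times their job's median runtime."""
    by_job = {}
    for job, secs in history:
        by_job.setdefault(job, []).append(secs)
    medians = {job: median(times) for job, times in by_job.items()}
    return [(job, secs) for job, secs in history if secs > factor * medians[job]]


slow = find_slow_runs(runs)
```

The median is used rather than the mean so that a single slow run does not inflate the baseline it is being compared against. Here only the 410-second rollup run is flagged.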

6. Optimize for performance

Actively optimizing your data lake can significantly improve performance and reduce costs.

- Partitioning data by time or category speeds up query execution by narrowing the search scope.

- Using columnar storage formats such as Parquet or ORC improves read efficiency and reduces storage footprint.

- Implementing query acceleration layers (e.g., materialized views or caching engines) can enhance response times for repeated queries.

Together, these practices ensure your data lake not only stores data effectively but also delivers insights quickly and reliably.
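Partition pruning is the mechanism behind the partitioning advice. The sketch assumes Hive-style `name=value` directory names, a convention understood by engines such as Spark and Presto; the object keys and the `prune` helper are hypothetical. The filter runs on file paths alone, so files outside the requested date range are never opened.

```python
from datetime import date

# Hypothetical object keys using Hive-style partitions (name=value path segments).
object_keys = [
    "events/event_date=2024-01-01/part-0000.parquet",
    "events/event_date=2024-01-02/part-0000.parquet",
    "events/event_date=2024-01-02/part-0001.parquet",
    "events/event_date=2024-01-03/part-0000.parquet",
]


def partition_values(key):
    """Parse 'name=value' segments from an object key into a dict."""
    return dict(seg.split("=", 1) for seg in key.split("/") if "=" in seg)


def prune(keys, start, end):
    """Keep only files whose event_date partition falls within [start, end]."""
    selected = []
    for key in keys:
        value = partition_values(key).get("event_date")
        if value is not None and start <= date.fromisoformat(value) <= end:
            selected.append(key)
    return selected


# A query for a single day only needs to read two of the four files.
to_scan = prune(object_keys, date(2024, 1, 2), date(2024, 1, 2))
```

Combining this with a columnar format such as Parquet compounds the savings: pruning skips whole files, and the columnar layout lets the engine read only the columns a query references within the files that remain.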

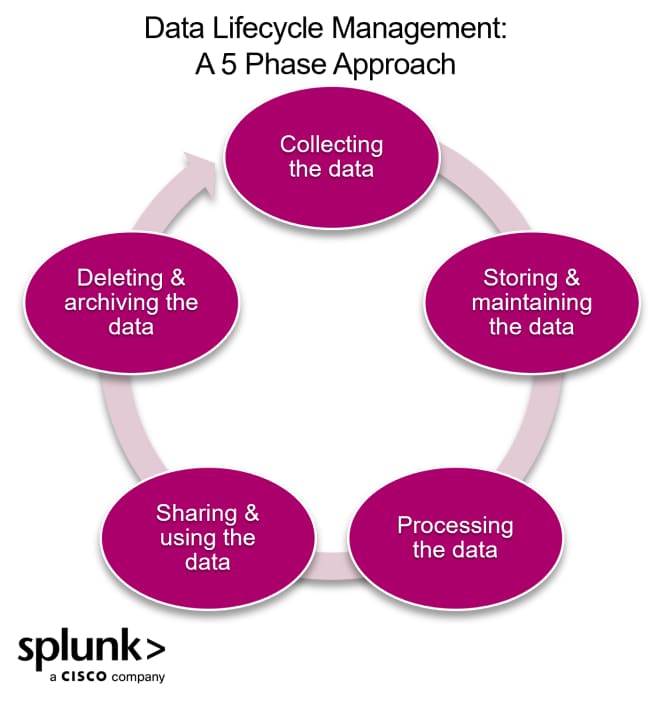

7. Manage the data lifecycle

As data lakes grow, managing the lifecycle of stored data becomes crucial. Not all data holds long-term value, so implementing strategies — like archiving, tiered storage, or automated deletion of stale datasets — helps keep storage costs under control and performance optimized.

Many cloud providers offer built-in lifecycle policies to automate this process.
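Age-based rules are the usual building block of these policies. The sketch below evaluates hypothetical rules in plain Python; cloud lifecycle policies (for example, S3 Lifecycle configurations) express similar transitions and expirations declaratively, and the thresholds, action names, and object keys here are assumptions.

```python
from datetime import date

# Hypothetical lifecycle rules: (minimum age in days, action), checked oldest-first.
RULES = [
    (365, "delete"),
    (90, "archive"),
    (30, "infrequent_access"),
]


def lifecycle_action(last_modified, today):
    """Return the storage action for an object based on its age."""
    age_days = (today - last_modified).days
    for min_age, action in RULES:
        if age_days >= min_age:
            return action
    return "keep"  # younger than every threshold: leave in the hot tier


today = date(2024, 6, 30)
objects = {
    "raw/logs/2023-05-01.jsonl": date(2023, 5, 1),
    "raw/logs/2024-02-01.jsonl": date(2024, 2, 1),
    "raw/logs/2024-05-20.jsonl": date(2024, 5, 20),
    "raw/logs/2024-06-29.jsonl": date(2024, 6, 29),
}
plan = {key: lifecycle_action(modified, today) for key, modified in objects.items()}
```

Checking rules from the oldest threshold down means each object gets exactly one action, the most aggressive one its age qualifies for.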

Challenges in managing a data lake (and how to solve them)

While the advantages of data lakes are compelling, there are challenges to consider:

- Poor data quality: Raw data often carries inconsistencies, errors, and duplications. Implement automated cleansing tools and data validation pipelines to ensure high-quality inputs.

- Overwhelming volume: The sheer volume of unfiltered data can overwhelm users. Implementing efficient indexing and metadata tagging can mitigate this.

- User-access complexity: New users may have difficulty querying unstructured data. To overcome this, invest in user-friendly query solutions like Presto or SQL-on-Hadoop tools.
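The data-quality point can be sketched as a small validation step that quarantines bad records with a reason instead of silently dropping them, so they can be inspected and fixed later. The field names and rules are hypothetical.

```python
# Hypothetical validation rules for incoming order records.
REQUIRED = ("order_id", "amount")


def validate(record):
    """Return a list of problems; an empty list means the record is valid."""
    problems = [f"missing {f}" for f in REQUIRED if record.get(f) in (None, "")]
    amount = record.get("amount")
    if amount not in (None, ""):
        try:
            if float(amount) < 0:
                problems.append("negative amount")
        except (TypeError, ValueError):
            problems.append("non-numeric amount")
    return problems


def cleanse(records):
    """Split records into valid (deduplicated by order_id) and quarantined."""
    seen, valid, quarantined = set(), [], []
    for rec in records:
        problems = validate(rec)
        if problems:
            quarantined.append((rec, problems))
        elif rec["order_id"] in seen:
            quarantined.append((rec, ["duplicate order_id"]))
        else:
            seen.add(rec["order_id"])
            valid.append(rec)
    return valid, quarantined


incoming = [
    {"order_id": "A1", "amount": "19.99"},
    {"order_id": "A1", "amount": "19.99"},  # duplicate
    {"order_id": "A2", "amount": "-5"},     # negative
    {"order_id": "A3", "amount": "ten"},    # non-numeric
    {"amount": "3.50"},                     # missing order_id
]
valid, quarantined = cleanse(incoming)
```

Keeping the quarantined records alongside their reasons also gives you a running measure of source quality, which helps decide where to fix problems upstream.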

Future trends for data lakes

As data ecosystems evolve, data lakes are becoming more intelligent and unified. Emerging trends include:

- AI-powered data cataloging for automated tagging and discovery

- The rise of data lakehouses that merge analytical performance with flexibility

- Tighter integration with real-time streaming platforms

Organizations are also moving toward governed self-service models, allowing more teams to access and analyze data without heavy IT involvement — all while maintaining control and compliance.

Harness the power of data lakes

A well-designed data lake is more than an advanced storage solution: it’s an opportunity to unify your organization's data.

To unlock new business insights from disparate, siloed data, consider building a data lake for your business today.

See an error or have a suggestion? Please let us know by emailing splunkblogs@cisco.com.

This posting does not necessarily represent Splunk's position, strategies or opinion.


About Splunk

The world’s leading organizations rely on Splunk, a Cisco company, to continuously strengthen digital resilience with our unified security and observability platform, powered by industry-leading AI.

Our customers trust Splunk’s award-winning security and observability solutions to secure and improve the reliability of their complex digital environments, at any scale.