Top 6 Data Analysis Techniques Used by Pro Data Analysts

Data is often referred to as the currency of the digital age, and for good reason. Decisions based on accurate data analysis can propel businesses, organizations, and even individuals toward better outcomes. But what exactly does it take to analyze data effectively?

More importantly, how can you leverage the right data analysis techniques to gain actionable insights?

In this article, I will unpack the most common data analysis techniques and their unique use cases.

What is data analysis and why does it matter?

Data analysis is the process of examining, organizing, and interpreting data to uncover valuable patterns, trends, or insights.

Data analysis takes many forms, from helping a retail company predict consumer behavior to enabling healthcare providers to identify disease outbreaks early. Its applications span every industry and sector.

Why is data analysis so important? Here are three main reasons:

- Improved decision-making: Instead of relying on gut instinct, data provides objective evidence.

- Enhanced efficiency: Insights drawn from data can streamline operations and cut costs.

- Competitive advantage: Businesses that leverage data analysis are more likely to outperform competitors who don't.

Whether you're a business analyst, marketer, or researcher, mastering data analysis is pivotal in an increasingly data-driven world.

Types of data analysis

There are four main types of data analytics, each providing a different kind of insight:

- Descriptive analytics: This technique involves describing or summarizing data to gain a better understanding of it. Examples include calculating averages, percentages, and frequencies.

- Diagnostic analytics: Going one step further, diagnostic analytics involves identifying the cause of a problem or trend. It aims to answer 'why' something occurred.

- Predictive analytics: As the name suggests, this method uses historical data and statistical modeling techniques to predict future outcomes.

- Prescriptive analytics: This technique uses data analysis combined with optimization and simulation algorithms to determine the best course of action in a given scenario.
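To make descriptive analytics concrete, here is a minimal Python sketch that computes the kinds of summaries mentioned above: an average, a percentage, and frequencies. The order data is hypothetical, and only the standard library is used.

```python
from collections import Counter
from statistics import mean, median

# Hypothetical order data: (order_value_usd, payment_method)
orders = [
    (120.0, "card"),
    (80.0, "card"),
    (45.5, "cash"),
    (200.0, "card"),
    (60.0, "voucher"),
]

values = [value for value, _ in orders]
methods = [method for _, method in orders]

average_value = mean(values)      # arithmetic mean of order values
median_value = median(values)     # middle value, less sensitive to outliers
method_counts = Counter(methods)  # frequency of each payment method
card_share = 100 * method_counts["card"] / len(orders)  # percentage of card orders

print(f"Average order value: {average_value:.2f}")
print(f"Median order value: {median_value:.2f}")
print(f"Payment method frequencies: {dict(method_counts)}")
print(f"Share paid by card: {card_share:.1f}%")
```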

Each of these analysis types can be further broken down into specific techniques, largely based on the outcomes you require. We'll share more about this below.

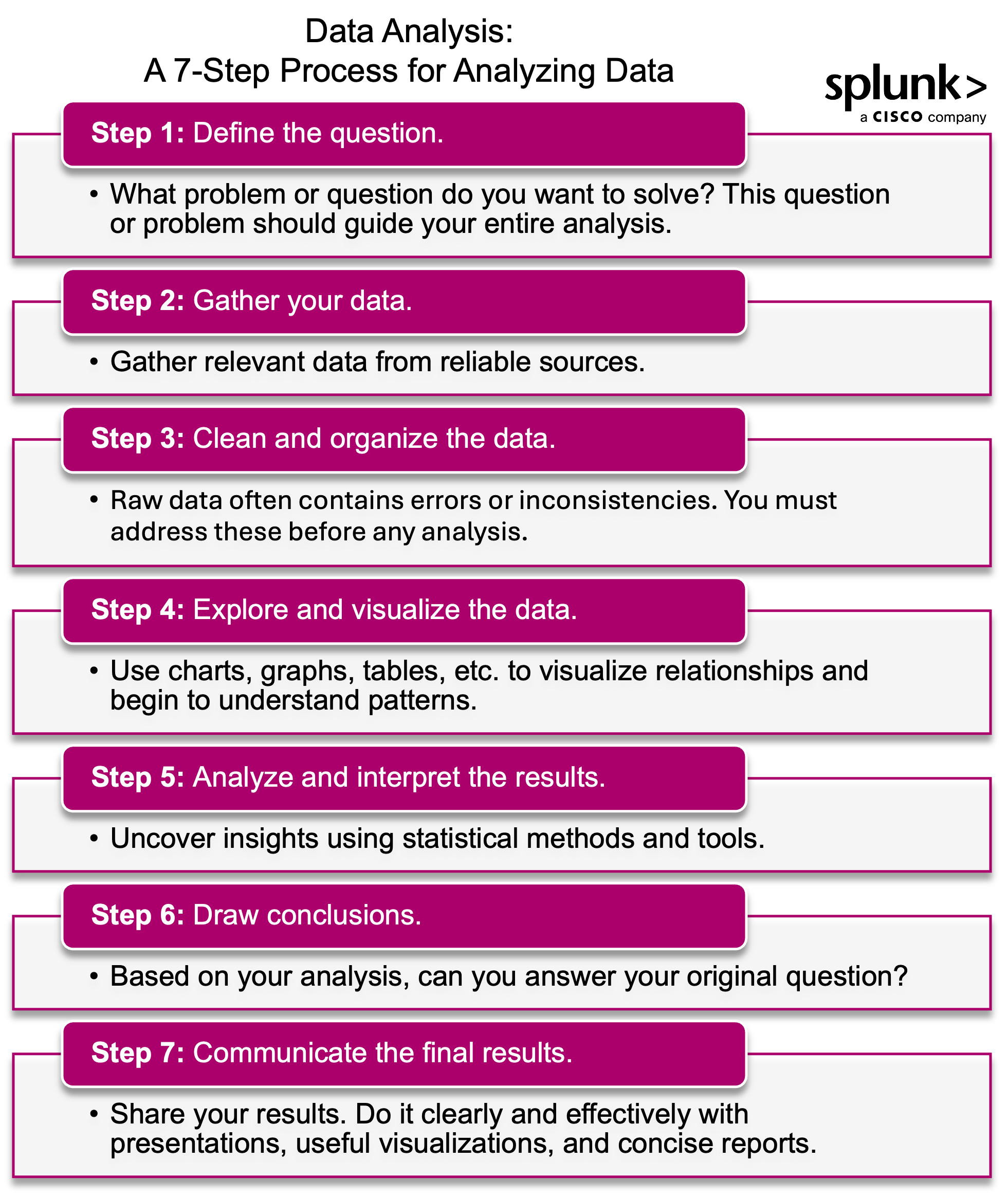

Data analysis in 7 steps

When you're performing data analysis, it generally follows a standard sequence of steps.

While the process may vary slightly depending on the methodology or tools used, here are seven fundamental steps you can follow:

- Defining the problem/question: What problem or question do you want to solve? Identifying this up front can guide your entire analysis.

- Gathering data: Without data, there's no analysis. Gather relevant data from reliable sources.

- Cleaning and organizing: This step is crucial, as raw data often contains errors or inconsistencies that need to be addressed before analysis.

- Exploring and visualizing: This involves looking at patterns and relationships in the data through charts, graphs, tables, etc.

- Analyzing and interpreting: Using statistical methods and tools, analyze the data to uncover insights or patterns.

- Drawing conclusions: Based on your analysis, what conclusions can you draw? Can you answer your initial question/problem?

- Communicating results: Finally, communicate your findings clearly and effectively through reports, presentations, or visualizations.
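To see how several of these steps fit together, here is a minimal Python sketch on a tiny, hypothetical sales dataset. The question, field names, and values are made up for illustration, and the charting part of step 4 is skipped; in a real project the data would come from a file, database, or API.

```python
from statistics import mean

# Step 1 - define the question (hypothetical): which region has the highest average weekly sales?

# Step 2 - gather data: hypothetical raw records, as they might arrive from a CSV export
raw_records = [
    {"week": 1, "region": "North", "sales": "1200"},
    {"week": 2, "region": "north ", "sales": "1350"},  # inconsistent casing and whitespace
    {"week": 1, "region": "South", "sales": ""},       # missing value
    {"week": 2, "region": "South", "sales": "900"},
    {"week": 3, "region": "South", "sales": "950"},
    {"week": 1, "region": "North", "sales": "1200"},   # exact duplicate of the first record
]

# Step 3 - clean and organize: normalize labels, skip missing values, drop duplicates
cleaned = []
seen = set()
for record in raw_records:
    if not record["sales"]:
        continue
    row = (record["week"], record["region"].strip().title(), float(record["sales"]))
    if row in seen:
        continue
    seen.add(row)
    cleaned.append(row)

# Steps 4-5 - explore and analyze: group sales by region and average them
sales_by_region = {}
for _week, region, sales in cleaned:
    sales_by_region.setdefault(region, []).append(sales)
averages = {region: mean(values) for region, values in sales_by_region.items()}

# Step 6 - draw a conclusion: the region with the highest average
best_region = max(averages, key=averages.get)

# Step 7 - communicate the result
for region, avg in sorted(averages.items()):
    print(f"{region}: average weekly sales {avg:.1f}")
print(f"Highest average weekly sales: {best_region}")
```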

Common data analysis techniques

When it comes to analyzing data, there is a plethora of methodologies to choose from, depending on your goals. The type of dataset you're working with also plays a part.

Let's take a closer look at some widely used techniques below. (And be sure to check out the top data analysis tools, too.)

1. Regression analysis

Regression analysis is used to identify relationships between variables. It's a statistical method that predicts one variable based on the values of others.

Regression analysis can be done using various models, such as simple linear regression (one predictor variable) or multiple regression (several predictor variables).

Example: A marketing team might use regression analysis to determine how changes in advertising spending influence sales, and then use the fitted model to predict sales based on advertising spend or customer demographics.

To get started with this technique, try scikit-learn, a machine learning library for Python.

2. Clustering

Clustering involves grouping similar data points together based on shared characteristics. It’s especially useful in segmentation tasks such as dividing customers into distinct groups with shared buying behaviors.

Clustering can come in several forms:

- K-Means clustering: This method divides data into a pre-defined number of clusters (k), assigning each data point to the cluster whose mean (centroid) is nearest.

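- Hierarchical clustering: Unlike K-Means, this technique doesn't require the number of clusters to be specified beforehand. In its common agglomerative form, it starts with each data point as its own group and repeatedly merges the most similar groups until all data points are in one group. The resulting tree can then be cut at whatever level gives a useful number of clusters.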

Example: A retail company might use clustering to segment its customer base by buying behaviors and tailor marketing strategies accordingly.

To explore how clustering can work for your business or organization, check out scikit-learn, which offers various clustering algorithms in Python.
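To show what K-Means actually does, here is a minimal, dependency-free sketch of the standard K-Means procedure (Lloyd's algorithm): assign each point to its nearest centroid, move each centroid to the mean of its points, and repeat until nothing changes. The customer data and the `k_means` helper are hypothetical. In practice, scikit-learn's `KMeans` and `AgglomerativeClustering` classes cover K-Means and hierarchical clustering respectively.

```python
import math
import random

def k_means(points, k, iterations=100, seed=0):
    """Cluster 2-D points into k groups with Lloyd's algorithm (basic K-Means)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize with k data points chosen at random
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = []
        for i, members in enumerate(clusters):
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: centroids no longer move
        centroids = new_centroids
    return centroids, clusters

# Hypothetical customers: (store visits per year, average basket size in tens of USD)
customers = [(2, 3), (3, 2), (2, 2), (20, 25), (22, 24), (21, 26)]
centroids, clusters = k_means(customers, k=2)
for centroid, members in zip(centroids, clusters):
    print(centroid, members)
```

Because K-Means depends on its starting centroids, results can vary between runs. Fixing the `seed` makes this sketch repeatable, and library implementations typically run several initializations and keep the best result.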

3. Time series analysis

Time series analysis examines data points recorded over time to identify trends and seasonal patterns and to make predictions. It's commonly used for:

- Stock market forecasting

- Weather predictions

- Supply chain management

Example: A logistics company might use time series analysis to optimize delivery schedules during peak seasons. These techniques form the backbone of data analysis and can be adapted across a variety of datasets and challenges.

(Related reading: time series forecasting & time series databases.)
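A simple building block of time series analysis is the moving average, which smooths out short-term noise so the underlying trend is easier to see. The sketch below computes a trailing 3-week moving average on hypothetical weekly delivery volumes and uses the latest average as a naive forecast for the following week.

```python
# Hypothetical weekly delivery volumes for a logistics company
weekly_deliveries = [120, 130, 125, 140, 150, 145, 160, 170]

def moving_average(series, window):
    """Trailing moving average: the mean of each run of `window` consecutive values."""
    return [
        sum(series[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(series))
    ]

smoothed = moving_average(weekly_deliveries, window=3)

# Naive moving-average forecast: next week's volume is estimated as the latest 3-week average
forecast_next_week = smoothed[-1]

print("3-week moving average:", [round(v, 1) for v in smoothed])
print(f"Forecast for next week: {forecast_next_week:.1f}")
```

A moving-average forecast lags behind a rising or falling trend (here it predicts about 158 deliveries even though the last week had 170), so dedicated methods such as exponential smoothing or ARIMA models are the usual next step for real forecasting.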

4. Text analysis

Text analysis is a technique that allows for the extraction of insights from large amounts of text data. With the rise of social media and customer reviews, this technique is becoming increasingly valuable for businesses looking to understand their customers' sentiments and preferences.

A more advanced form of text analysis is natural language processing (NLP), which uses algorithms to process and analyze human language.

Example: A hotel chain might use text analysis to gather customer feedback from online reviews and improve its services based on common themes or issues mentioned by customers.

To learn more about text analysis, check out NLTK (Natural Language Toolkit) for Python.
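As a toy illustration of the hotel-review example, the sketch below counts frequent terms across reviews and scores each review with a tiny, hand-made sentiment lexicon. The reviews and word lists are hypothetical and far too small for real use; NLTK provides proper tokenizers, stopword lists, and the VADER sentiment analyzer for that.

```python
import re
from collections import Counter

# Hypothetical hotel reviews
reviews = [
    "The room was clean and the staff were friendly.",
    "Breakfast was cold and the wifi was slow.",
    "Friendly staff, but the room was noisy.",
    "Great location, slow check-in.",
]

# Toy word lists (hypothetical); real projects use curated lexicons or trained models
POSITIVE = {"clean", "friendly", "great"}
NEGATIVE = {"cold", "slow", "noisy"}
STOPWORDS = {"the", "and", "was", "were", "but"}

def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())

def sentiment_score(text):
    """Number of positive words minus number of negative words in one review."""
    tokens = tokenize(text)
    return sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)

# The most frequent non-stopword terms across all reviews hint at common themes
term_counts = Counter(
    token for review in reviews for token in tokenize(review) if token not in STOPWORDS
)

print("Common terms:", term_counts.most_common(3))
for review in reviews:
    print(f"{sentiment_score(review):+d}  {review}")
```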

5. Data visualization

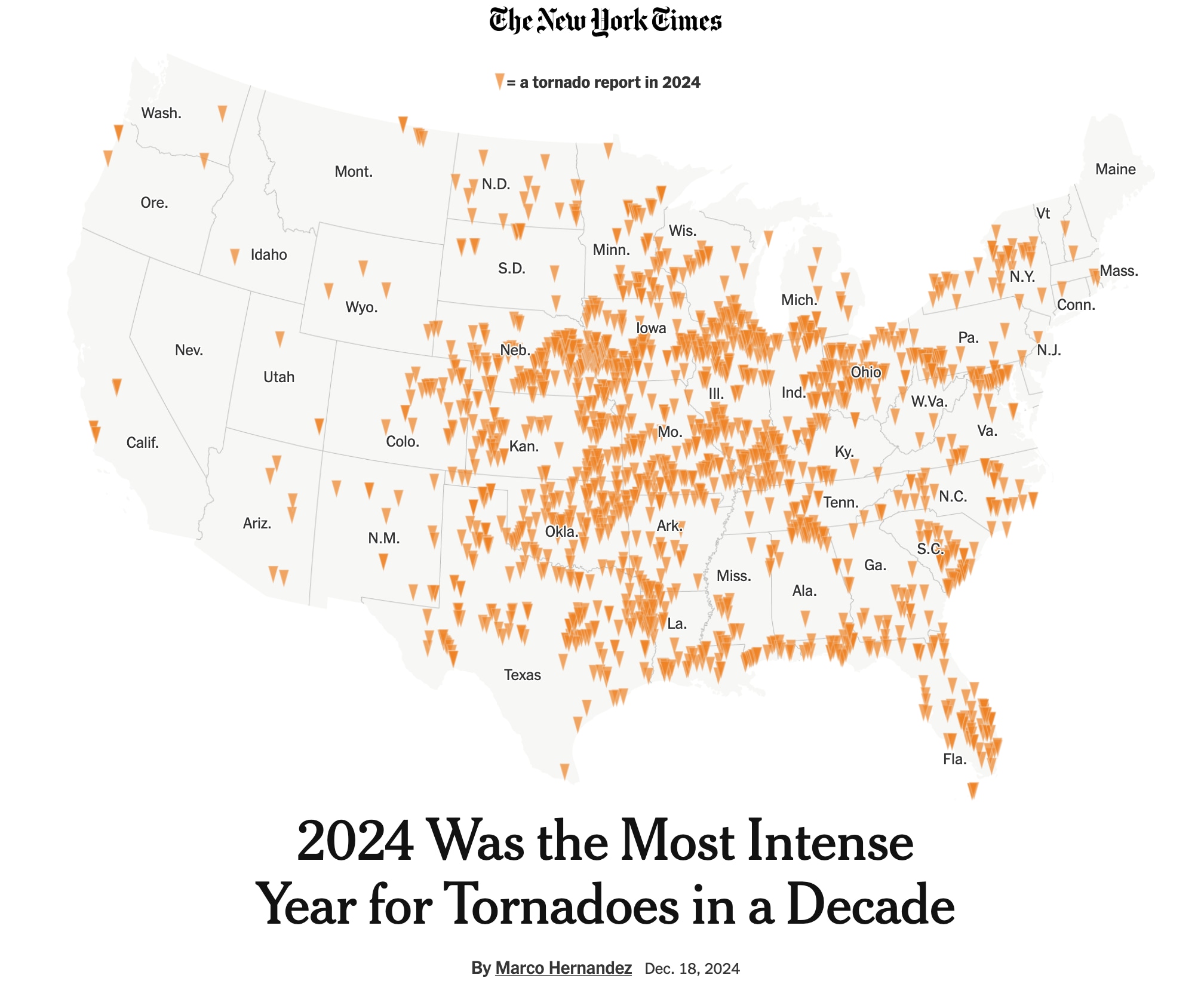

Data visualization is exactly what it sounds like: presenting data in visual formats — such as charts, graphs, and infographics — to help identify trends and patterns quickly. This technique is especially useful when working with large datasets or complex information.

Examples: A news organization can use data visualization techniques to create interactive charts and maps to present election results in an easy-to-understand format. (The New York Times is one great example of this.) Or a finance team can visualize stock market trends to inform investment decisions.

An example of data visualization.

Original source: https://www.nytimes.com/interactive/2024/12/18/us/tornadoes-2024.html

You can create visualizations without programming by using tools such as Tableau or Power BI, which let you build interactive visualizations and share them with others.

For more advanced or complex visualizations, you can generate charts programmatically using languages such as R, JavaScript, and Python.

(Get more details on popular programming languages.)
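In Python, charts are usually drawn with a library such as matplotlib. To keep this example dependency-free, the sketch below renders a horizontal bar chart as plain text instead, which shows the core idea: scale each value to a bar length so differences are visible at a glance. The revenue figures and the `text_bar_chart` helper are hypothetical, and the helper assumes non-negative values.

```python
def text_bar_chart(data, width=40, symbol="#"):
    """Render a horizontal bar chart as text, scaling the largest value to `width` symbols."""
    largest = max(data.values())
    label_width = max(len(label) for label in data)
    lines = []
    for label, value in data.items():
        # Bar length is proportional to the value; guard against an all-zero dataset
        bar_length = round(value / largest * width) if largest else 0
        lines.append(f"{label.ljust(label_width)} | {symbol * bar_length} {value}")
    return "\n".join(lines)

# Hypothetical quarterly revenue in millions of USD
quarterly_revenue = {"Q1": 12.5, "Q2": 15.0, "Q3": 9.5, "Q4": 20.0}
chart = text_bar_chart(quarterly_revenue, width=20)
print(chart)
```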

6. Exploratory data analysis

Exploratory data analysis (EDA) involves examining a dataset to understand its structure, variables, and relationships between them. This technique is often used at the beginning of a project to get an overview of the data and determine which techniques would be most suitable for analysis.

EDA is common practice among data analysts and can be done with various tools, such as Microsoft Excel, SQL, and R.

Example: A data analyst in a market research company might use exploratory data analysis to identify key demographics within their target audience before conducting surveys or focus groups.

(Hands-on tutorial: Perform EDA with Splunk for anomaly detection.)

Wrapping Up

Data analysis is a broad field with numerous techniques that can be applied depending on your goals and datasets.

With the huge potential of data analysis techniques and an ever-expanding data analytics toolset, learning how to harness them is crucial. I recommend trying out some of these techniques in your own projects.

See an error or have a suggestion? Please let us know by emailing splunkblogs@cisco.com.

This posting does not necessarily represent Splunk's position, strategies or opinion.


About Splunk

The world’s leading organizations rely on Splunk, a Cisco company, to continuously strengthen digital resilience with our unified security and observability platform, powered by industry-leading AI.

Our customers trust Splunk’s award-winning security and observability solutions to secure and improve the reliability of their complex digital environments, at any scale.