Concurrency in Programming and Computer Science: The Complete Guide

Ever notice how your computer can stream music, update software, and run apps all at once without missing a beat? That kind of multitasking isn’t just luck — it’s all thanks to a foundational concept in computer science called concurrency.

Concurrency means your computer is handling multiple computations or tasks whose execution overlaps in time. The tasks all make progress during the same period without necessarily running at the same instant. It’s what allows systems to structure and manage multiple operations efficiently, even when running on a single processor.

Whether you’re working with embedded devices or large-scale distributed systems, concurrency is key to making the most of your computing resources. Let’s take a look at why it matters and how it works.

What is concurrency?

Your computer is running many tasks and processes at this very moment: some are apps you’ve opened and are actively using, others are running or updating in the background. Concurrency is one of the key mechanisms that enables all this activity. In computer science, concurrency refers to the execution of independently operating processes, or smaller units of work such as threads and functions, whose lifetimes overlap.

Imagine your computer is like a busy kitchen, where a cook must prepare multiple dishes at the same time. Think of concurrency like the cook multitasking — doing different tasks that seem to happen at the same time, even though they may not be completed simultaneously.

It differs from parallelism, which involves executing multiple processes simultaneously, regardless of whether those processes are related.

Concurrency vs. parallelism

Concurrent operations and parallel operations can go together, and quite often do. But they’re not the same thing:

- Concurrency means handling different tasks in a way that they can be switched between quickly. It's like the cook chopping vegetables and stirring a pot, moving between tasks as needed. The cook isn't doing both tasks at the exact same moment but manages to keep everything going smoothly.

- Parallelism, on the other hand, is like having multiple cooks working on different tasks at the same time, each one doing their own thing independently.

When a computer runs several programs at once, as it typically does, it uses concurrency to manage them. Even if the computer has only one CPU, it can still juggle tasks, just like a single cook in a kitchen. It organizes and coordinates these tasks so they run efficiently without interfering with each other.

So, we can say that concurrency is about juggling tasks efficiently, while parallelism is about doing many tasks at the same time. Both help computers run multiple processes effectively, even if they have limited resources.
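To make the single-cook picture concrete, here is a minimal sketch in Python using `asyncio`. The task names (`chop`, `stir`) and the helper functions are invented for illustration. Both tasks are in progress over the same period, yet everything runs on one thread, so this is concurrency without parallelism.

```python
import asyncio
import threading

async def cook_task(name, steps, log):
    """One kitchen task; each await is a point where the cook can switch."""
    for step in range(1, steps + 1):
        log.append((name, step, threading.get_ident()))
        await asyncio.sleep(0)  # yield control so another task can run

async def kitchen():
    log = []
    # Both tasks make progress over the same period, on a single thread.
    await asyncio.gather(
        cook_task("chop", 3, log),
        cook_task("stir", 3, log),
    )
    return log

log = asyncio.run(kitchen())
for name, step, _ in log:
    print(name, step)
```

The printed steps alternate between the two tasks, and every entry records the same thread ID: one cook, switching between jobs.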

Tasks must be interruptible

The secret to concurrency is making sure tasks are interruptible — meaning, the tasks can be paused and resumed. This way, the computer can allocate resources like memory and processing power to different tasks as they need them, keeping everything running smoothly.
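One way to see pause-and-resume in miniature is with Python generators, which suspend at each `yield` and pick up where they left off. The sketch below is a toy round-robin scheduler, not how an operating system does it, and the task names are made up for the example.

```python
from collections import deque

def task(name, steps):
    """A task that pauses after every step by yielding control."""
    for step in range(1, steps + 1):
        yield f"{name}: step {step}"  # pause here; resume on the next next()

def run_round_robin(tasks):
    """Give each task one step at a time until all are finished."""
    ready = deque(tasks)
    trace = []
    while ready:
        current = ready.popleft()
        try:
            trace.append(next(current))  # resume the task for one step
            ready.append(current)        # not done yet: back of the queue
        except StopIteration:
            pass                         # finished: drop it
    return trace

trace = run_round_robin([task("download", 2), task("render", 3)])
print("\n".join(trace))
```

Because each task can be paused, the scheduler is free to hand the next time slice to whichever task is waiting its turn.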

Concurrency in action

Here is a visual demonstration of concurrent operations, developed and presented at Heroku’s Waza conference.

Imagine a gopher tasked with moving obsolete manuals into a fire pit. One gopher alone would take too long. By putting a second gopher to work in parallel, the task can be completed up to twice as fast.

Now, to make this concurrent, you can decompose the single task into smaller sub-tasks: loading, moving, and tossing books. These sub-tasks can happen at the same time, limited by resource availability, such as the number of gophers, carts, and incinerators available. Adding more gophers can reduce delays caused by cart-bound operations.

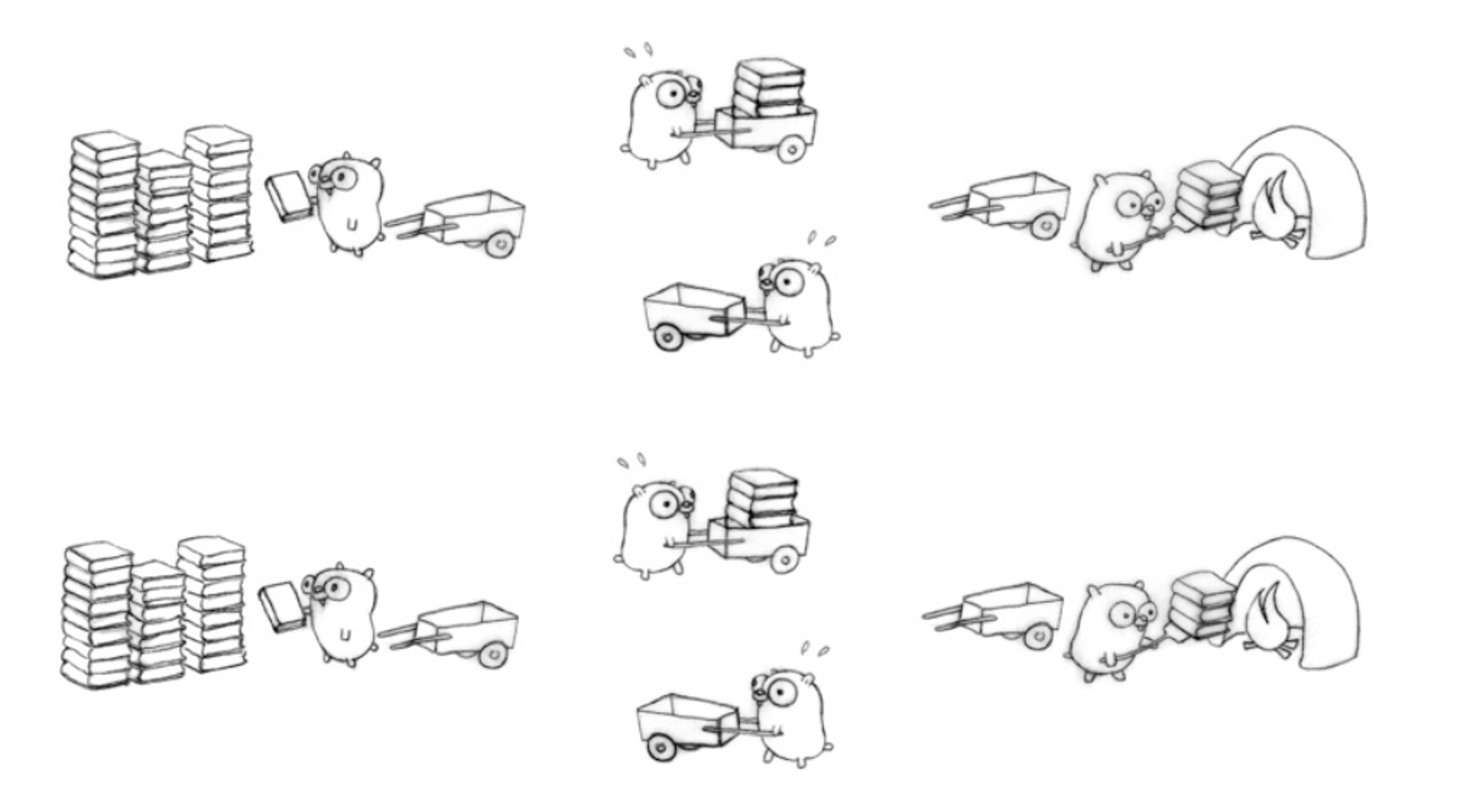

Concurrency in a multi-core CPU system

Key to concurrency: each sub-task is interruptible. Gophers can communicate to eliminate delays, enabling concurrent processes. A concurrent process is then easily parallelized and scalable, for example, if you have a multi-core CPU system:

Enabling multi-core, multi-thread parallel processing

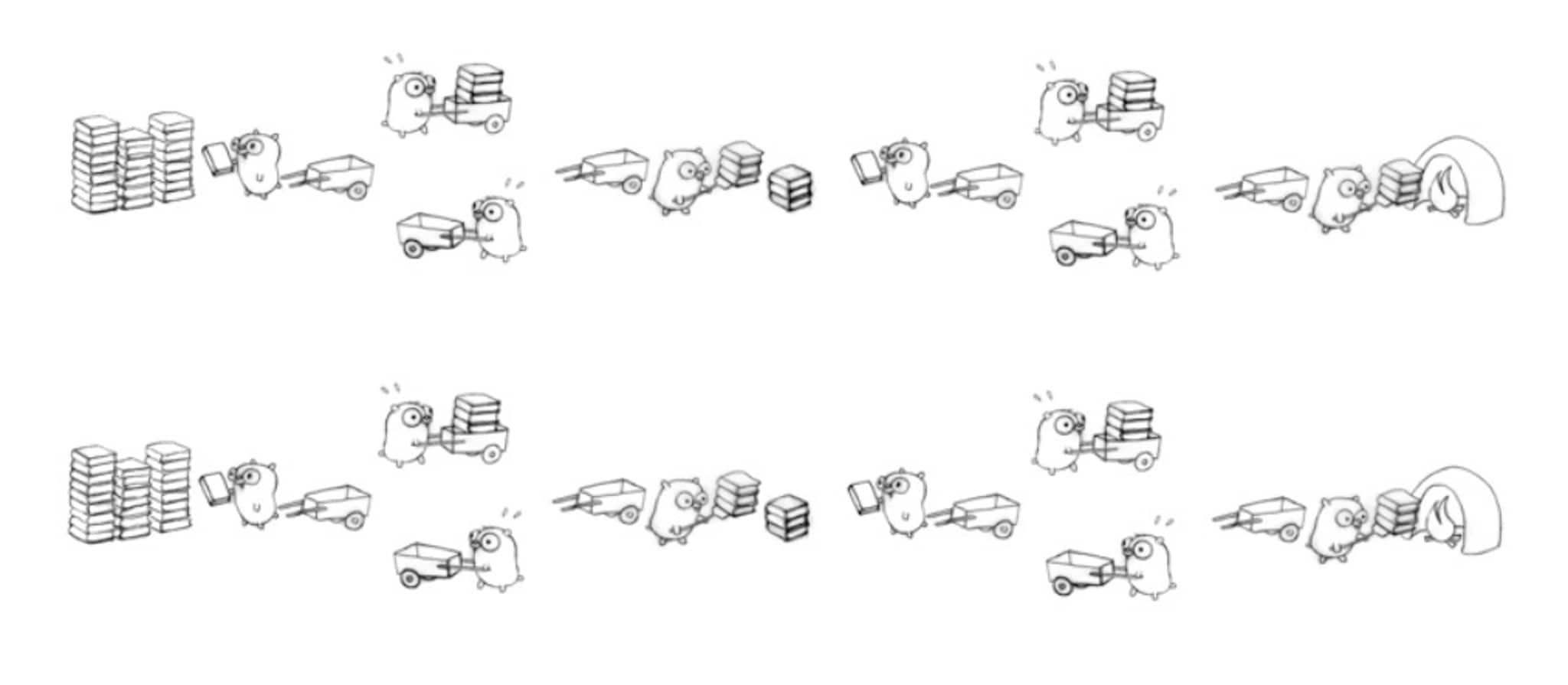

And here’s a faster, more efficient solution. Think multi-core, multi-thread parallel processing that takes advantage of the concurrent structure.
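The load, move, and toss stages of the gopher example can be sketched as a small pipeline. This is an illustrative Python version using threads and bounded queues; the function names, the `DONE` sentinel, and the queue sizes are assumptions made for the example, not part of the original demonstration.

```python
import queue
import threading

DONE = object()  # sentinel: no more books are coming

def load(books, carts):
    """First gopher: load each book onto a cart."""
    for book in books:
        carts.put(book)  # blocks while every cart is in use
    carts.put(DONE)

def move(carts, incinerator):
    """Second gopher: wheel books from the carts to the incinerator."""
    while (book := carts.get()) is not DONE:
        incinerator.put(book)
    incinerator.put(DONE)

def toss(incinerator, burned):
    """Third gopher: toss each book into the fire."""
    while (book := incinerator.get()) is not DONE:
        burned.append(book)

def burn_manuals(books, cart_count=2):
    carts = queue.Queue(maxsize=cart_count)  # limited resource
    incinerator = queue.Queue(maxsize=1)     # one incinerator slot
    burned = []
    gophers = [
        threading.Thread(target=load, args=(books, carts)),
        threading.Thread(target=move, args=(carts, incinerator)),
        threading.Thread(target=toss, args=(incinerator, burned)),
    ]
    for gopher in gophers:
        gopher.start()
    for gopher in gophers:
        gopher.join()
    return burned

print(burn_manuals([f"manual-{i}" for i in range(5)]))
```

Each stage waits only when its queue is empty or full, so the bounded queues play the role of the carts: they cap how much work is in flight and let the stages coordinate without any extra locking.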

Advantages of concurrency

Concurrency offers several advantages, especially when limited computing resources are available to perform multiple computing processes. These processes may be bound by:

- I/O operations

- Hardware and software performance

- External factors including time, user input and access to data

- Other factors

By exploiting the process structure to enable concurrent execution of sequential process tasks, you can expect the following key advantages:

Improved performance and throughput

Concurrent execution takes advantage of the process structure to keep multiple processes in progress at once. While one task sits idle waiting on I/O, it can be interrupted and its system resources released to other tasks that are ready to execute. A long-running CPU-bound task can likewise be paused so that other tasks get a turn.
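A rough way to observe this effect is to compare running a few I/O-style waits one after another with running them concurrently. In this Python sketch, `fetch` is a hypothetical stand-in for a network or disk call, with `time.sleep` simulating the wait.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fetch(name, delay=0.2):
    """Stand-in for an I/O call; the sleep simulates waiting on a device."""
    time.sleep(delay)
    return f"{name}: done"

def run_sequential(names):
    return [fetch(name) for name in names]

def run_concurrent(names):
    # Each wait overlaps with the others instead of queuing behind them.
    with ThreadPoolExecutor(max_workers=len(names)) as pool:
        return list(pool.map(fetch, names))

def timed(fn, names):
    start = time.perf_counter()
    result = fn(names)
    return result, time.perf_counter() - start

names = ["orders", "users", "logs"]
seq_result, seq_time = timed(run_sequential, names)
con_result, con_time = timed(run_concurrent, names)
print(f"sequential {seq_time:.2f}s, concurrent {con_time:.2f}s")
```

Both versions return the same results, but the concurrent run should take roughly as long as the slowest single wait rather than the sum of all three.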

Parallelism

While concurrency is not parallelism, it makes parallelism easy. Concurrency decomposes the problem into independent tasks. For example, a chef can prepare ingredients and boil water in parallel as separate, isolated and independent tasks, even when only one stove is available.
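As a sketch of why a good decomposition makes parallelism easier, the Python example below splits a job into independent tasks and hands them to an executor. The `count_primes` workload and the `run_all` helper are invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

def count_primes(limit):
    """Independent unit of work: count the primes below `limit`."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count

def run_all(limits, executor_cls=ThreadPoolExecutor):
    """Run each independent task on whichever executor is supplied."""
    with executor_cls() as pool:
        return list(pool.map(count_primes, limits))

print(run_all([10, 100, 1000]))  # prints [4, 25, 168]
```

Because the tasks share nothing, the decomposition does not change when the execution strategy does. Passing `concurrent.futures.ProcessPoolExecutor` as `executor_cls` (from inside an `if __name__ == "__main__":` guard) would let the same tasks run on separate CPU cores.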

Implicit synchronization

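When tasks coordinate by handing work to one another, the structure of the process implicitly synchronizes them: a task simply waits until its input arrives, so little explicit task synchronization is required. Tasks that share mutable state directly still need explicit coordination, such as locks. Resources can be allocated to competing tasks during idle time.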

Improved resource utilization

Resource sharing between multiple processes reduces idle time. It keeps running system components active with tasks. For example, while waiting for data from one I/O channel, the CPU core can process another task.

Responsive systems

Background tasks such as monitoring and data logging continue to run without interrupting main operations, whenever adequate computing resources are available for the background tasks.
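Here is a minimal Python sketch of that idea, assuming a hypothetical `monitor` function that logs a timestamp at a fixed interval while the main work carries on. The interval and the simulated main task are placeholders.

```python
import threading
import time

def monitor(stop, samples, interval=0.01):
    """Background task: record a sample until asked to stop."""
    while not stop.is_set():
        samples.append(time.perf_counter())
        stop.wait(interval)  # sleep, but wake early if stop is set

def main_work():
    """Foreground task that the monitor must not block."""
    time.sleep(0.1)  # stands in for waiting on user input or I/O
    return "main work finished"

stop = threading.Event()
samples = []
worker = threading.Thread(target=monitor, args=(stop, samples), daemon=True)
worker.start()

result = main_work()  # runs while the monitor keeps sampling
stop.set()            # ask the background task to finish
worker.join()
print(result, f"({len(samples)} samples logged in the background)")
```

The main operation never calls into the monitor; the two only share the `stop` event, which is how the foreground signals that logging can end.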

Scalability, modularity, and fault isolation

Since the process is decomposed into smaller tasks, each can be distributed across modular systems. Each system can handle its own task or logic, and the concurrent units coordinate and communicate across the distributed network to maintain synchronization of processes.
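Fault isolation can be illustrated with a small Python `asyncio` sketch. The service names and the simulated crash are made up; the point is only that one failing unit does not take its siblings down with it.

```python
import asyncio

async def service(name, fail=False):
    """A hypothetical unit of work that may crash."""
    await asyncio.sleep(0.01)  # stands in for real work
    if fail:
        raise RuntimeError(f"{name} crashed")
    return f"{name} ok"

async def run_services():
    names = ["auth", "billing", "search"]
    results = await asyncio.gather(
        service("auth"),
        service("billing", fail=True),
        service("search"),
        return_exceptions=True,  # collect failures as values instead of raising
    )
    return dict(zip(names, results))

report = asyncio.run(run_services())
for name, outcome in report.items():
    status = "FAILED" if isinstance(outcome, Exception) else "ok"
    print(name, status)
```

The failure in `billing` is captured in the report, while `auth` and `search` complete normally and can be handled independently.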

These value propositions hold true for centralized or monolithic systems — or conceptually, for any computing system executing multiple processes on a single CPU core. But what happens in a distributed environment?

Concurrency in distributed environments

When computers have access to more resources, like multiple servers or processors, they can handle tasks in a way that's both concurrent and parallel. This means tasks can run side-by-side, making systems work faster and more efficiently. Here's how it works:

- Concurrency and parallelism: Concurrency helps manage tasks efficiently — engaging or disengaging resources as needed. In contrast, parallelism allows tasks to run at the same time. In distributed systems, these concepts work together to make the system faster and more flexible.

- Communication: Imagine each part of a distributed system as a person in a team. For the team to work well, they need to communicate in real-time. So, when scaling up concurrency, make sure to maintain the real-time communication across system components.

- Reliability and consistency: Distributed systems need to be reliable. Concurrency control requires fault isolation, replication, and consensus. This improves reliability, performance, and availability across distributed nodes. In database systems, replication needs consensus: even when some nodes fail, some data is corrupted, or lag delays a node's state update, the database must establish consensus on a single value or state.

- Scheduling strategy and resource management: It's important to use resources wisely to avoid issues like latency and extra cost. This means organizing tasks so that they use the available computing power effectively, prioritizing important tasks, honoring schedules, and managing network traffic to keep everything running smoothly.
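The task prioritization mentioned in the last point can be sketched with a priority queue. The `PriorityScheduler` class below is a hypothetical, single-machine illustration in Python: lower numbers run first, and tasks with equal priority keep their submission order.

```python
import heapq
import itertools

class PriorityScheduler:
    """Toy scheduler: lowest priority number runs first, ties run FIFO."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker preserves FIFO order

    def submit(self, priority, name):
        heapq.heappush(self._heap, (priority, next(self._order), name))

    def run(self):
        executed = []
        while self._heap:
            _, _, name = heapq.heappop(self._heap)
            executed.append(name)  # a real scheduler would dispatch here
        return executed

scheduler = PriorityScheduler()
scheduler.submit(2, "rebuild search index")
scheduler.submit(0, "serve user request")
scheduler.submit(1, "replicate write")
scheduler.submit(0, "serve another request")
order = scheduler.run()
print(order)
```

User-facing requests are dispatched before replication, and the index rebuild waits until nothing more urgent is queued.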

In distributed environments, concurrency helps systems stay efficient and reliable, allowing them to handle complex tasks and large amounts of data without slowing down or crashing.
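To give a feel for the consensus requirement described above, the Python sketch below shows only the majority-counting step: a value is accepted when a strict majority of the cluster reports it. This is a deliberate simplification with an invented `majority_value` helper; production systems typically rely on full consensus protocols such as Raft or Paxos.

```python
from collections import Counter

def majority_value(replies, cluster_size):
    """Return the value reported by a strict majority of the cluster, else None.

    `replies` holds one entry per node that answered; failed nodes are absent.
    """
    if not replies:
        return None
    value, votes = Counter(replies).most_common(1)[0]
    quorum = cluster_size // 2 + 1
    return value if votes >= quorum else None

# Five-node cluster: one node is down and one is lagging on an old value.
print(majority_value(["v2", "v2", "v1", "v2"], cluster_size=5))  # prints v2
# Only two nodes answered and they disagree, so no value is accepted.
print(majority_value(["v2", "v1"], cluster_size=5))              # prints None
```

Requiring a majority of the whole cluster, not just of the nodes that replied, is what prevents two disjoint groups of nodes from each accepting a different value.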

To wrap up

Traditional computer programs were designed for sequential, deterministic execution. Parallelism enabled scalability: doing more, with more. The key challenge is that modern technology problems require highly scalable computing capabilities, because data volumes keep growing rapidly and most technology processes are inherently data-driven.

On an abstract level, code functions and the interacting hardware components are not always self-contained. They engage and communicate with multiple distributed systems, nodes, and code functions. For this reason, we need concurrency: being able to execute more computing operations with fewer computing resources.

It’s plain to see how concurrency touches every aspect of our digital lives, enabling both efficiency and innovation.

