Predictions: AI and Automation

Artificial Intelligence (AI) - or more specifically Machine Learning (ML) - and automation were big topics for many of our customers in 2022. Common reasons for the interest in AI and automation were to: increase efficiency, reduce manual processing, minimise human error and - especially for the use of ML - identify ‘unknown unknowns’.

These are all great benefits, and in the interest of efficiency, the header image for this blog has been created using DALL-E - asking for a Splunk dashboard showing some complex machine learning predictions.

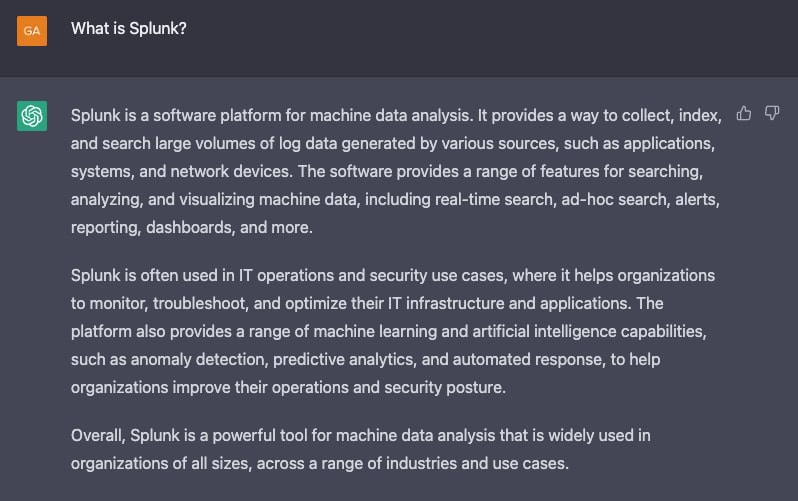

Looking at DALL-E, one of the things that stood out in 2022 was how OpenAI has really led the way in showing people the art of the possible with machine learning. Both DALL-E and ChatGPT showcase some incredible innovations. In particular, the excitement around ChatGPT was really interesting to follow - did anyone else find their LinkedIn feed consumed with ChatGPT examples? Check out the near-perfect elevator pitch for Splunk that it created.

Thankfully, the OpenAI reality of 2022 was more enjoyable than the dystopian prediction from the 1973 film Soylent Green, though both raised some interesting questions around placing too much trust in technology.

So what does 2023 have in store for us with respect to AI and automation? Here are some thoughts on where we are likely to see a focus this year:

- People: Data science skills continue to be in demand, but you may witness a rise in Data Champions - people who are experts in their enterprise data.

- Bridging the operational gap: Moving from experimentation to production is a well-known gap in data science projects, but this year expect to see more focus on how data science platforms can be more tightly integrated with operational platforms.

- Keeping things simple: For all the excitement around Large Language Models (LLMs), the biggest successes this year are likely to come from organisations who focus on the impact rather than the novelty of their analytics.

- Oversight: Tighter regulation - particularly in the EU - means you need to have better checks and balances in place for your analytics and automations.

- Hype: Dissecting the hottest automation & AI topics: widespread adoption of LLMs, causal AI and malicious attacks on ML models.

People

Despite how quickly some of the advances in ML are being made, key skills in data will remain hugely important this year and beyond.

It might not be that exciting, but the simple premise that you should know thy data is critical to the successful use of AI and automation. Customers who have been really successful with AI or automation all employ experts on their enterprise data - a.k.a. Data Champions - knowing which sources and features are of good quality and being able to articulate exactly what their data relates to in their organisations. Having people in your organisation who understand the data that you are putting into AI processes and really understand the enriched outputs is critical if you want to keep your processes understandable, ethical and ultimately valuable to the business.

Staying on the theme of people for a minute, keeping a human-in-the-loop is also important to validate the output of AI-based systems or confirm decisions that are being made by automations. To circle back to ChatGPT, check out these images for why having someone validate the output of an AI system is important. Talk about easily persuaded…

Furthermore, not having a human-in-the-loop for advanced automations can be a risk. For example, it can be riskier to automate a response action based on the output of a complex analytic than to have an operator manually assess the output of the analytic and then decide on the action. A common concern is that the ML used by an analytic makes the wrong recommendation, and the automated action that follows disrupts a business service.

Automation doesn’t need to be pervasive to be successful; you should focus on the use cases that have common resolutions in your organisation rather than trying to automate everything. This could be as simple as providing more enriched inputs for your analysts to assess by automating their triage process, but leaving them to make the decision on what the follow up action should be.
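As a rough sketch of that pattern, here is what "automate the triage, leave the decision" could look like. Everything in it is an assumption for illustration: the `Alert` structure, the `ASSET_OWNERS` lookup and the 0.8 threshold are made up, and none of it is a Splunk API.

```python
from dataclasses import dataclass, field

# Hypothetical asset inventory standing in for a real enrichment source.
ASSET_OWNERS = {"10.0.0.5": "payments-team", "10.0.0.9": "hr-team"}


@dataclass
class Alert:
    host: str
    score: float  # output of some ML-based analytic, assumed to be in [0, 1]
    context: dict = field(default_factory=dict)
    suggested_action: str = ""
    approved: bool = False  # only ever set by a human analyst


def enrich(alert: Alert) -> Alert:
    """Automated step: add context and a suggestion, but take no action."""
    alert.context["owner"] = ASSET_OWNERS.get(alert.host, "unknown")
    # The 0.8 threshold is an arbitrary illustrative value.
    alert.suggested_action = "isolate host" if alert.score >= 0.8 else "monitor"
    return alert


def analyst_decision(alert: Alert, approve: bool) -> Alert:
    """Manual step: the analyst confirms or rejects the suggestion."""
    alert.approved = approve
    return alert


alert = enrich(Alert(host="10.0.0.5", score=0.92))
print(alert.context["owner"], "-", alert.suggested_action)
alert = analyst_decision(alert, approve=True)
```

The point of the design is that `enrich` never changes `approved`: the automation makes the analyst's job quicker, but the follow-up action still needs a person to sign it off.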

So, what to do about it? Identify your Data Champions and get them involved in the assessment and triaging of the outputs of ML-based analytics, rather than aiming for full automation.

Bridging the operational gap

Many organisations run data science environments that are separate to their operational analytics environments. This is sometimes born out of data science investments originating from experimental projects, but also because the compute requirements for data science technology stacks have different profiles to those required by an operational analytics stack. Bringing these two environments closer together is important to be able to gain deeper operational insights from data science, but can often be challenging to achieve.

Expect to see more effort from vendors and customers this year to help to alleviate some of the integration hurdles between data science technologies and operational technologies.

There are ways to mitigate this lack of integration. You could use your data science stack as a true experimentation platform, to highlight techniques that might be successful in your operational stack, but perhaps aren’t as accurate as a scaled machine learning approach. Alternatively, you could consider integrating at the UI layer, or building some REST-based services on top of multiple platforms, so you can bring result sets back to users in a single place.

In addition to these technical challenges, it is also important to set well-posed business problems that you want AI or automation to solve. Without this framing, you are entering a research project with uncertain outcomes, which can be a massive time and money sink.

So, what to do about it? Having well-designed governance systems is a good way to protect yourself from technology silos and research projects. There is a gotcha here, though: don’t expect business leaders to be able to set the best direction. Trusting your Data Champions to govern at a lower, more technical level is a critical success factor when trying to avoid costly experiments with AI and automation. Placing responsibility (or freedom?) in the hands of those who really understand the data that is available, and the context of the systems that the data is used by, can really help drive innovation and adoption of technologies like AI and automation.

Keeping things simple

Keep it simple! Use of novel or complex techniques like LLMs should be secondary to keeping ML processing easy to understand. We used to have a section in one of our products titled 'explain it to me like I'm five' that I think should still be around - if it's too hard to explain in simple terms, then you might be safer choosing a simpler technique for processing your data.

Advances in technology and software libraries have democratised the ability to create complex analytics, which is a great feat. Perhaps more important than this, however, is that this democratisation has really drawn attention to the opaque processing of data. Going back to the start of my career, I can recall numerous examples where only one person within an organisation would know how a particular analytic worked, which is hugely risky - especially if that analytic is business critical!

So, what to do about it? Keep users at the heart of any AI or automation project with frequent feedback loops. If users get left behind, then you are probably making things too opaque. This can protect you from moving from a silo of tribal knowledge about how a particular analytic works to a slightly bigger silo of tribal knowledge about how a particular algorithm, library, framework or technique works.

Oversight

With the EU publishing the world's first act for regulating AI systems a few years ago, there is increasing scrutiny on the use and application of AI.

A large part of this stems from the complexity of some AI systems, with the explainability of AI being a crucial part of building trust in the outputs of complex systems. Providing the rationale behind decisions is really important, and I’d encourage you to check out this snippet from John Brockman’s seminar “Possible Minds”, where Stuart Russell speaks to some of the risks around widespread adoption of poorly crafted AI systems.

To compound these issues, if you are also processing personal data then you could be creating a system that contains or persists biases. The risk of this happening, however, is reasonably low for most Splunk use cases, even for those that use personal data. To explain this, it is probably best to look at the way Splunk is used, which is normally in a highly operational context such as in a security or network operations centre. This means that there is typically a human-in-the-loop for any analytical outputs from Splunk: whether that is a dashboard showing predicted IT outages or an alert that identifies a potential cyber attack, a person is required to triage this information before taking action. In addition to this, the actions that are ordinarily automated by our customers are common, everyday tasks like those described in our ebook ‘5 automation use cases for Splunk SOAR’ - running tasks such as enriching alerts or triaging a malware alert.

So, what to do about it? Even if you are not using AI or automations in risky scenarios, being able to have an open debate about data and how it is processed in your organisation is important.

If you can’t talk about how data is used within your organisation, how will you talk to a regulator about it?

Additionally, consider reporting on any automated processes that rely on data. Each report should detail: the data that is used, where it is sourced from (particularly if personal data is used), the decisions being made (especially if these decisions impact customers, employees or citizens), and the fail-safe conditions in case of errors, incorrect results or uncertainty in the process.
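If it helps to make this concrete, the reporting items above could be captured in a simple record per automated process. This is only a sketch: the `AutomationReport` class, its field names and the example values are all hypothetical, not a Splunk feature or a regulatory template.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class AutomationReport:
    """Hypothetical record describing one automated process."""
    name: str
    data_used: list = field(default_factory=list)
    data_sources: list = field(default_factory=list)
    uses_personal_data: bool = False
    decisions: list = field(default_factory=list)
    fail_safes: list = field(default_factory=list)

    def gaps(self) -> list:
        """Return the names of list sections that are still empty."""
        return [
            key for key, value in asdict(self).items()
            if isinstance(value, list) and not value
        ]


# Example values are invented for illustration.
report = AutomationReport(
    name="malware alert triage",
    data_used=["endpoint alerts"],
    data_sources=["EDR tool"],
    decisions=["escalate or close alert"],
)
print(report.gaps())  # sections that still need documenting
```

A check like `gaps()` is a cheap way to spot an automation that has gone live without anyone writing down its fail-safe conditions.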

Hype

So… what about Large Language Models (LLMs)? Don’t believe the hype. LLMs won’t replace Google any time soon, but they might help your kids write their school essays or help you draft an email to a customer. Although the reasoning provided by the LLMs used by ChatGPT seems convincing, it is hard to verify the accuracy of the answers the LLM gives. Additionally, it will take some time to optimise LLMs specifically for search - although Microsoft's $10bn investment could well speed that research up.

So… is causal AI going to be big? Believe some of the hype on this one. Causal AI is way more explainable than ML or deep learning, and therefore can provide better reasoning for a layperson than other techniques. It will be a while before it is mainstream, but expect to see some use cases creep into operational use this year, such as automated root cause analysis.

So… will we see malicious attacks on ML processes? Don’t believe the hype. The idea of poisoning models or data sets by malicious actors is like claiming that the school bully did your homework for you badly, just to get you in trouble. If a malicious actor understands an organisation's advanced analytical processes better than that organisation, then that malicious actor should be applauded - they clearly have a lot of time to kill analysing data and processes… It’s definitely quicker and easier to fire off some phishing emails and use mimikatz to harvest credentials…

Right, on that note - I’m off to use ChatGPT to write my next blog post, while I brush up on my knowledge of causal inference. Plus I’ll be blaming any errors in my next machine learning project on a malicious actor corrupting my model, rather than my own ineptitude…

Happy Splunking!

Read more on other topics from our Predictions 2023 series here.
