AI & Sustainability: Better Together with the Splunk Sustainability Toolkit

Co-Authors: A Dream Team

Sophie Dockstader, Sustainability SME | PS Security, Marc Thomas, Senior Sales Engineer, Javier Sanz, Senior Solutions Engineer, Carrie Christopher, Splunk Senior Director of ESG and Sustainability

Artificial Intelligence (AI) has significant potential to tackle sustainability challenges. For example, it could help mitigate five to ten percent of global greenhouse gas emissions by 2030. That’s the equivalent of the total annual emissions of the European Union (EU), according to a report from Google and Boston Consulting Group.

On the other hand, the growth and use of AI can pose significant sustainability challenges. Read on to learn how the Splunk Sustainability Toolkit can demonstrate how AI & Sustainability can be “Better Together” in the area of data centers.

The Growth of AI Creates Unprecedented Demand for Data Center Power

Bloomberg Intelligence projects the generative AI market will grow to $1.3 trillion by 2032 from a market size of approximately $40 billion in 2022. This development results in AI-ready data centers being built in unprecedented numbers, and at far higher compute densities than ever before. DataCenterKnowledge forecasts a 50% increase for the power footprint of data centers by 2025 whereas data center traffic is expected to increase 50 times by 2024 compared to 2010 according to Statista. Data centers as critical infrastructure need to pack more punch per rack to keep up with this projected demand.

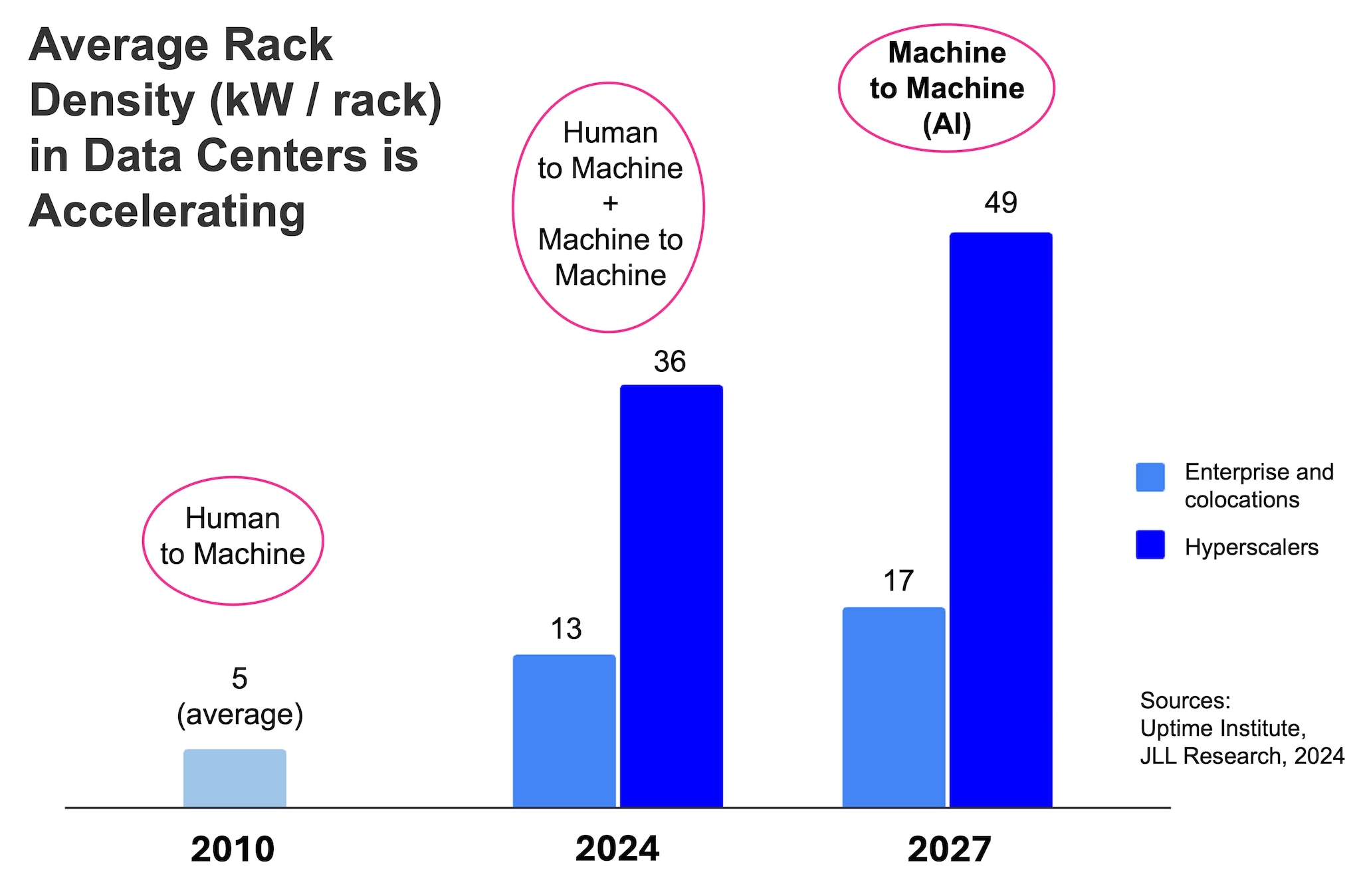

A good metric for data center power is rack density, which refers to the power drawn by the computing equipment installed and operated within a single server rack (kW/rack).

As the chart above shows, average data center rack density is rapidly accelerating. Initially, data centers had to cope with “simple” “human to machine” data such as text, images, audio, and video files created by humans. In the very near future, a much higher rack density will be needed to tackle “machine to machine” workloads, such as generative AI, which generally require a more densely clustered and performance-intensive IT infrastructure than the framework found in older “human to machine” standard data centers. For example, the workload of an AI image generator application requires much more power than a text generation application, according to the Data Centers 2024 Global Outlook by Jones Lang LaSalle.

Taking these developments into account, the data center company Digital Realty launched a high-density colocation service for workloads of up to 70kW per rack. While a good first step, it does not address the projected demand, since AI and high-performance computing are expected to require rack densities of up to 100kW per rack.

Meta plans to spend billions of dollars on servers and data centers to support AI. The company even increased 2024 capex forecasts to $35bn-$40bn to help address its AI efforts. Microsoft, Alphabet and Amazon invested a total of $40bn in the expansion of their data centers from January to March 2024 alone according to The Economist.

The Dark Side of AI: A Significant and Soaring CO2 Footprint

According to Statista, one month of training a large language model (LLM) like GPT-3 uses about 1,300 megawatt hours (MWh) of electricity.

This is more than the average electricity consumption of almost 4,500 German households in the same time period.

(One German household consumes on average approximately 3,500 kWh per year = 292 kWh per month according to CleanEnergyWire)
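The comparison above can be reproduced with a few lines of arithmetic. This is a minimal sketch that uses only the figures quoted in the text; the function name is illustrative and not part of any toolkit.

```python
# Figures quoted in the text above.
TRAINING_ENERGY_MWH = 1_300      # one month of LLM training (Statista)
HOUSEHOLD_KWH_PER_YEAR = 3_500   # average German household (CleanEnergyWire)


def household_equivalents(training_mwh: float, household_kwh_per_year: float) -> float:
    """Number of households whose monthly consumption adds up to the training energy."""
    household_kwh_per_month = household_kwh_per_year / 12
    training_kwh = training_mwh * 1_000  # convert MWh to kWh
    return training_kwh / household_kwh_per_month


if __name__ == "__main__":
    n = household_equivalents(TRAINING_ENERGY_MWH, HOUSEHOLD_KWH_PER_YEAR)
    print(f"Equivalent to about {n:,.0f} households for one month")
```

The result lands just under 4,500 households, in line with the figure above; the small difference from dividing by 292 kWh comes from rounding the monthly value.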

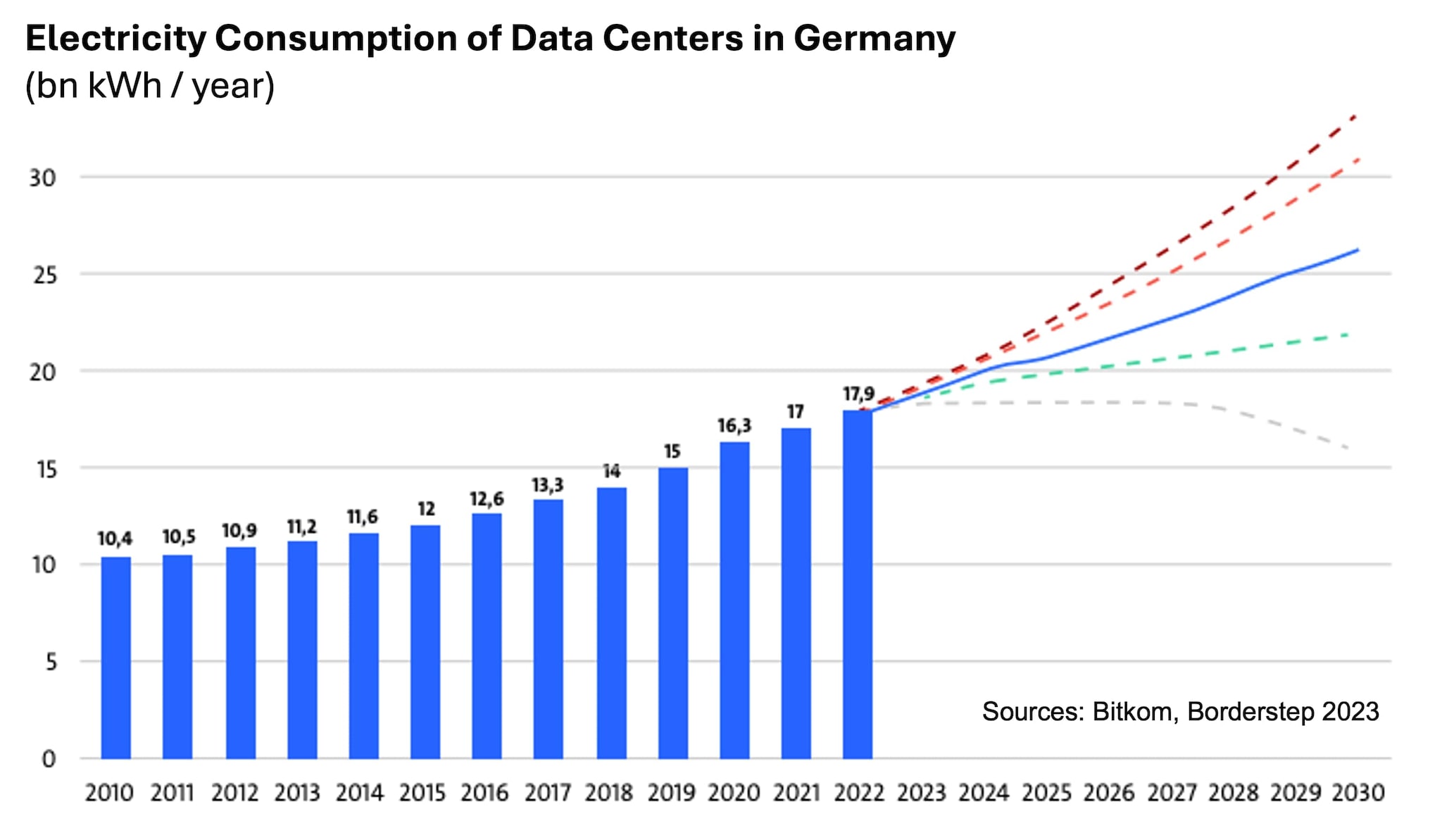

Today, data centers worldwide consume 1-2% of overall power and their carbon footprint exceeds that of the aviation industry according to a Goldman Sachs and World Economic Forum analysis. But this percentage will likely increase to 3-4% by the end of the decade, forecasts Goldman Sachs. In 2022, Germany’s data centers gobbled up 18bn kWh of electricity - an increase of 70% within 10 years according to Bitkom. Regardless of forecasting models, data center electricity consumption continues to increase.

The International Energy Agency Electricity Report estimates that data center electricity consumption globally was around 460 terawatt hours (TWh) in 2022 and could increase to between 620 and 1,050 TWh in 2026. This increase is equivalent to the energy demands of Sweden or Germany, respectively.

Hand in hand with this increased electricity consumption goes a higher CO2 footprint. For example, Microsoft’s CO2 emissions increased by almost 29% compared to last year, mainly caused by new AI-ready data centers according to a Handelsblatt report.

Schneider Electric predicts substantial energy consumption for AI workloads globally: By 2028, AI power consumption could more than quadruple to nearly 19GW. Almost 14 additional nuclear power plants would be needed to tackle this energy challenge.

As a result of these developments, data center sustainability reporting has become mandatory from January 2024 onwards, based on the EU’s Corporate Sustainability Reporting Directive (CSRD). The Energy Efficiency Act (EnEfG) is a new data center specific regulation in Germany which requires an increasingly lower Power Usage Effectiveness (PUE). In the quest to lower the CO2 footprint, data center operators need to seek specialized data-driven solutions to propel optimization and efficiency gains.

Operators must act now or risk falling foul of new legislation.

Colm Shorten, Global Strategy & Innovation at Jones Lang Lasalle.
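For readers less familiar with PUE, the metric is commonly defined as total facility energy divided by the energy delivered to IT equipment, so a value closer to 1.0 means less overhead for cooling, power distribution and similar. The sketch below illustrates that ratio; the function names and the meter readings are hypothetical and chosen only for illustration.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy that does not reach IT equipment."""
    if pue_value < 1:
        raise ValueError("PUE cannot be below 1.0")
    return 1 - 1 / pue_value


if __name__ == "__main__":
    # Hypothetical meter readings for one day.
    value = pue(total_facility_kwh=15_500, it_equipment_kwh=10_000)
    print(f"PUE: {value:.2f}, overhead share: {overhead_share(value):.1%}")
```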

Tackling the CO2 and Energy Cost Challenge with Splunk’s Sustainability Toolkit

The Splunk Sustainability Toolkit, an app for Splunk customers, equips organizations with capabilities to gain deep insights into their carbon footprint and as such empowers them to take the necessary actions toward their carbon neutrality goals. On the one hand, it provides big-picture visualizations across cloud / multi-cloud, hybrid and on-premise environments via an executive dashboard. On the other hand, it empowers organizations to deep-dive into emission hotspots in real-time and take the required action based on data.

The sweet spot of the Splunk Sustainability Toolkit is IT sustainability focusing on data center analytics and efficiencies.

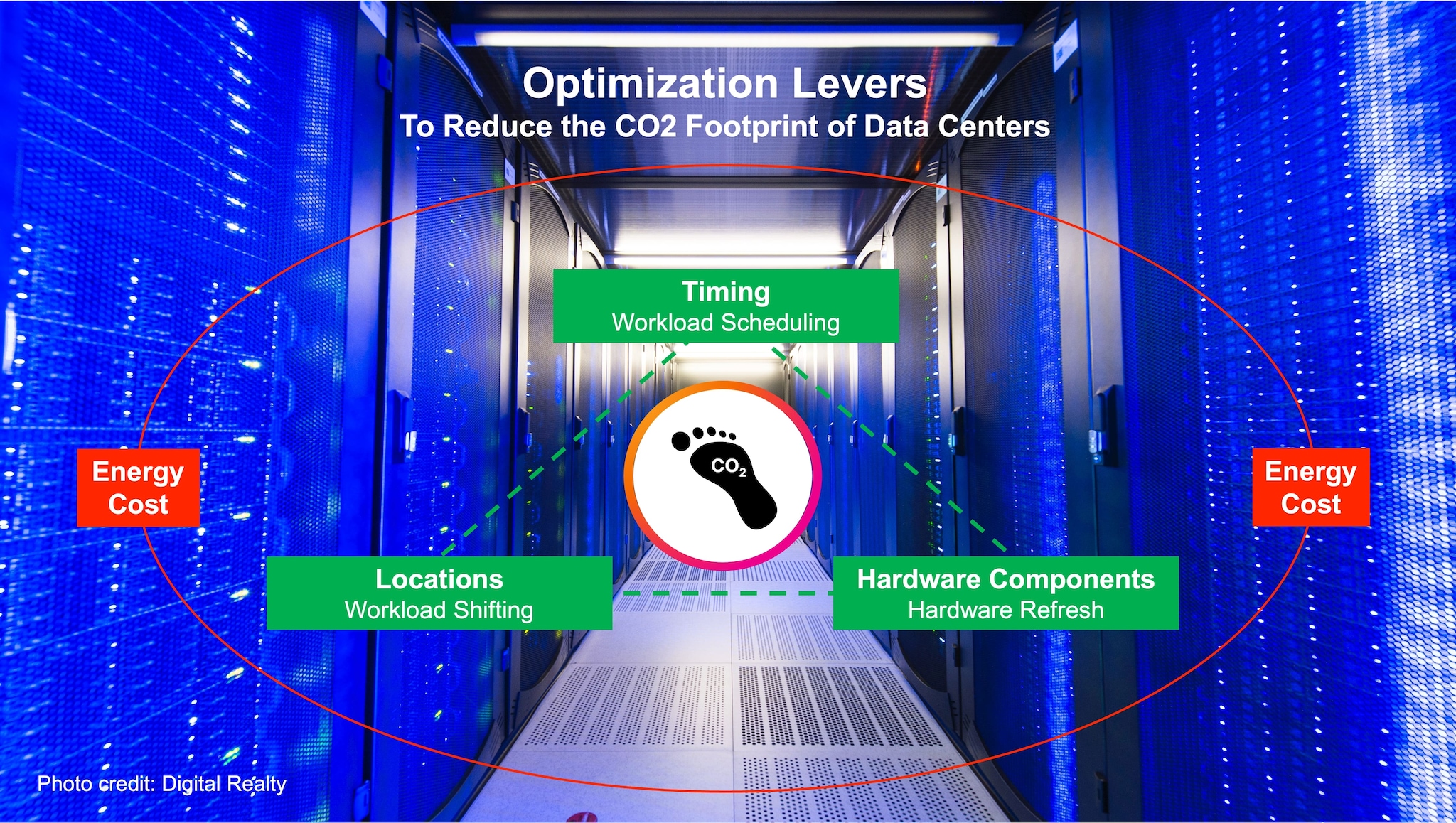

Here are three ways the Splunk Sustainability Toolkit can support the CO2 challenge of AI sustainability in data centers:

1. Optimizing Three Key Levers + Energy Cost

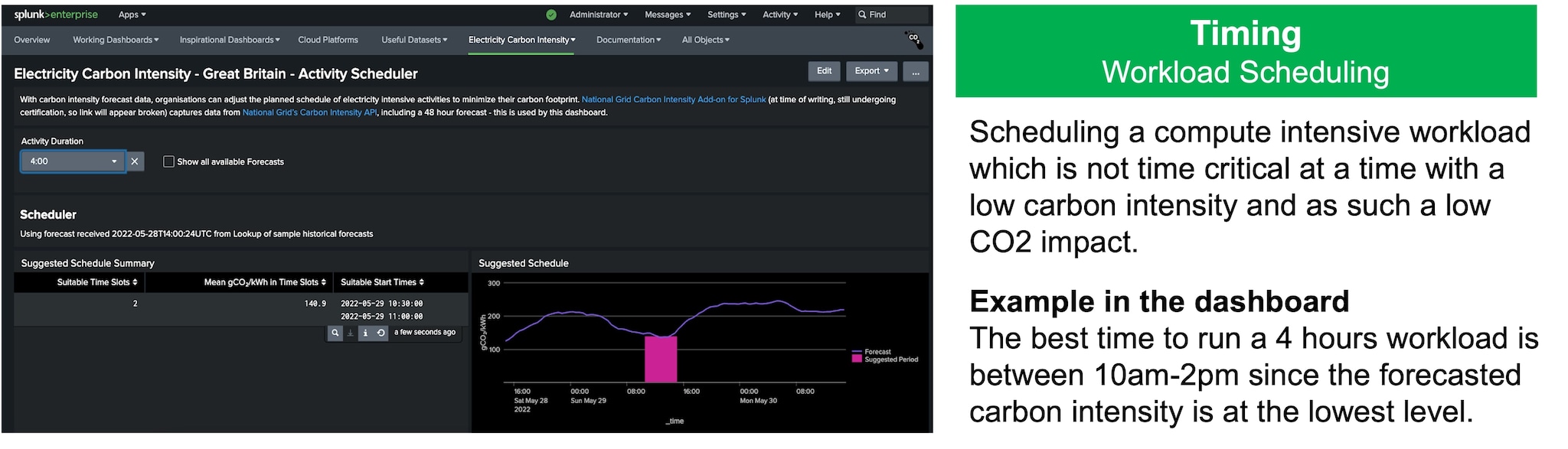

The basis for the timing and location levers is a set of out-of-the-box integrations and data normalization with Carbon Intensity APIs, as follows. Region-specific data can be easily onboarded via a supporting Splunk Add-On for Electricity Carbon Intensity.

- Real-time data and decision support: The toolkit supports integration and data normalization of real-time carbon intensity metrics from Electricity Maps, which provides access to 24x7 grid carbon intensity for 160+ countries in real-time, and as a forecast for the next 24 hours.

- Near-future predictive analytics and decision support: On a national level, the Carbon Intensity API from UK’s National Grid can port to the toolkit, providing near-term predictive trends that show the regional carbon intensity of the electricity system in Great Britain 96+ hours ahead of real-time.

- Customization for customer-specific data and analytics: Ability to easily customize the toolkit to integrate with other carbon intensity data sources, including open source APIs, with flexible macros and lookups to adapt the real-time carbon intensity factor based on your organization's needs.
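To illustrate how a carbon intensity forecast can inform the timing lever, the sketch below picks the contiguous time window with the lowest average carbon intensity for a deferrable job. It is not the toolkit's implementation: the function name and the hourly forecast values (in gCO2/kWh) are hypothetical, and in practice the forecast would come from a carbon intensity data source such as those listed above.

```python
from __future__ import annotations

from typing import Sequence


def greenest_window(forecast: Sequence[float], duration_hours: int) -> tuple[int, float]:
    """Return (start_hour, mean_intensity) of the lowest-carbon contiguous window."""
    if duration_hours <= 0 or duration_hours > len(forecast):
        raise ValueError("duration must be between 1 and the forecast length")

    # Sliding window: keep a running sum instead of re-summing every window.
    window_sum = sum(forecast[:duration_hours])
    best_start, best_sum = 0, window_sum
    for start in range(1, len(forecast) - duration_hours + 1):
        window_sum += forecast[start + duration_hours - 1] - forecast[start - 1]
        if window_sum < best_sum:
            best_start, best_sum = start, window_sum
    return best_start, best_sum / duration_hours


if __name__ == "__main__":
    # Hypothetical hourly forecast in gCO2/kWh, starting at hour 0.
    hourly_forecast = [420, 390, 350, 300, 260, 240, 250, 310, 380, 430, 450, 440]
    start, mean_intensity = greenest_window(hourly_forecast, duration_hours=3)
    print(f"Start the 3-hour job at hour {start} (mean {mean_intensity:.0f} gCO2/kWh)")
```

With this sample forecast, the lowest-carbon three-hour window starts at hour 4.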

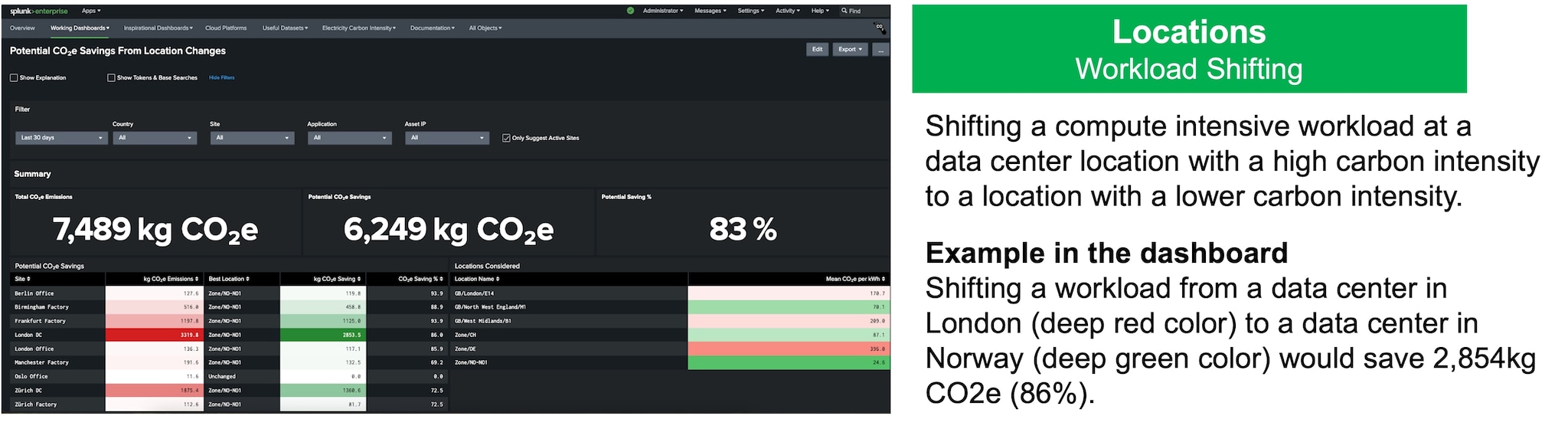

According to the Data Centers 2024 Global Outlook by Jones Lang LaSalle, power requirements for the three stages of generative AI (model creation, tuning and inference) vary significantly. Training and tuning workloads for AI are not typically latency-sensitive. Therefore, operators running AI-ready data centers can be more flexible in site selection when running compute-heavy workloads. They can select locations with a lower carbon intensity and thereby lower the CO2 footprint.
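The location lever comes down to simple arithmetic: emissions equal energy consumed multiplied by the grid's carbon intensity at the chosen site. The following sketch compares candidate sites for the same workload. The site names, intensity values and the 10,000 kWh job size are all hypothetical, and the functions are illustrative rather than part of the toolkit.

```python
from __future__ import annotations


def job_emissions_kg(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """CO2 emissions in kg for a job, given grid carbon intensity in gCO2/kWh."""
    return energy_kwh * intensity_g_per_kwh / 1_000


def lowest_carbon_site(energy_kwh: float, site_intensity: dict[str, float]) -> tuple[str, float]:
    """Return (site, emissions_kg) for the site where the job emits the least CO2."""
    emissions = {
        site: job_emissions_kg(energy_kwh, intensity)
        for site, intensity in site_intensity.items()
    }
    best_site = min(emissions, key=emissions.get)
    return best_site, emissions[best_site]


if __name__ == "__main__":
    # Hypothetical grid carbon intensities in gCO2/kWh.
    sites = {"site_a": 380.0, "site_b": 45.0, "site_c": 210.0}
    site, kg = lowest_carbon_site(energy_kwh=10_000, site_intensity=sites)
    print(f"Run the training job at {site}: about {kg:,.0f} kg CO2")
```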

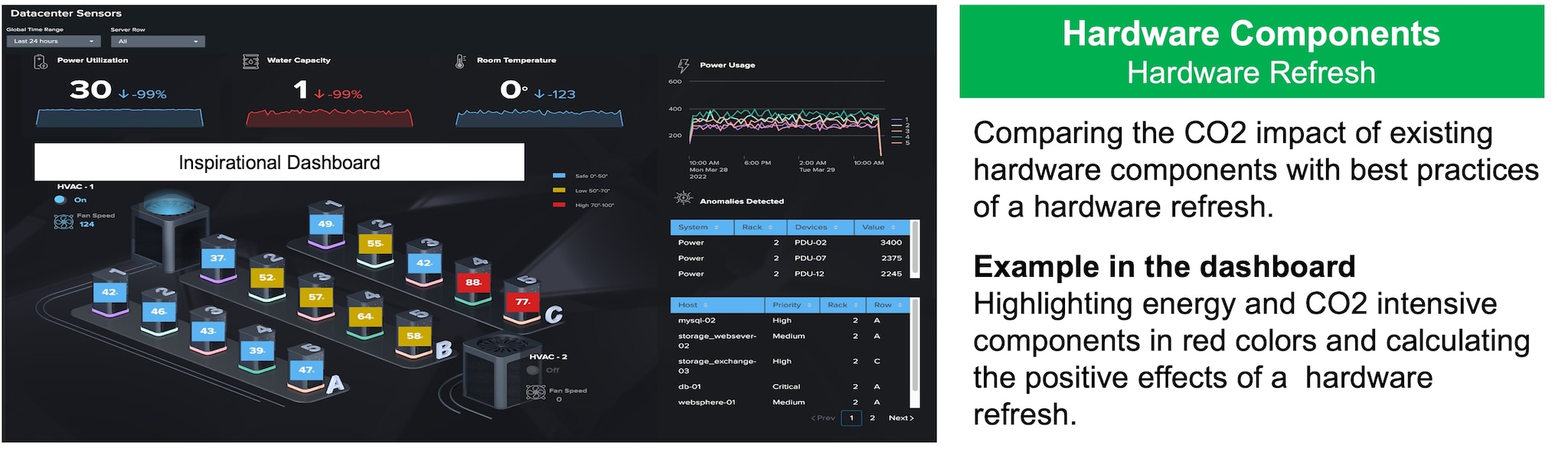

At first glance, hardware refresh cycles are broadly lengthening rather than shortening - up to five years compared to three years in 2015, according to a 2022 analysis by the Uptime Institute. One reason is that Power Usage Effectiveness (PUE) has stagnated in recent years (2022: average PUE 1.55).

However, with the seismic shift brought by AI, rising energy costs, heightened scrutiny, new regulations and sustainability reporting requirements, data center operators are being pushed toward better, more energy-efficient infrastructure. Accordingly, 64% of the respondents of a global survey expect “improved sustainability practices” from a two-year refresh cycle, according to a study by Forrester Consulting. With more efficient equipment, data centers can use less hardware overall, saving precious rack space and reducing their CO2 footprint and energy costs.

Hardware refresh analytics is expected to be an upcoming feature of the Splunk Sustainability Toolkit.

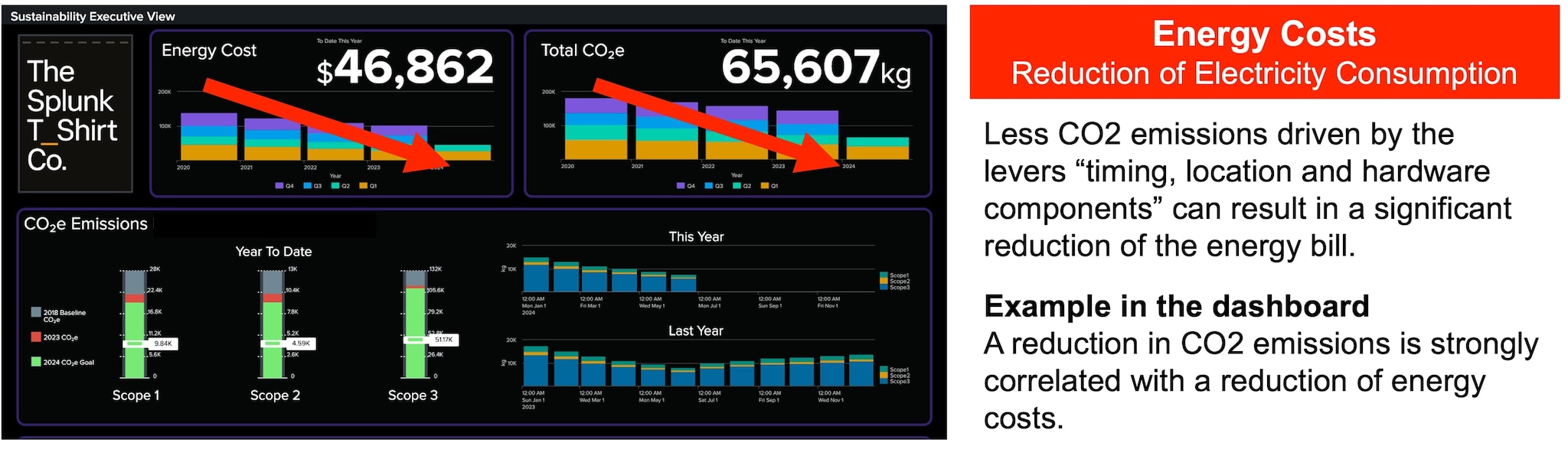

An unbelievable 11 million EUR was the yearly energy cost of a German data center in 2022.

Source: Bitkom, average power consumption: 5 MW, yearly electricity consumption: 43,800 MWh, electricity price: 24.6 cents / kWh

Just a few percentage points less electricity consumption, driven by the above-mentioned levers “timing, location and hardware components”, can result in a significant reduction of the enormous energy bill. This also applies to data center operators that obtain 100% green electricity from renewable sources or pay to offset emissions with green carbon credits. Consuming less green energy reduces costs as well.
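The 11 million EUR figure follows directly from the Bitkom numbers cited above, and the same arithmetic shows what a small reduction is worth. In this sketch the 3% reduction is an assumed value for illustration only, not a figure from the source.

```python
HOURS_PER_YEAR = 8_760

# Figures from the Bitkom source cited above.
AVG_POWER_MW = 5
PRICE_EUR_PER_KWH = 0.246


def yearly_energy_mwh(avg_power_mw: float) -> float:
    """Yearly electricity consumption for a constant average power draw."""
    return avg_power_mw * HOURS_PER_YEAR


def yearly_cost_eur(energy_mwh: float, price_eur_per_kwh: float) -> float:
    """Yearly electricity cost in EUR."""
    return energy_mwh * 1_000 * price_eur_per_kwh


def savings_eur(cost_eur: float, reduction_pct: float) -> float:
    """Cost saved when consumption drops by the given percentage."""
    return cost_eur * reduction_pct / 100


if __name__ == "__main__":
    energy = yearly_energy_mwh(AVG_POWER_MW)           # 43,800 MWh
    cost = yearly_cost_eur(energy, PRICE_EUR_PER_KWH)  # roughly 10.8 million EUR
    saved = savings_eur(cost, reduction_pct=3)         # assumed 3% reduction
    print(f"Energy: {energy:,.0f} MWh, cost: {cost:,.0f} EUR, 3% saving: {saved:,.0f} EUR")
```

Under these assumptions, a 3% reduction is worth a little over 320,000 EUR per year.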

2. Granularity Across Key Software Applications and Hardware Components

The Splunk Sustainability Toolkit can allow for very granular CO2 analysis, from application level to specific data center hardware components, as shown in the dashboards below.

Configurations for performance improvements and ease of customization include the following:

- Ability to support asset analysis using the customizable asset lookup files, which include attributes to define assets (such as the server’s role, application, and embodied CO2) and sites (location and source of carbon intensity data). The toolkit includes a suite of sample lookup files.

- Asset energy data and electricity CO2 data can be summarized as metrics, enabling custom point-and-click analysis in the Splunk Analytics Workspace, which can lead to significant performance improvements.

- All-in-one location to adjust energy or carbon intensity data source inputs with flexible macros, which reflect changes across all dashboards and background searches.

This granular understanding of energy and CO2 hotspots enables data-driven decisions for targeted improvements and cost savings.
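Conceptually, this kind of per-application analysis joins energy readings with an asset lookup and a per-site carbon intensity. The sketch below shows that join in plain Python rather than in Splunk's search language. All host names, field names and values are hypothetical and do not reflect the toolkit's actual lookup schema.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical asset lookup: host -> application and site.
ASSET_LOOKUP = {
    "srv-01": {"application": "ai-training", "site": "site_a"},
    "srv-02": {"application": "ai-training", "site": "site_b"},
    "srv-03": {"application": "web", "site": "site_a"},
}

# Hypothetical carbon intensity per site in gCO2/kWh.
SITE_INTENSITY = {"site_a": 400.0, "site_b": 50.0}


def co2_by_application(
    readings: list[tuple[str, float]],
    assets: dict[str, dict[str, str]],
    intensity: dict[str, float],
) -> dict[str, float]:
    """Sum CO2 emissions in kg per application from (host, energy_kwh) readings."""
    totals: dict[str, float] = defaultdict(float)
    for host, energy_kwh in readings:
        asset = assets[host]  # enrich the reading with application and site
        totals[asset["application"]] += energy_kwh * intensity[asset["site"]] / 1_000
    return dict(totals)


if __name__ == "__main__":
    # Hypothetical energy readings as (host, kWh).
    energy_readings = [("srv-01", 120.0), ("srv-02", 80.0), ("srv-03", 30.0), ("srv-01", 100.0)]
    for app, kg in co2_by_application(energy_readings, ASSET_LOOKUP, SITE_INTENSITY).items():
        print(f"{app}: {kg:.1f} kg CO2")
```

Keeping the asset attributes in a separate lookup, as the toolkit's lookup files do, means a server can be reassigned to another application or site without touching the energy data itself.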

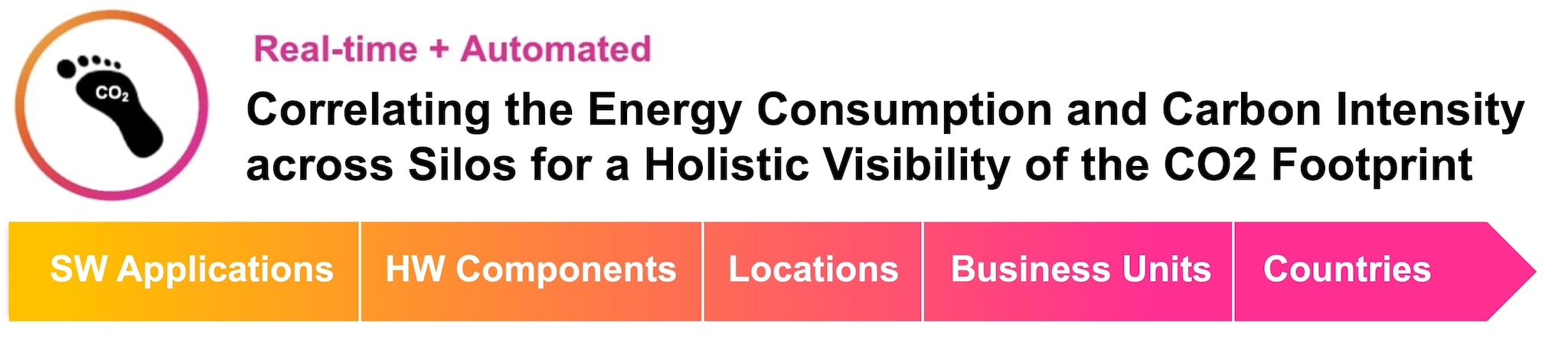

3. Unified View Across All Key Areas in Data Centers: Real-Time + Automated

One of Splunk’s core strengths is the ability to analyze data from almost any source, of almost any type and time scale, and to do this in real time. By ingesting and correlating disparate data sources across software applications, hardware components, locations, business units and countries, Splunk can provide an all-in-one, transparent view. This equips organizations with deep insights into the CO2 footprint of their data centers. As such, customers are empowered with real-time data sets that help them make smart decisions that can support their sustainability goals.

Conclusion

Sustainability has matured from a “nice-to-have”, feel-good ESG initiative to a numbers- and data-driven imperative that often directly ties to organizations’ carbon reduction goals and strategic business objectives. Transparency of real-time data and analytics is critical for monitoring, managing and reducing the energy consumption and CO2 footprint of AI in data centers. The Splunk Sustainability Toolkit aims to show that AI & Sustainability can be “Better Together” in the area of data centers. The upcoming V3 version will dig even deeper into this topic. Stay tuned!

About Splunk

The world’s leading organizations rely on Splunk, a Cisco company, to continuously strengthen digital resilience with our unified security and observability platform, powered by industry-leading AI.

Our customers trust Splunk’s award-winning security and observability solutions to secure and improve the reliability of their complex digital environments, at any scale.