Technical Review of Splunk AI Assistant for SPL

The Splunk AI Assistant for SPL (SAIAS) is a generative AI-powered assistive app that accelerates user day-to-day tasks. SAIAS accomplishes this by generating SPL from a natural language prompt and increasing the user's knowledge via explanations of SPL, product concepts, and functionality for Splunk products. This blog includes a high level summary of the system which supports the AI model, the guardrails used to protect the model, and how we evaluate the system’s capabilities for SPL generation.

Solution Overview

Datasources

The model is fine-tuned using a combination of manually created and synthetically-generated data, Splunk documents, Splunk training materials, and other Splunk resources. To maximize coverage and diversity of our SPL examples, we clustered and sampled sequences of SPL commands from our SPL queries.

Retrieval Augmented Generation (RAG)

The AI Assistant leverages a RAG based approach to improve model performance. We have indexed a diverse set of SPL syntax with a vector database to reference multiple scenarios including IT, observability, and security. Every time a user executes a query, our system classifies the intent of the query, searches for previous similar requests, and ranks the retrieved examples to determine which subset to show the Large Language Model (LLM). Our evaluation showed that providing these examples to pretrained LLM’s improved SPL command syntax which led to improved parsibility.

Model Fine-tuning

Over the past year there has been an explosion in the number of open source LLMs which have demonstrated impressive reasoning performance to parameter count ratios. We evaluated several pretrained models’ next token prediction results for SPL completion to determine a subset of open source models which have SPL in their training corpuses. For fine-tuning, we created a corpus which leveraged both synthetically generated natural language descriptions of SPL queries and a combination of open source conversation and code instruct datasets. We observed that our fine tuned model, when integrated with chain of thought and RAG system, reduced syntax mistakes and references to SQL analogs, leading to increased execution accuracy.

Guardrails

Guardrails are designed to help improve quality, ethics, security and general alignment with other AI trustworthy principles. In SAIAS, we currently have three input guardrails in place covering:

- Language Guardrail: The model detects the natural language(s) provided in the prompt, and filters out requests to the model for non-supported languages. Four languages supported currently in SAIAS are: English, French, Spanish, and Japanese.

- Gibberish Text Guardrail: Gibberish refers to nonsensical or meaningless language or text that lacks coherence or any discernible meaning.

- Prompt Injection Guardrail: Prompt injection attacks manipulate language models by inserting or altering prompts to trigger harmful or unintended responses.

Note: System guardrails do not do the following:

- Guarantee the correctness of the answer - for example the SPL generated might not be efficient, might have grammar errors, or might be wrong in the context. These are referred to as hallucinations.

- Does not replace the end user agreements and disclaimer about user’s responsibilities of interacting with the system.

Latency

When it comes to LLMs, latency can significantly impact a user’s experience. The latency is directly impacted by the length of the chat history, user’s request, and the input from RAG. The results are streamed back to the user with initial tokens appearing in a few seconds. We expect users to iterate on a query to obtain the desired output in a single chat. Once you have obtained the desired output, we suggest starting a new conversation to reduce the context history which will improve the system's throughput.

Measuring Model Quality

A key challenge to producing an AI assistant for generating a structured query language is balancing the near zero tolerance for syntax errors while simultaneously capturing the user’s intent with a well structured query. When evaluating the AI Assistant’s output, we focus on three categories:

- Token Similarity: Measures how closely the generated SPL tokens match a known reference query. This metric helps understand if a query may be useful even if the query cannot execute in a given splunk environment (for example if the model produces place holders for implicit / unspecified values). Similarly, we check for coverage of fields and indices when the information is specified in the input.

- Structural Similarity: Compares sequences of SPL commands between a reference and candidate SPL queries. This comparison captures a notion of SPL structural similarity that gives insight into the efficiency and readability of a proposed query when using high quality SPL references.

- Execution Accuracy: Execution accuracy measures how well the the results of an SPL query match their expected output when executed on the intended index. This metric assesses the LLM’s ability to generate accurate SPL queries.

| Model | Bleu Score | Matched Source - Index, input, hyperlinks | Matching Sourcetype | Command Sequence Normalized Edit Distance (lower is better) | Execution Accuracy |

| GPT 4 - Turbo | 0.313 | 52.10% | 65.10% | 0.5683 | 20.40% |

| Llama 3 70B Instruct | 0.300 | 42.25% | 78.17% | 0.6477 | 8.40% |

| Splunk SAIA System | 0.493 | 82.40% | 85.90% | 0.4104 | 39.30% |

Prompting Guidance

Write SPL

When writing a query to the Assistant you should use directives rather than questions. Our examples are indexed based on descriptions of SPL queries rather than the intent. For this reason it is also important to be verbose to avoid implicit information. For example, instead of asking ‘Find all overprovisioned instances’ (which may have a different meaning for your organization than another), try searching for ‘Find all EC2 instances with less than 20 percent average cpu utilization or memory usage less than 10 percent.’ This will give the model critical information about what index may be reasonable and select examples which focus on the structure of your desired query when generating its response. Similarly, specify indices, source types, fields, and values with hyphens or quotations to help mitigate hallucinated information. If you are not sure which indices you have access to, try asking “List available indices.” In many cases the structure of the SPL will be correct however; the model may assume the existence of fields or flags that may need to be replaced with an intermediate computation.

When conversing with the Assistant to iterate on a query, try using complete sentences and including the prefix ‘‘update the previous query by.’ This will improve the likelihood the model will edit a previous query instead of trying to generate the query using new examples. Finally, if the model’s responses no longer seem relevant to the user’s request after multiple chat iterations we suggest starting a new conversation to help remove unnecessary turns and previous erroneous model outputs.

Explain SPL

The “Explain SPL” tab takes in any parsable SPL and generates a natural language description to explain the SPL in detail. Make sure to only enter SPL in the prompt window. If specifying variables use double angle brackets ‘<<variable>>’.

Tell me about (QnA)

When asking natural language questions it is important to make sure your text is clear and meaningful, avoiding any gibberish or nonsensical content, as well as grammatical errors or syntactical abnormalities. This helps maintain the quality of a given conversation. Additionally, it is crucial to avoid including any manipulative language intended to provoke harmful or unintended responses, as this can compromise the integrity and safety of the system. If any of the guardrails are triggered the assistant will respond with an error message.

Language Support

Currently, we provide responses in only four languages: English, French, Spanish, and Japanese. Text in other languages will not be supported, so ensure that your input is in one of these supported languages. This limitation helps us maintain high-quality interactions and manage the system effectively.

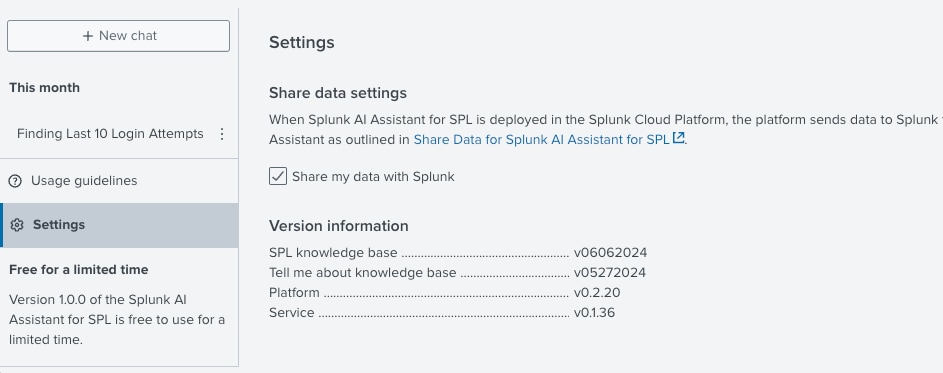

Data Privacy

SAIAS collects different data for research and development purposes depending on whether you have opted out of data sharing when the app is installed.

If the EULA agreement is signed and sharing is enabled, the collected pieces of data can be found in our data collection blog. If you choose to opt out, data collection for research and development purposes stops going forward, but previously collected data remains.

Next Steps

The Splunk AI Assistant for SPL is generally available on Splunkbase for use with the Splunk Cloud Platform on AWS. If you want to learn more about the user value of Splunk AI Assistant for SPL, read our product blog. For more information on how to use this app, refer to the documentation. To get started with this app today, visit Splunkbase.

Related Articles

About Splunk

The world’s leading organizations rely on Splunk, a Cisco company, to continuously strengthen digital resilience with our unified security and observability platform, powered by industry-leading AI.

Our customers trust Splunk’s award-winning security and observability solutions to secure and improve the reliability of their complex digital environments, at any scale.