Generative AI for Metrics in Observability

The AI Assistant in Observability Cloud (the “AI Assistant”, in private preview¹) provides a natural language interface for observability data sources and workflows. SignalFlow is the core analytics engine in Splunk Observability Cloud, as well as a programming language and library, designed for real-time processing and analysis of metrics. It lets users express computations that are applied to large volumes of incoming data streams and generate insights in the form of metrics, charts, and detectors. In an accompanying blog post, we provided an overview of the AI Assistant's design and architecture, in particular how we adapted the agent pattern to the observability domain. This post focuses specifically on the challenges and methods involved in using large language models (LLMs) to generate SignalFlow programs.

SignalFlow grammar is modeled on Python grammar and includes built-in functions for data retrieval, output, and alerting. To start, let's look at a simple SignalFlow program:

```
data('cpu.utilization',
     filter=filter('host', 'example-host')).mean(over='5m').publish()
```

This program first retrieves the data points for the “cpu.utilization” metric, filtered so that only data points whose “host” dimension equals “example-host” are included in the computation. Next, it computes the mean of the data points in the stream over a 5-minute rolling window. Finally, the publish() call instructs the analytics system to output the result. Note that function calls and method chaining work much as they do in Python.
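To make the semantics concrete, here is a minimal Python sketch of what the example program computes for a single host: keep only points whose host dimension matches, then average over a trailing 5-minute window. It is a conceptual illustration under simplifying assumptions (a single time series, timestamps in seconds, a window containing points newer than t - 300 s), not how SignalFlow's streaming engine is implemented; the `Point` type and `rolling_mean` function are hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Point:
    timestamp: int  # seconds since epoch (assumed unit)
    host: str       # value of the "host" dimension
    value: float


def rolling_mean(points: list[Point], host: str, window_seconds: int = 300) -> list[tuple[int, float]]:
    """Filter to one host, then emit the mean over a trailing time window at each point."""
    window: deque[Point] = deque()
    total = 0.0
    results = []
    for p in sorted((p for p in points if p.host == host), key=lambda p: p.timestamp):
        window.append(p)
        total += p.value
        # Evict points that have fallen out of the trailing window.
        while window[0].timestamp <= p.timestamp - window_seconds:
            total -= window.popleft().value
        results.append((p.timestamp, total / len(window)))
    return results
```

The filter runs before the averaging, mirroring the order of `data(..., filter=...)` followed by `.mean(over='5m')` in the SignalFlow program.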

Challenges and Methods

Among generative AI problems, code generation is particularly difficult because a single incorrect character can cause a syntax error. Generating code in a niche language like SignalFlow is harder still: very few models (open- or closed-source) have any knowledge of SignalFlow, and whatever knowledge they do have can be diluted by information about Python. As a result, even a model fine-tuned for code generation would need significant further customization to produce high-quality SignalFlow.

Methods for tailoring an LLM's output to a specific task rely on augmenting the model with additional examples, typically input-output pairs (e.g., question-answer or sentence-translation). Prompting describes the general relationship between input and output, perhaps with a few fixed examples. Retrieval augmented generation (RAG) refines this by making the provided examples depend on the input. A common implementation runs a semantic similarity search with the user's prompt against a data source, then includes the most relevant retrieved examples in the prompt, giving the model contextually relevant information. Fine-tuning goes further by training the model on the additional examples, adjusting its internal weights to make it more proficient at the desired task. RAG adds inference cost because the retrieved examples increase the number of input tokens on every request; fine-tuning requires significant up-front effort but offers long-term benefits by embedding task-specific knowledge in the model itself. Both RAG and fine-tuning demand a large and diverse set of high-quality examples.
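The difference between plain prompting and RAG can be sketched in a few lines of Python. Both functions below build a prompt from an instruction and example pairs; the only change is that `rag_prompt` chooses its examples per question. As a deliberately simple stand-in for semantic search, this sketch ranks examples by word-overlap (Jaccard) similarity rather than embeddings; the example pairs, instruction text, and function names are hypothetical. Fine-tuning has no counterpart here, since it changes the model's weights rather than the prompt.

```python
import re

# Hypothetical question-SignalFlow pairs standing in for a curated example set.
EXAMPLES = [
    ("What's the average CPU utilization for example-host over the past 5 minutes?",
     "data('cpu.utilization', filter=filter('host', 'example-host')).mean(over='5m').publish()"),
    ("Show total CPU utilization across all hosts",
     "data('cpu.utilization').sum().publish()"),
    ("How much free memory does each host have?",
     "data('memory.free').publish()"),
]

INSTRUCTION = "Translate the question into a SignalFlow program.\n\n"


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity: |A & B| / |A | B|."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def format_examples(pairs: list[tuple[str, str]]) -> str:
    return "\n\n".join(f"Q: {q}\nA: {p}" for q, p in pairs)


def static_prompt(question: str, k: int = 2) -> str:
    # Plain few-shot prompting: the same k examples for every question.
    return INSTRUCTION + format_examples(EXAMPLES[:k]) + f"\n\nQ: {question}\nA:"


def rag_prompt(question: str, k: int = 2) -> str:
    # RAG: rank the examples by similarity to this question and keep the top k.
    ranked = sorted(EXAMPLES, key=lambda ex: jaccard(question, ex[0]), reverse=True)
    return INSTRUCTION + format_examples(ranked[:k]) + f"\n\nQ: {question}\nA:"
```

For a question about free memory, `rag_prompt` surfaces the memory example, while `static_prompt` always shows the first examples in the list regardless of relevance.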

Data Curation

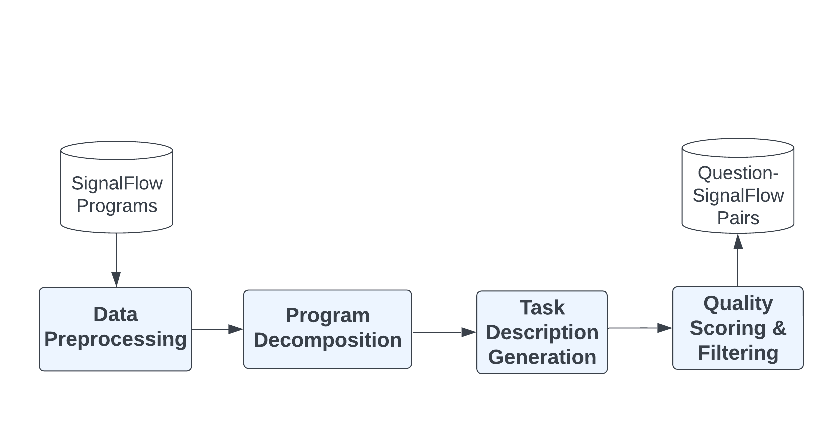

To translate user questions about their environments (e.g., “What’s the average CPU utilization for example-host over the past 5 minutes?”), our first goal was to curate a dataset with pairs of English questions and associated SignalFlow programs. This was achieved via a multi-step process indicated in the following diagram: data collection and preprocessing, SignalFlow decomposition, generating task instructions and questions, quality scoring, and producing final question-answer pairs.

Fig 1: Data Curation Steps

- Data collection and preprocessing: We collected many SignalFlow programs from internal Splunk O11y Cloud usage, along with metadata such as chart titles and descriptions. During preprocessing, we anonymized and masked sensitive information (e.g., custom metric names, organization information). We also ran every program through our SignalFlow syntax validation tool to ensure it would execute without syntax errors.

- SignalFlow decomposition: Many of our internal programs are quite complex and represent multi-step human reasoning. To extract useful building blocks for our model, we used an LLM to decompose complex SignalFlow programs into independent units, each of which is itself an executable program that does not depend on any other lines of the original program. Along with each program, we provided the LLM with chart titles, chart descriptions, and metrics metadata. Once decomposition was complete, we asked the model to score the decompositions and filtered out those with poor scores. The remaining units were then validated for syntactic correctness.

- Task description generation: We used a two-stage approach to generate descriptions of the programming tasks represented by the units extracted during decomposition.

  - Task instruction generation: We first prompted an LLM to generate task instructions detailed enough for someone to understand and solve the SignalFlow programming task. We provided the original SignalFlow program along with useful context to help the LLM generate more specific and meaningful descriptions.

  - Question generation: We then prompted the LLM with the task instruction, the SignalFlow program, and the context to generate English questions. Specifically, we asked for both casual and detailed questions that users might ask to solve the task. We found that the casual questions worked better for RAG and fine-tuning because they more closely match the questions users actually ask.

    - Casual question: “How much free RAM does each Load Balancer-API instance have?”

    - Detailed question: “Can you monitor, measure, and report the memory usage of each instance of Load Balancer-API to accurately calculate the percentage of available or free RAM?”

  - To ensure a diverse set of questions, we also prompted an LLM to generate several questions that address the same task and map to the same program. This covers different perspectives and phrasings and improves our chances of retrieving a relevant question-answer pair during the RAG stage.

- Quality scoring: We then asked the model to “self-reflect”² by scoring these questions for complexity and diversity, and we filtered out the low-scoring ones. The goal is for every remaining question to be reasonably answered by its program, so that the program can be treated as the “ground truth” for that question.
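The curation steps above can be summarized as a pipeline in which each LLM call or tool is a pluggable function. The sketch below assumes hypothetical hooks (`anonymize`, `is_valid`, `decompose`, `score_unit`, `write_instruction`, `write_questions`, `score_question`) and a single score threshold; it shows the order of operations and where filtering happens, not our actual prompts, scoring scales, or thresholds.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Example:
    question: str     # casual user question
    instruction: str  # detailed task instruction
    program: str      # SignalFlow program treated as ground truth


@dataclass
class CurationSteps:
    # Hypothetical hooks: each stands in for an LLM call or an internal tool.
    anonymize: Callable[[str], str]                   # mask sensitive names
    is_valid: Callable[[str], bool]                   # SignalFlow syntax validator
    decompose: Callable[[str], list[str]]             # program -> independent units
    score_unit: Callable[[str, str], float]           # (original, unit) -> score
    write_instruction: Callable[[str], str]           # stage 1: unit -> instruction
    write_questions: Callable[[str, str], list[str]]  # stage 2: (unit, instruction) -> questions
    score_question: Callable[[str, str], float]       # self-reflection: (question, unit) -> score


def curate(programs: list[str], steps: CurationSteps, threshold: float = 0.5) -> list[Example]:
    dataset: list[Example] = []
    for raw in programs:
        program = steps.anonymize(raw)
        if not steps.is_valid(program):
            continue  # preprocessing: keep only syntactically valid programs
        for unit in steps.decompose(program):
            # Drop poorly scored decompositions and units that do not validate on their own.
            if steps.score_unit(program, unit) < threshold or not steps.is_valid(unit):
                continue
            instruction = steps.write_instruction(unit)
            for question in steps.write_questions(unit, instruction):
                # Keep only questions the model itself scores at or above the threshold.
                if steps.score_question(question, unit) >= threshold:
                    dataset.append(Example(question, instruction, unit))
    return dataset
```

Keeping each step behind a function makes it straightforward to swap prompts or models for one stage without touching the filtering logic.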

Altogether, this procedure produced a curated dataset of tens of thousands of examples, each consisting of a casual question, a detailed task instruction, and a SignalFlow program.

SignalFlow Generation Architecture

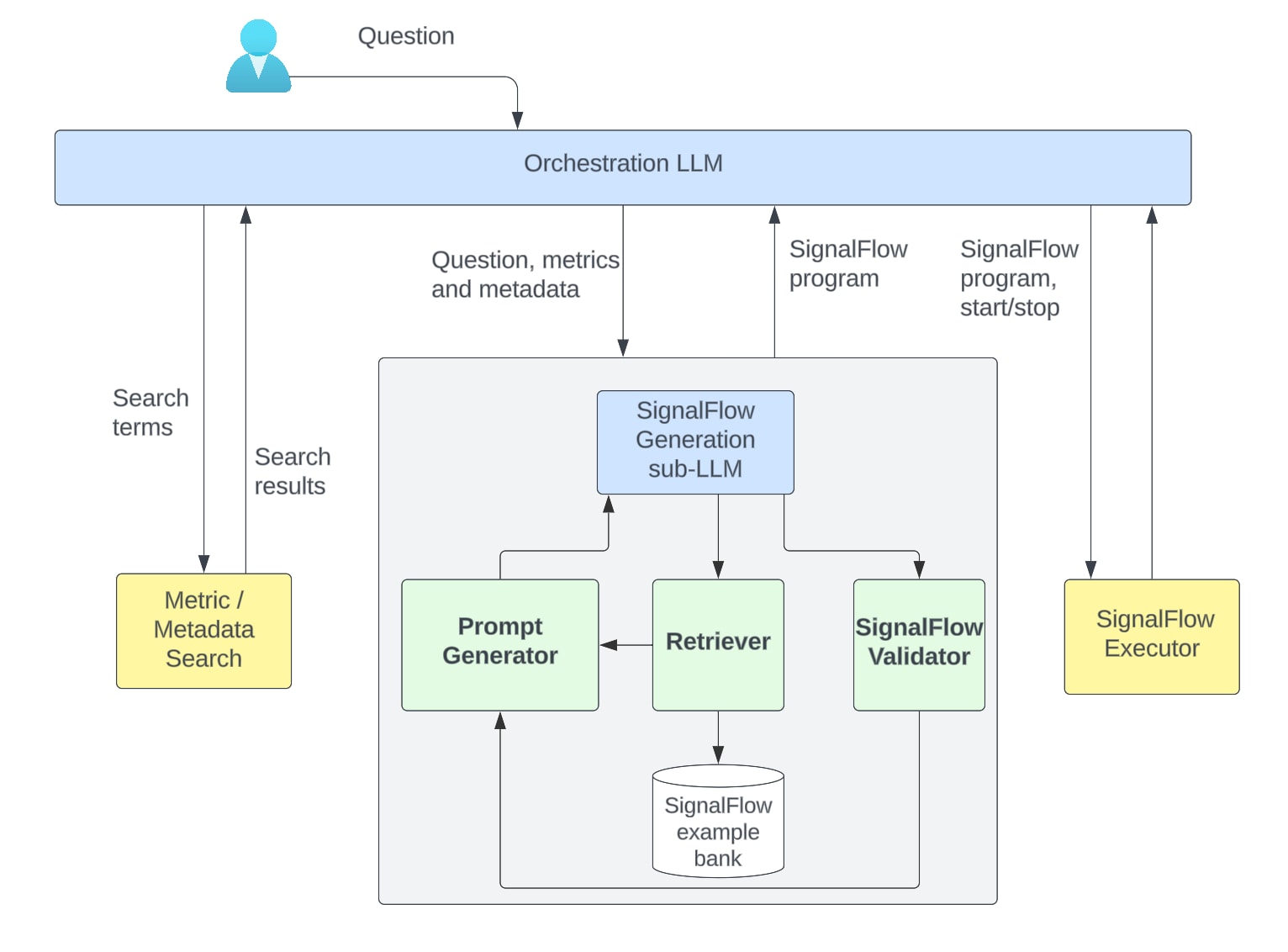

Building the natural language to SignalFlow model requires some scaffolding around the dataset we have just constructed. This scaffolding consists essentially of prompting for the agent (“sub-LLM”) responsible for SignalFlow generation, a mechanism for retrieving customer-specific metrics and metadata, and program validation (including use of error messages in the event validation fails). In the current implementation, the dataset enters the agent’s workflow via RAG.

Given an incoming user question, the orchestrator LLM will determine whether a metrics query is needed to provide an answer (which is the case if the user doesn’t provide a metric name). It obtains the metric names and their metadata by calling the appropriate tools. This requires extracting search terms from the user’s question. It then passes the initial user question, the metric name(s) and metric metadata to the SignalFlow generation sub-LLM. The workflow is depicted below.

Figure 2: Overall framework of our SignalFlow generation process
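The orchestrator's routing decision can be sketched as follows. This is an illustrative outline, not the AI Assistant's code: the dotted-identifier regex used to detect a user-supplied metric name is a hypothetical heuristic (the orchestrator itself is an LLM), and `extract_terms`, `search_metrics`, and `generate_signalflow` are placeholder hooks for the search-term extraction step, the metadata tools, and the sub-LLM.

```python
import re
from typing import Callable

# Hypothetical heuristic: treat dotted lowercase identifiers such as
# "cpu.utilization" as metric names the user supplied directly.
METRIC_NAME = re.compile(r"\b[a-z][a-z0-9_]*(?:\.[a-z0-9_]+)+\b")

Metadata = dict[str, dict]


def orchestrate(
    question: str,
    extract_terms: Callable[[str], list[str]],            # LLM step: question -> search terms
    search_metrics: Callable[[list[str]], Metadata],      # tool call: terms -> metric metadata
    generate_signalflow: Callable[[str, Metadata], str],  # SignalFlow generation sub-LLM
) -> str:
    named = METRIC_NAME.findall(question)
    if named:
        # The user already named the metric(s), so no metrics query is needed.
        metrics: Metadata = {name: {} for name in named}
    else:
        # Otherwise, search for candidate metrics and their metadata.
        metrics = search_metrics(extract_terms(question))
    return generate_signalflow(question, metrics)
```

A real implementation would likely still fetch metadata (such as available dimensions) for user-named metrics; the sketch skips that to keep the two branches easy to compare.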

The SignalFlow generation sub-LLM coordinates all steps involved in generating SignalFlow programs from questions. It takes a natural language question and some metadata context and produces a fully formed SignalFlow program that answers the question, using the provided metadata in the program where necessary. Its system prompt includes descriptions and examples of important SignalFlow concepts and constructions. Its main components are as follows:

- The prompt generator crafts LLM inputs (prompts) from the original question, related metrics and metadata, any retrieved examples, and syntax validation feedback.

- The example bank is the set of curated question-SignalFlow pairs, organized into a vector index with an accompanying retrieval mechanism. To fetch the most relevant examples, the user’s question is embedded in the same space as the questions from the curated pairs, and cosine similarity is used to find the top k closest question-SignalFlow pairs. The retrieved examples are inserted into the prompt.

- The SignalFlow validator checks a candidate program for syntactic correctness. If the program validates successfully, the sub-LLM returns it to the orchestrator, which typically proceeds to execute it. If validation fails, the error messages are appended to the sub-LLM’s context and the sub-LLM retries, up to a few times.
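The three components fit together as a retrieve, prompt, validate, and retry loop. The sketch below is one way to express that loop under stated assumptions: `embed`, `llm`, and `validate` are hypothetical callables standing in for the embedding model, the generation model, and the SignalFlow validator, the example bank is an in-memory list rather than a vector index, and the prompt layout and `max_attempts=3` default are illustrative choices.

```python
import math
from typing import Callable, Optional

Vector = list[float]
BankRow = tuple[Vector, str, str]  # (question embedding, question, SignalFlow program)


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: Vector, bank: list[BankRow], k: int = 3) -> list[tuple[str, str]]:
    # Rank curated pairs by cosine similarity between question embeddings.
    ranked = sorted(bank, key=lambda row: cosine(query_vec, row[0]), reverse=True)
    return [(q, p) for _, q, p in ranked[:k]]


def build_prompt(question: str, metadata: dict, examples: list[tuple[str, str]], errors: list[str]) -> str:
    parts = [f"Example question: {q}\nExample SignalFlow: {p}" for q, p in examples]
    parts.append(f"Metric metadata: {metadata}")
    if errors:
        parts.append("The previous attempt failed validation:\n" + "\n".join(errors))
    parts.append(f"Question: {question}\nSignalFlow:")
    return "\n\n".join(parts)


def generate_signalflow(
    question: str,
    metadata: dict,
    embed: Callable[[str], Vector],                     # embedding model (assumed)
    bank: list[BankRow],                                # example bank
    llm: Callable[[str], str],                          # generation model (assumed)
    validate: Callable[[str], tuple[bool, list[str]]],  # syntax validator (assumed)
    k: int = 3,
    max_attempts: int = 3,
) -> Optional[str]:
    examples = top_k(embed(question), bank, k)
    errors: list[str] = []
    for _ in range(max_attempts):
        program = llm(build_prompt(question, metadata, examples, errors))
        ok, messages = validate(program)
        if ok:
            return program       # valid: hand back to the orchestrator
        errors.extend(messages)  # invalid: feed validator errors into the retry
    return None                  # still invalid after max_attempts
```

Returning `None` rather than the last invalid program lets the caller decide how to report a failure instead of executing code that is known not to validate.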

Evaluation

Evaluating the correctness of a generated SignalFlow program is difficult because a given question may have multiple correct programs. We therefore use several evaluation metrics to get an overall picture of the sub-LLM’s performance.

- We use embedding-based similarity to measure how close the generated and ground truth programs are. This helps because semantically similar programs can look very different from one another. The main challenge is choosing an embedding model that is sufficiently sensitive to typical SignalFlow constructions.

- Manually comparing so many program pairs is difficult and time-consuming, so we also use an LLM-based score. We prompt an evaluator LLM with the user’s question, the generated program, and the ground truth, and ask it to score the generated program on various factors (which functions are used, relevance to the user’s original question, brevity, etc.) and to give an explanation justifying its score. This approach depends heavily on providing an explicit scoring rubric.
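An explicit rubric can be encoded directly in the evaluator prompt, and the reply parsed into a score plus an explanation. The sketch below is illustrative only: the criteria mirror the factors listed above, but the 1 to 5 scale, the JSON reply format, and the names `RUBRIC`, `build_eval_prompt`, and `parse_eval` are assumptions, and the call to the evaluator LLM itself is left out.

```python
import json

# Hypothetical rubric: criteria follow the factors named above; the 1-5 scale
# and the JSON reply format are assumptions for this sketch.
CRITERIA = ("functions", "relevance", "brevity")

RUBRIC = """Score the generated SignalFlow program from 1 (poor) to 5 (excellent) on each criterion:
- functions: uses the same or equivalent SignalFlow functions as the ground truth
- relevance: answers the user's original question
- brevity: contains no unnecessary steps
Reply with JSON only: {"functions": n, "relevance": n, "brevity": n, "explanation": "<why>"}"""


def build_eval_prompt(question: str, generated: str, ground_truth: str) -> str:
    return (f"{RUBRIC}\n\nUser question: {question}\n\n"
            f"Generated program:\n{generated}\n\n"
            f"Ground truth program:\n{ground_truth}")


def parse_eval(response: str) -> tuple[float, str]:
    """Return (mean rubric score, explanation) from the evaluator's JSON reply."""
    data = json.loads(response)
    scores = [int(data[c]) for c in CRITERIA]
    if any(not 1 <= s <= 5 for s in scores):
        raise ValueError(f"score out of range: {scores}")
    return sum(scores) / len(scores), data["explanation"]
```

Keeping the explanation alongside the numeric score is what makes it possible to read evaluator feedback across many programs and look for recurring weaknesses.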

In terms of guiding our development and identifying potential weaknesses in our generative AI, the most valuable output of the evaluator LLM is its explanations. When the evaluator explains the differences between two programs and how much those differences matter, it greatly aids manual evaluation, because we can cross-reference our own understanding of the programs with the evaluator’s explanation. Reading the evaluator feedback and looking for patterns also makes it much easier to see whether the sub-LLM is weak in specific aspects of SignalFlow generation.

Across these metrics, our sub-LLM-based approach outperforms mainstream SignalFlow-capable models by a large margin. Thanks to the syntax validation feedback loop, it also generates SignalFlow programs of consistently high syntactic quality, with a validation success rate above 99% across 1,000 programs. These empirical results align with our internal anecdotal evidence: we have seen multiple examples of the AI Assistant generating correct SignalFlow of moderate complexity where other LLM-based chatbots were unable to provide useful assistance.

Conclusion

Building a generative AI capable of writing SignalFlow programs has required technical progress along several dimensions: curating high-quality question-program pairs, ensuring semantic and syntactic correctness in generated code with RAG and validation tools, and creating semi-automated evaluation tools. Through the SignalFlow generation sub-LLM, we have both advanced the capabilities of the AI Assistant in Observability Cloud and laid a foundation for future enhancements. Looking ahead, we aim to improve SignalFlow generation further through advanced RAG techniques, improved evaluation pipelines, and user feedback integration.

Splunk’s position at the forefront of observability technology provides a solid platform for these advancements, promising ongoing innovation and improved user experiences in monitoring and analytics. Moving forward, we will continue to refine the AI Assistant, driven by customer feedback and evolving technologies. The AI Assistant in Observability Cloud is currently in private preview and available to select preview participants, so sign up today!

¹ The AI Assistant in Observability Cloud is currently available to selected private preview participants upon Splunk's prior approval.

² The “self-reflection” capabilities of LLMs are still being studied and debated. Some papers, such as Reflexion: Language Agents with Verbal Reinforcement Learning and Large Language Models Can Self-Improve, support the idea of self-reflection. Without taking a side in that debate, we chose to have an LLM score and filter out its own generations because we found empirically that this self-reflection step aided our follow-up manual curation, in which we labeled generated data as high quality. For our specific task of generating a dataset of English question and SignalFlow program pairs, this was a convenient way to filter out generated pairs, not to justify the inclusion of other pairs in our dataset.

This blog was co-authored by:

- Om Rajyaguru, an Applied Scientist at Splunk working primarily on designing, fine-tuning, and evaluating multi-agent LLM systems, as well as on time series clustering problems. He received his B.S. in Applied Mathematics and Statistics in June 2022; his undergraduate research focused on multimodal learning and low-rank approximation methods for deep neural networks.

- Joseph Ross, a Senior Principal Applied Scientist at Splunk working on applications of AI to problems in observability. He holds a PhD in mathematics from Columbia University.

- Akshay Mallipeddi, a Senior Applied Scientist at Splunk. His principal focus is augmenting the AI Assistant in Observability Cloud by formulating strategies to improve data integration, which is critical for the large language models. He is also involved in fine-tuning large language models. He received his M.S. in Computer Science from Stony Brook University, New York.

- Kristal Curtis, a Principal Software Engineer at Splunk working on a mix of engineering and AI science projects, all with the goal of integrating AI into our products so they are easier to use and provide more powerful insights about users’ data and systems. Prior to joining Splunk, Kristal received her Ph.D. in Computer Science from UC Berkeley, where she studied with David Patterson and Armando Fox in the RAD & AMP Labs.

With special thanks to contributors:

- Liang Gou, a Director of AI at Splunk working on GenAI initiatives focused on observability and enterprise applications. He received his Ph.D. in Information Science from Penn State University.

- Harsh Vashishta, a Senior Applied Scientist at Splunk working on the AI Assistant in Observability Cloud. He received his M.S. in Computer Science from the University of Maryland, Baltimore County.

- Christopher Lekas, a Principal Software Engineer at Splunk and quality owner for the AI Assistant in Observability Cloud. He holds a B.A. in computer science and economics from Swarthmore College.


About Splunk

The world’s leading organizations rely on Splunk, a Cisco company, to continuously strengthen digital resilience with our unified security and observability platform, powered by industry-leading AI.

Our customers trust Splunk’s award-winning security and observability solutions to secure and improve the reliability of their complex digital environments, at any scale.