From Zero to LLM-Hero: Plan, Architect and Operationalize your AI Assistant in Splunk

The AI hype is in full swing. For a few months now, however, we have been able to observe the hype turning into reality: very specific use cases are emerging in the B2B world, and initial tests and “preview” implementations are already delivering real business value.

In this blog post, we show you how to connect Splunk data to LLMs so that you can interact with that data in natural language. We base it on the approach taken by Zeppelin (a global leader in sales and services for construction machinery, power systems, rental equipment and plant engineering).

Spoiler Alert - BEFORE YOU START

Once you’ve finished reading this blog post, you probably can't wait to set up a prototype yourself. But here’s a word of warning: PAUSE.

As a first step, sit down and write a project scope that covers:

- the purpose and goal of your AI implementation,

- the business value for your organization,

- what data is needed and

- what the data flow would look like.

This allows you to establish guardrails for validating your efforts, manage expectations, and identify data privacy and security requirements. The latter matter early, because they determine which components you can and cannot use. You'll probably also want to start by studying the AI security reference architectures from Cisco Robust Intelligence, which describe secure design patterns and practices for chatbots, RAG apps and AI agents.

For example, you can connect directly to a cloud-hosted LLM, or indirectly through a broker that adds security and compliance protections, including capacity and monitoring capabilities like those you get with Cisco Robust Intelligence. You might even decide to run a pre-trained model in island mode hosted on premises, or deploy your own custom LLM in a container connected to the Splunk App for Data Science and Deep Learning (DSDL).

The Business Case

Before we jump into the architectural details that you can adapt for your project, I'd like to show you an example that Florian Zug built and successfully put into operation at Zeppelin.

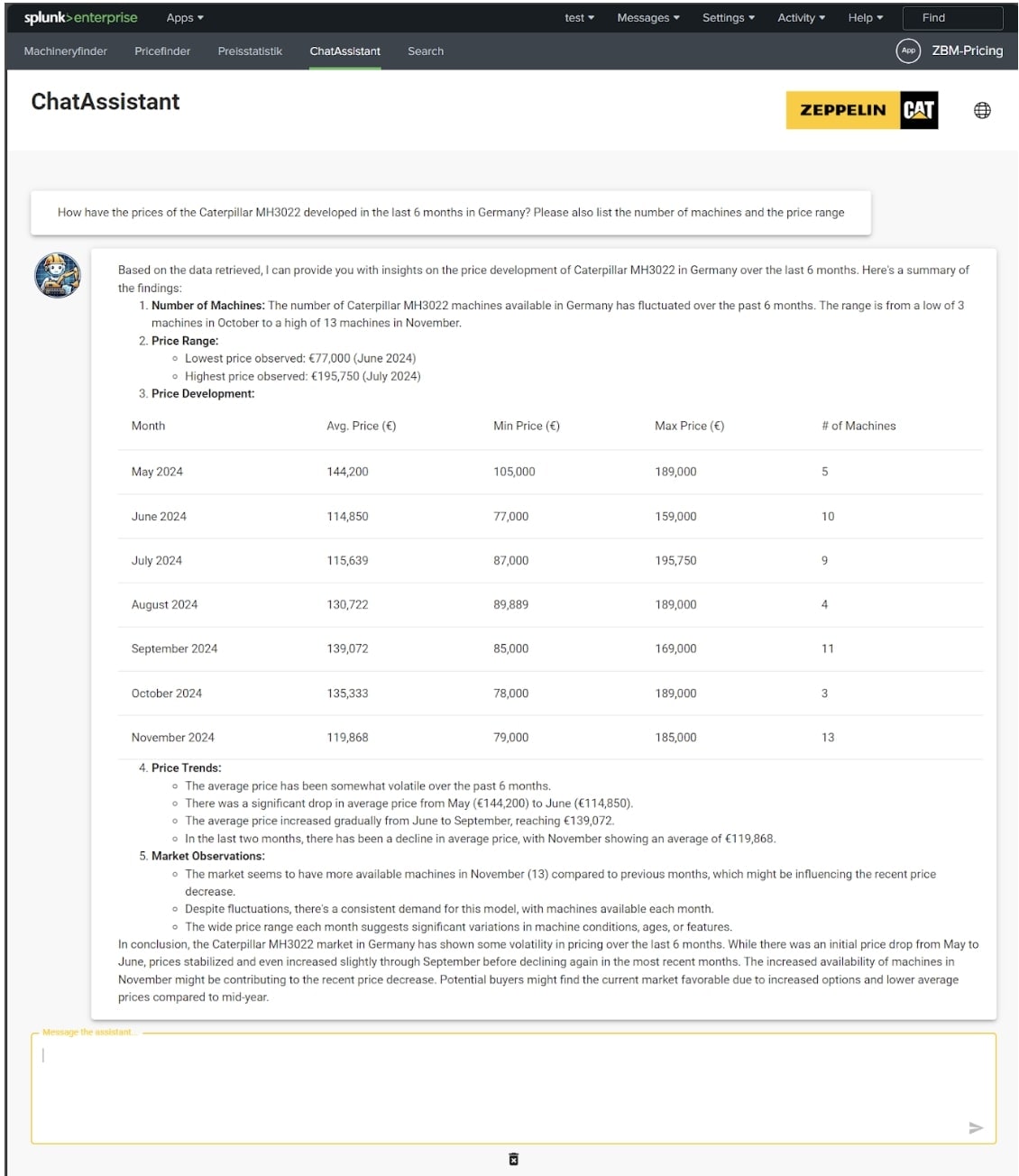

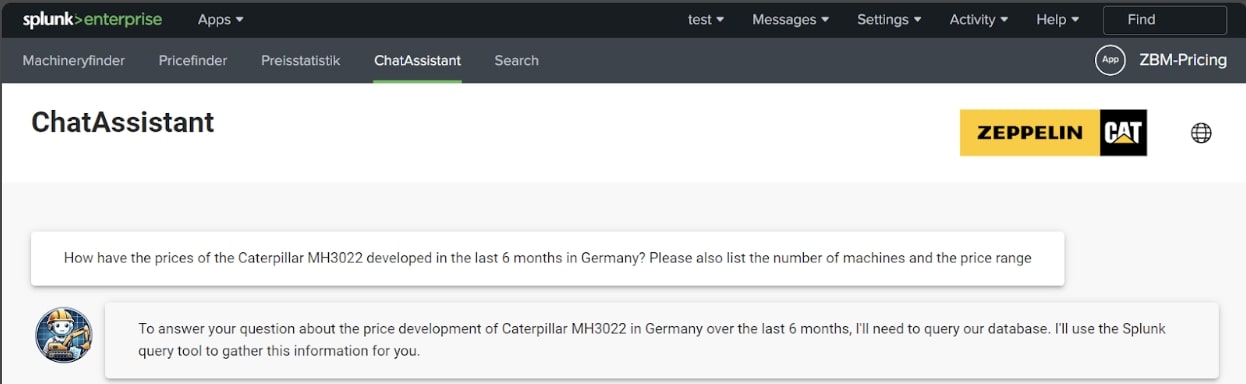

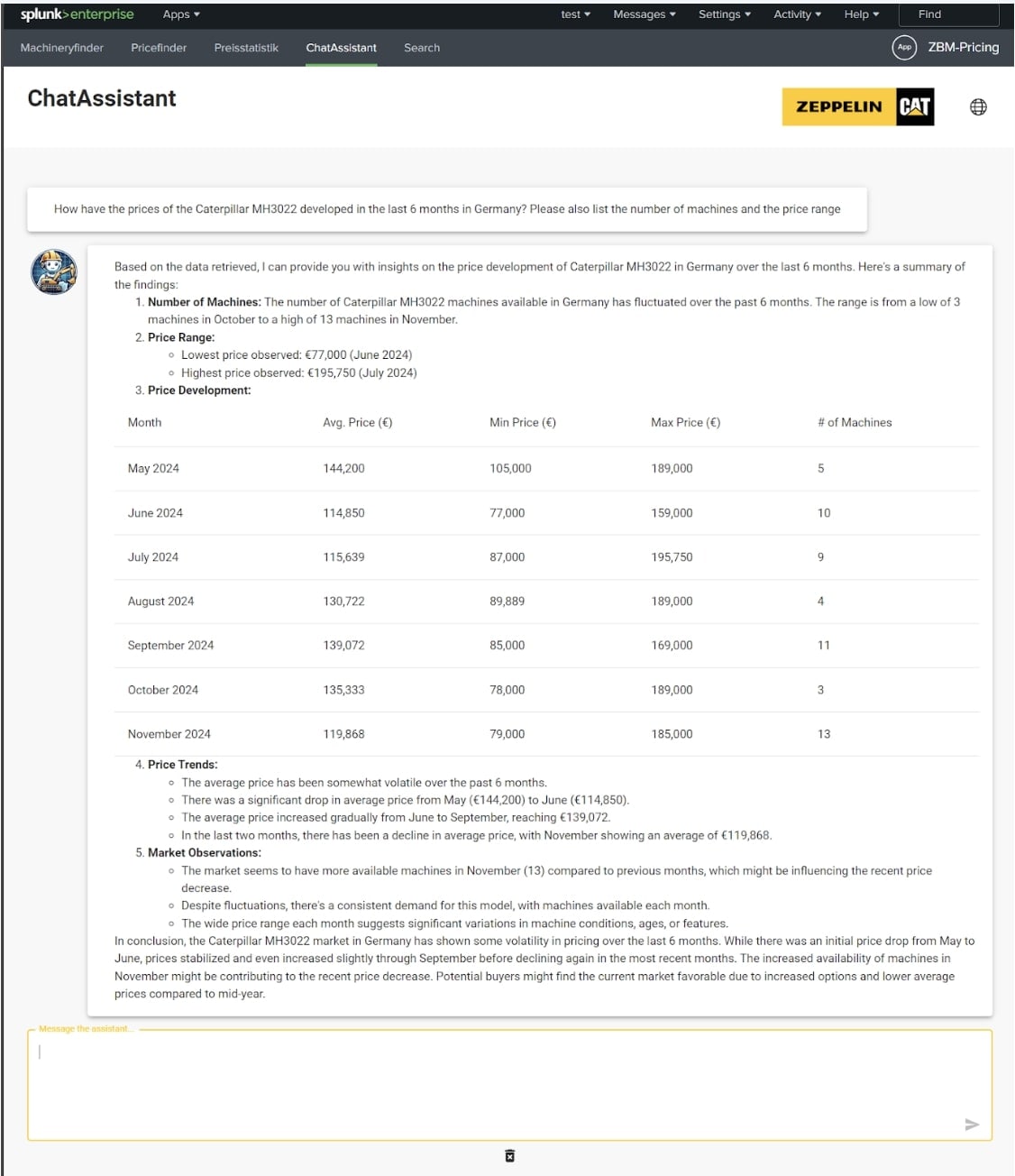

The goal was to create an AI assistant that would allow employees to query ANY pricing information about used machinery. The data is continuously pulled from listings on multiple websites around the world that sell used equipment, and it is stored in Splunk. Even users without any Splunk knowledge should get the right answers through the power of AI. Visualizations built with traditional Splunk dashboards or the Splunk React UI can only answer the questions that were anticipated when the dashboard was created. An AI assistant removes this barrier: users can simply type a prompt such as “How have Caterpillar MH3022 prices in Germany changed in the last 6 months?”

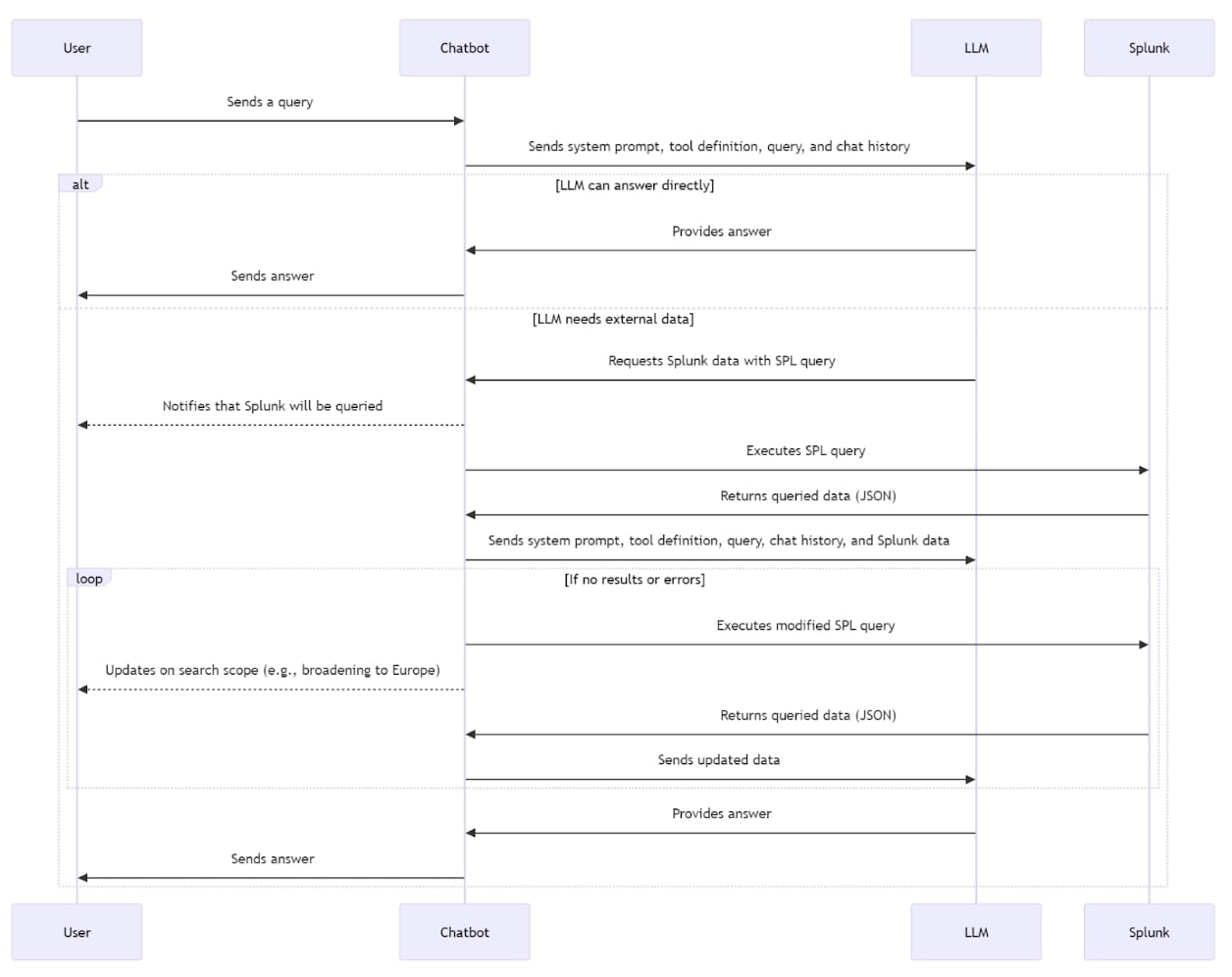

The Architecture and Data Flow

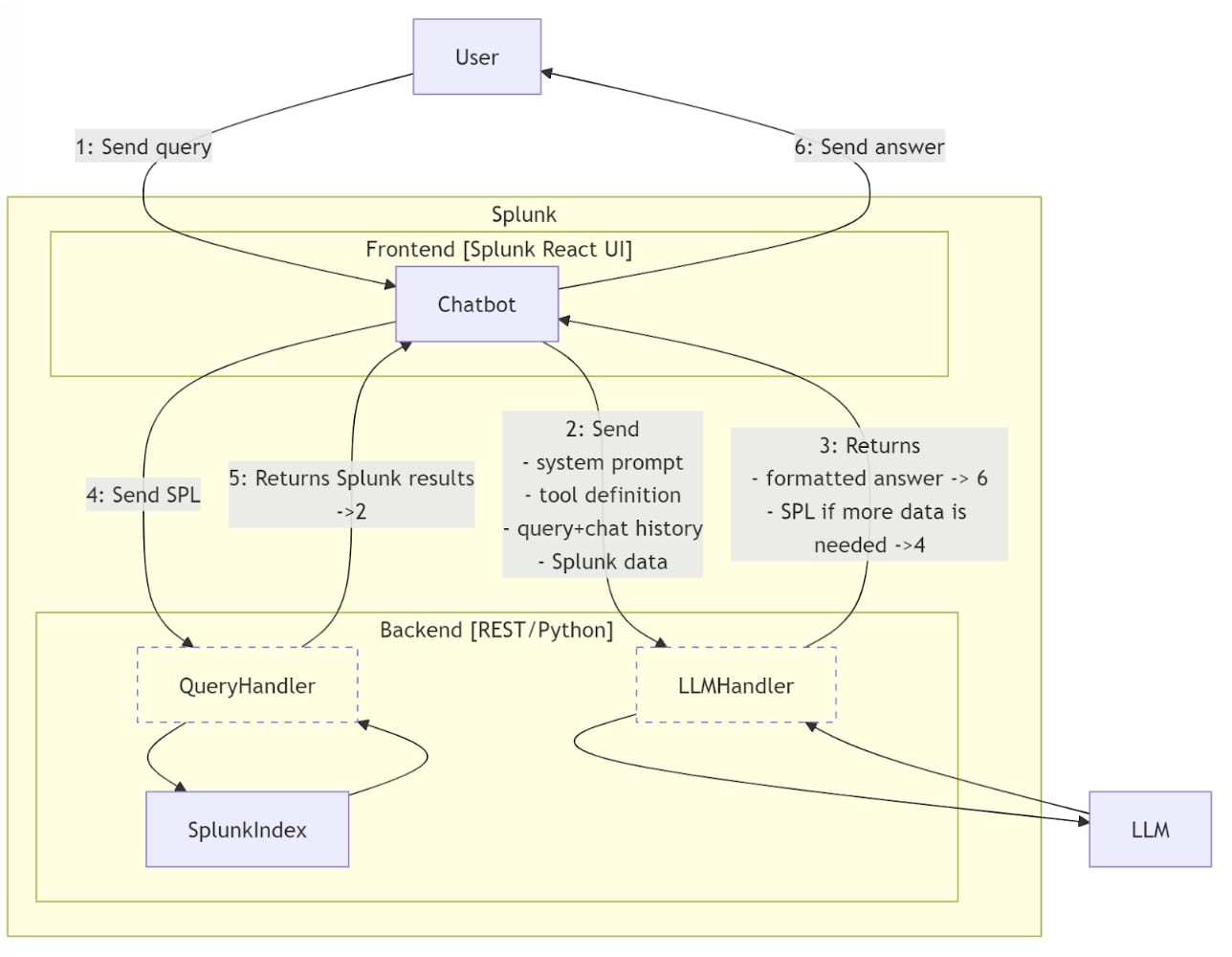

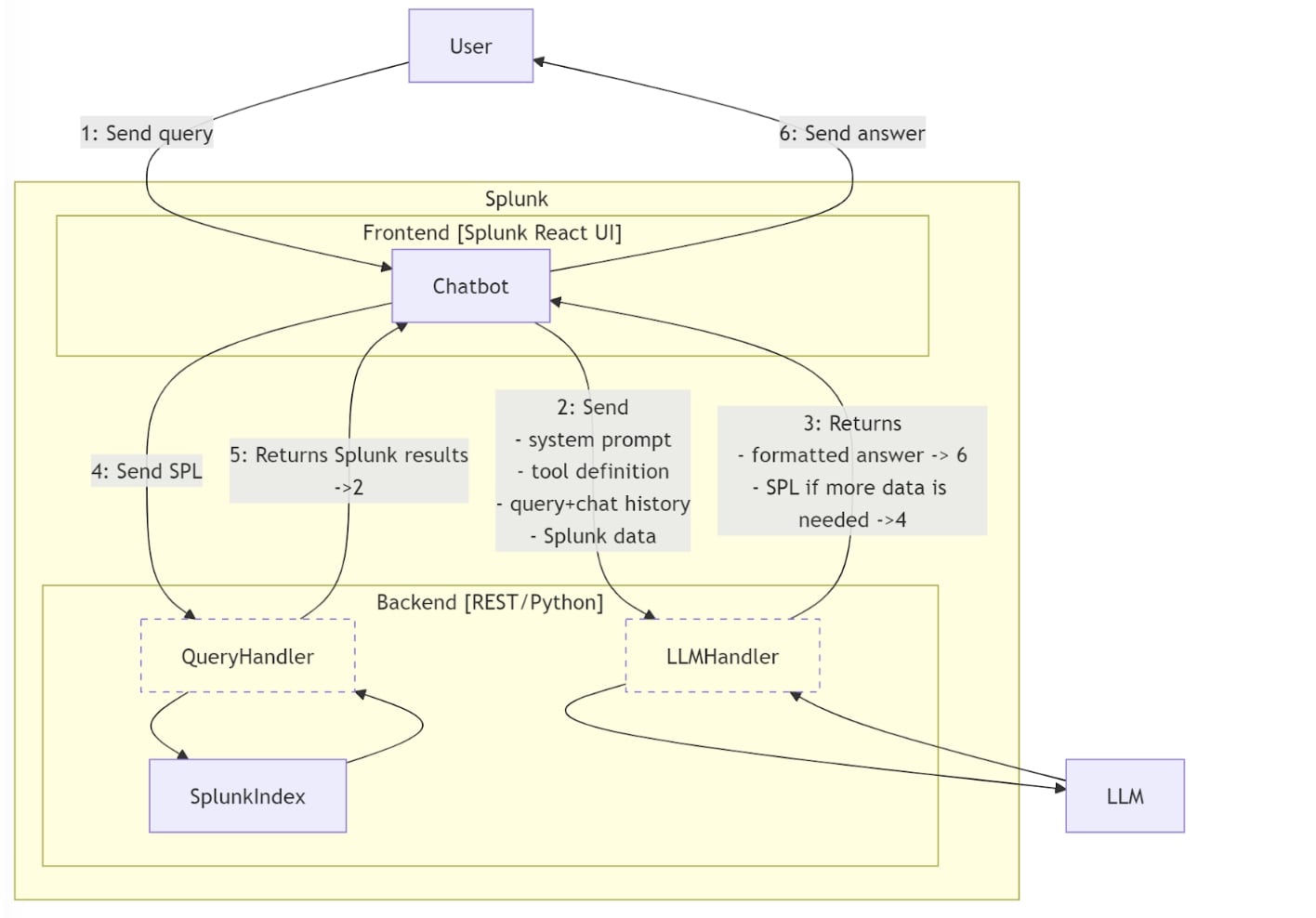

It’s key to understand the high-level architecture and its components:

- Chatbot: Implements interaction logic in the frontend using the Splunk React UI framework, hosted within Splunk.

- QueryHandler: A small proxy that sends SPL queries to Splunk in the context of a restricted user (with access to the required index only) and returns the Splunk data.

- LLMHandler: Keeps the LLM credentials out of the frontend and relays requests to, and responses from, the LLM.

- SplunkIndex: The Splunk index containing data for used machinery offers.
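To make the QueryHandler's role more concrete, here is a minimal Python sketch. It assumes the handler talks to Splunk's REST search export endpoint (`/services/search/jobs/export`, with the `search` and `output_mode` parameters) using an authentication token issued to the restricted user. The index name `used_machinery`, the regex index check, the 200-row cap and all helper names are hypothetical and not taken from Zeppelin's implementation. The restricted Splunk role remains the actual access control; the string check is only a coarse extra layer.

```python
"""Hypothetical QueryHandler sketch: forwards LLM-generated SPL to Splunk's
REST export endpoint with a token that belongs to a restricted user."""
import json
import re
import urllib.parse
import urllib.request

# Assumption: illustrative index name, not the one used at Zeppelin.
ALLOWED_INDEX = "used_machinery"

# Matches index=foo, index = "foo", index=* (case-insensitive).
_INDEX_RE = re.compile(r'\bindex\s*=\s*"?([\w*-]+)"?', re.IGNORECASE)


def references_only_allowed_index(spl: str, allowed: str = ALLOWED_INDEX) -> bool:
    """Coarse defense-in-depth check; the restricted Splunk role is the real control."""
    indexes = _INDEX_RE.findall(spl)
    return bool(indexes) and all(i.lower() == allowed.lower() for i in indexes)


def build_export_request(spl: str, base_url: str, token: str) -> urllib.request.Request:
    """Build a POST to /services/search/jobs/export that returns results as JSON."""
    query = spl.strip()
    # The REST API expects a full search string: prepend "search" unless the
    # query already starts with it or with a generating command such as "| tstats".
    if not query.startswith("|") and not query.lower().startswith("search "):
        query = "search " + query
    body = urllib.parse.urlencode({"search": query, "output_mode": "json"}).encode()
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/services/search/jobs/export",
        data=body,
        headers={"Authorization": f"Bearer {token}"},
        method="POST",
    )


def parse_export_lines(raw: str, max_rows: int = 200) -> list[dict]:
    """Keep only result rows and cap them to limit what is sent to the LLM."""
    rows = []
    for line in raw.splitlines():
        if not line.strip():
            continue
        obj = json.loads(line)
        if "result" in obj:
            rows.append(obj["result"])
        if len(rows) >= max_rows:
            break
    return rows


def run_query(spl: str, base_url: str, token: str) -> list[dict]:
    if not references_only_allowed_index(spl):
        raise PermissionError("query touches an index outside the allowed scope")
    req = build_export_request(spl, base_url, token)
    # Splunk streams newline-delimited JSON objects back for output_mode=json.
    with urllib.request.urlopen(req) as resp:
        return parse_export_lines(resp.read().decode("utf-8"))
```

Capping the rows keeps the follow-up LLM request small. One option is to describe aggregation commands such as `stats` in the tool definition so that the LLM summarizes in SPL rather than pulling raw listings.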

The Flow:

1. The user asks the Chatbot a question within Splunk.

2. The Chatbot sends a request through the LLMHandler to the LLM system (Anthropic Claude in this case), including:

- User’s question

- System prompt (describing the chatbot’s behavior and its ability to query used machinery information via Splunk)

- Tool definition (specifies that the LLM should return an SPL query if it needs data from Splunk, and includes a description of the Splunk index, detailing its fields and values)

- Chat history (the complete chat history is sent)

- Splunk data (if previously queried)

3. The LLM either sends back a formatted answer (proceed to step 6) or generates an SPL query to fetch the necessary data.

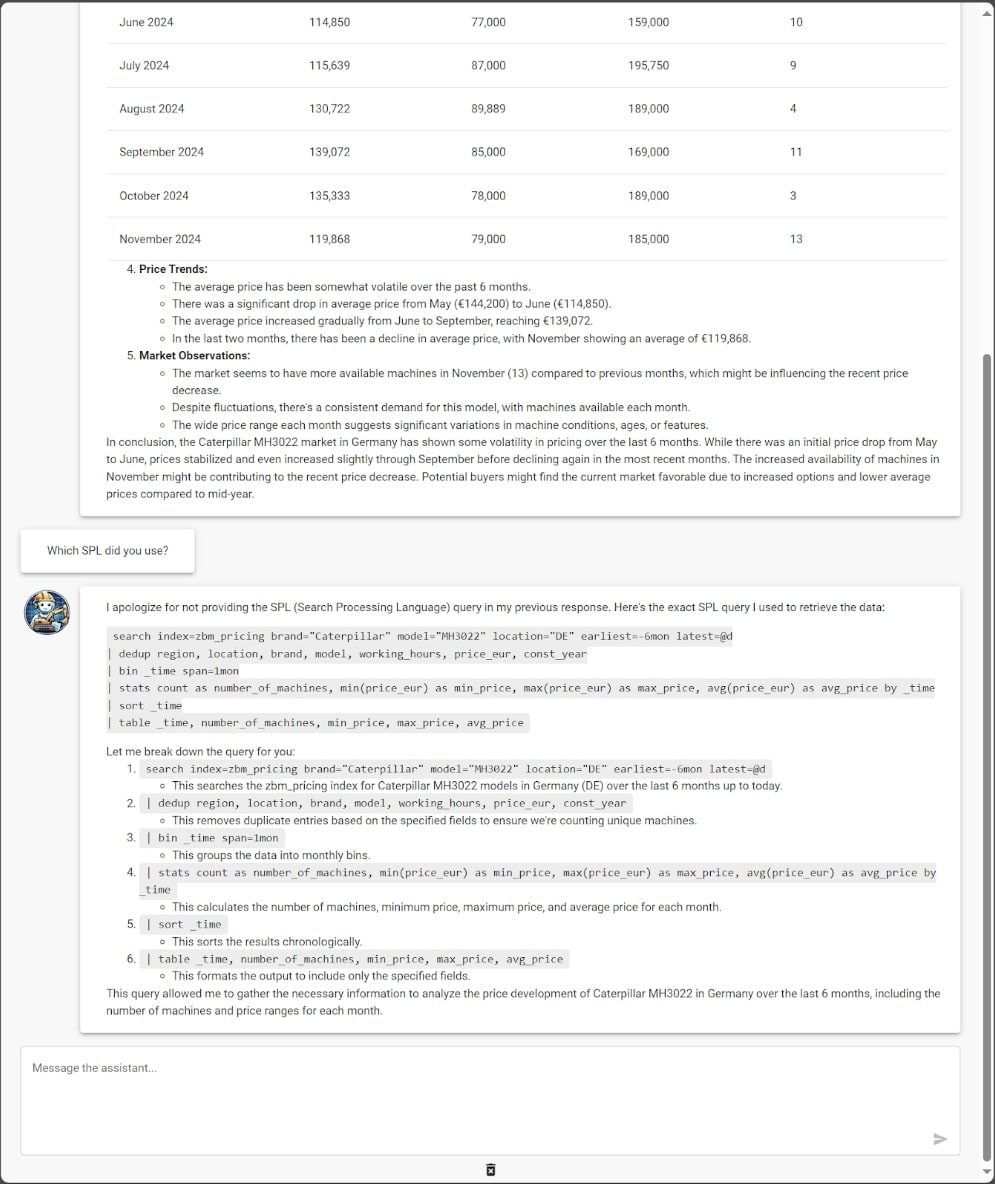

4. If an SPL query is generated, it is sent to Splunk via the QueryHandler.

5. The results are processed and sent back to the Chatbot, which then jumps back to step 2 with the new data.

6. If the LLM returns a complete answer, the Chatbot displays it to the user.
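The request described in step 2 maps naturally onto tool use in Anthropic's Messages API. The following sketch shows how an LLMHandler might assemble it, assuming the public `https://api.anthropic.com/v1/messages` endpoint, an API key held server-side in a hypothetical `ANTHROPIC_API_KEY` environment variable, and an illustrative tool named `run_splunk_query`. The system prompt wording and the field list in the tool description are invented for illustration, and the model ID is left as a parameter because the article does not say which Claude model is used.

```python
"""Hypothetical LLMHandler sketch: assembles an Anthropic Messages API request."""
import json
import os
import urllib.request

ANTHROPIC_URL = "https://api.anthropic.com/v1/messages"

# Assumption: illustrative system prompt describing the chatbot's behavior.
SYSTEM_PROMPT = (
    "You are an assistant for used-machinery pricing. "
    "If you need data, call the run_splunk_query tool with an SPL query."
)

# Assumption: tool name and field list are illustrative, not Zeppelin's.
SPLUNK_TOOL = {
    "name": "run_splunk_query",
    "description": (
        "Runs an SPL query against index=used_machinery and returns the rows. "
        "Fields: manufacturer, model, country, price, currency, listing_date."
    ),
    "input_schema": {
        "type": "object",
        "properties": {"spl": {"type": "string", "description": "Complete SPL query"}},
        "required": ["spl"],
    },
}


def build_payload(history: list[dict], model: str, max_tokens: int = 1024) -> dict:
    """history is the complete conversation, including earlier Splunk results."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": SYSTEM_PROMPT,
        "tools": [SPLUNK_TOOL],
        "messages": history,
    }


def call_llm(payload: dict) -> dict:
    # The API key is read on the server side and never reaches the Splunk frontend.
    req = urllib.request.Request(
        ANTHROPIC_URL,
        data=json.dumps(payload).encode(),
        headers={
            "x-api-key": os.environ["ANTHROPIC_API_KEY"],
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```

Because the tool definition carries the index description, the LLM can write SPL against the right fields without the user knowing them. Splunk data from earlier rounds travels inside `history` as `tool_result` blocks, so the whole context is resent on every request.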

If the SPL query returns no results, the LLM generates improved queries or broadens the search scope (e.g., if the user asks for offers of a specific machine in Germany and Splunk returns no data, the LLM might create a new query considering offers in Europe or worldwide).
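Steps 2 to 6, including this retry behavior, amount to a loop. The sketch below is one way to express it, assuming Anthropic-style response content blocks (`text` and `tool_use`) and returning Splunk rows to the LLM as `tool_result` blocks. The LLM call and the Splunk call are injected as plain functions so the loop can run without network access; `max_rounds` is a hypothetical safeguard against endless re-querying and is not part of the described setup.

```python
"""Hypothetical Chatbot loop for steps 2-6, with the handlers injected."""
import json
from typing import Callable

LlmFn = Callable[[list[dict]], dict]    # e.g. a request through the LLMHandler
QueryFn = Callable[[str], list[dict]]   # e.g. a request through the QueryHandler


def answer(question: str, history: list[dict], llm: LlmFn, query: QueryFn,
           max_rounds: int = 5) -> str:
    history.append({"role": "user", "content": question})            # step 1
    for _ in range(max_rounds):
        response = llm(history)                                       # step 2
        history.append({"role": "assistant", "content": response["content"]})
        tool_calls = [b for b in response["content"] if b["type"] == "tool_use"]
        if not tool_calls:                                            # step 6
            return "".join(b["text"] for b in response["content"] if b["type"] == "text")
        results = []
        for call in tool_calls:                                       # steps 3-5
            rows = query(call["input"]["spl"])
            # An empty list goes back as "[]" so the LLM can broaden the query.
            results.append({"type": "tool_result", "tool_use_id": call["id"],
                            "content": json.dumps(rows)})
        history.append({"role": "user", "content": results})
    return "Sorry, no answer was found within the query budget."
```

An empty result is not treated as an error: handing `[]` back to the LLM gives it the chance to widen the scope, for example from Germany to Europe. Because the full history, including every generated SPL query, is resent on each turn, the user can later ask in the same conversation which query was used.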

Detailed Flow:

The User Experience:

In the same conversation, ask for details to gain insight into which Splunk query was generated and used for the question:

Thanks a lot to Florian for his amazing work and his passion. Sharing is caring!

Authors: Florian Zug (Zeppelin), Philipp Drieger (Splunk) and Matthias Maier (Splunk)

