From Complexity to Clarity: Leveraging AI to Simplify Fraud, Waste, and Abuse Investigations at Every Level

Fraud, waste, and abuse pose significant challenges across all sectors, from corporate enterprises to federal institutions. With increasing scrutiny from stakeholders and the public, addressing these issues has become an urgent priority, given their considerable financial and reputational impacts.

Traditionally, detecting and remediating fraud involves a myriad of specialized tools that, although capable, remain largely inaccessible to the very professionals who need them most—less-technical business users.

Fraud investigators typically have law enforcement, accounting, legal affairs, or compliance backgrounds. While highly skilled in their respective domains, they often face challenges when using complex analytical tools designed primarily for technical specialists.

There are significant yet frequently overlooked opportunities to simplify existing tools.

Enter Artificial Intelligence (AI) and Large Language Models (LLMs)—transformative technologies that promise to reshape the landscape of fraud detection, waste minimization, and abuse prevention by making advanced data analytics accessible and intuitive for non-technical users.

AI and Machine Learning in Your Organization

AI presents an unprecedented chance to simplify complex analytics. The goal is clear yet powerful: enable business users to pose questions in plain, domain-specific language and receive actionable answers in intuitive formats such as visual dashboards, concise summaries, or interactive visualizations.

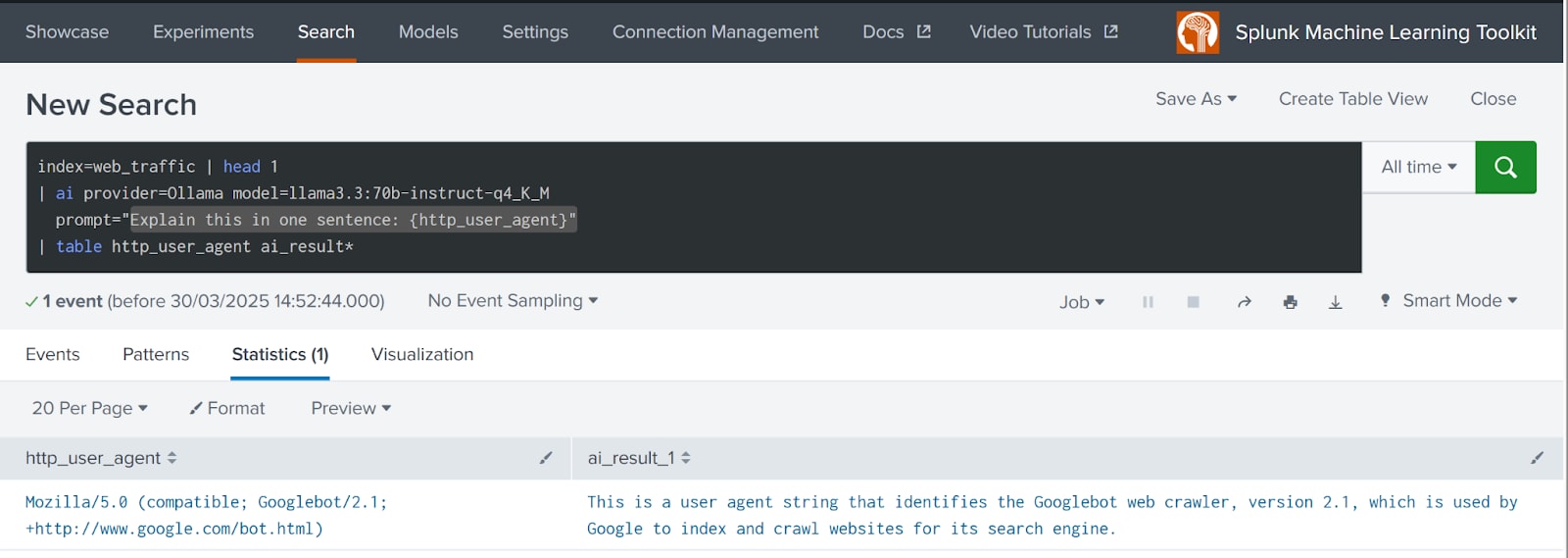

The upcoming update to Splunk’s Machine Learning Toolkit (MLTK), version 5.6 and above, introduces powerful new features, including an integration with third-party LLMs. These enhancements allow Splunk to interact with any local or remote LLM using a simple | ai ... SPL command, as demonstrated below:

The example also demonstrates how the 'prompt' content can be expanded with values from other available data fields.
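The idea of expanding a prompt with field values can be illustrated outside Splunk with a few lines of Python. This is only a sketch: the `{field}` placeholder style, the `expand_prompt` helper, and the sample events are assumptions made for illustration, not MLTK's actual syntax or data.

```python
import re

def expand_prompt(template: str, row: dict) -> str:
    """Replace each {field} placeholder with that field's value from one event."""
    def substitute(match: re.Match) -> str:
        field = match.group(1)
        # Leave unknown placeholders untouched so missing fields are easy to spot.
        return str(row[field]) if field in row else match.group(0)
    return re.sub(r"\{(\w+)\}", substitute, template)

# Hypothetical events; in Splunk these would be search results.
events = [
    {"user": "alice", "src_country": "DE", "status": 500},
    {"user": "bob", "src_country": "US", "status": 200},
]
template = "Explain why a {status} response for {user} from {src_country} may be suspicious."

# One expanded prompt per event, ready to be sent to an LLM.
prompts = [expand_prompt(template, event) for event in events]
```

Each event produces its own prompt, so the same instruction can be applied row by row to whatever fields the search returns.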

Following these updates to MLTK and AI Commander functionality, we conducted a successful Proof of Concept (POC) to demonstrate how Splunk and AI integration can simplify complex data analytics for business users.

The idea was to implement the following logic with medium-sized LLMs, without fine-tuning:

The plan is simple: let non-technical business users drive fraud or cybersecurity investigations in their business language of choice.

The first POC implementation of this logic was built within the Splunk App for Fraud Analytics.

The interface was intentionally designed for simplicity, featuring just a single input field for user questions:

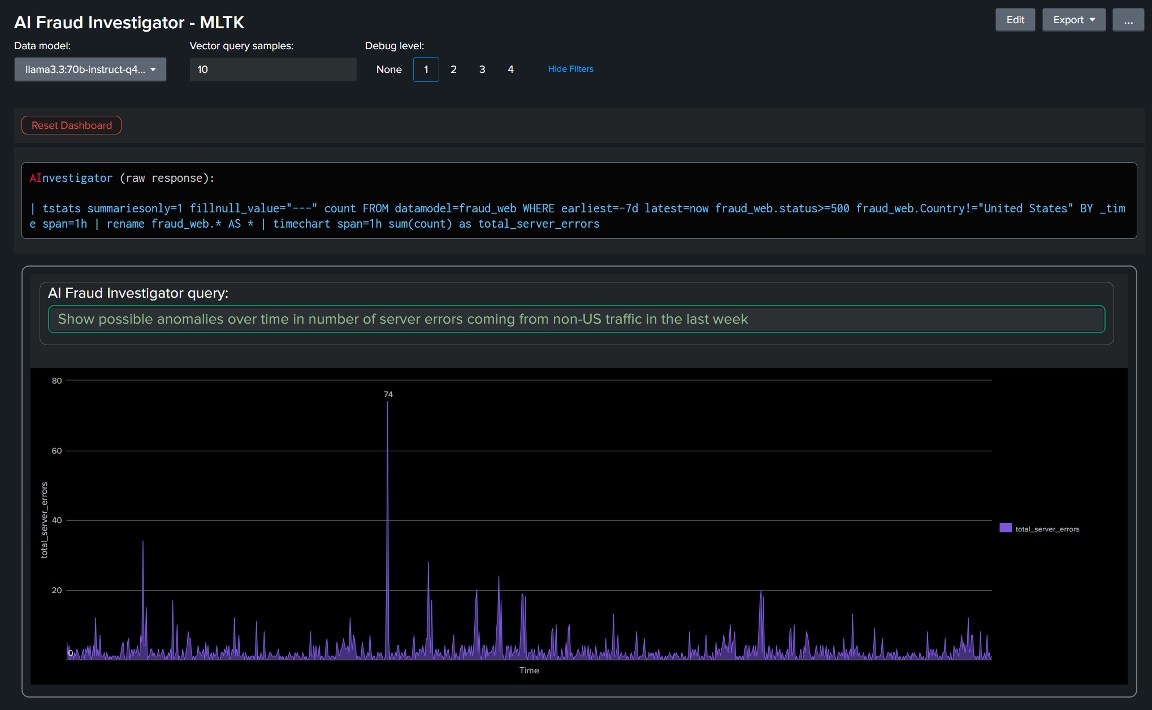

In the following example, a user asked to display anomalies in server errors over time, specifically filtering for non-US traffic. The AI agent generated the appropriate SPL query, selected a suitable Timechart visualization, validated the query, and automatically executed it, presenting results seamlessly.

We can also peek into the raw response from the AI investigator agent to see the actual SPL query that the LLM generated in response to the user’s question:

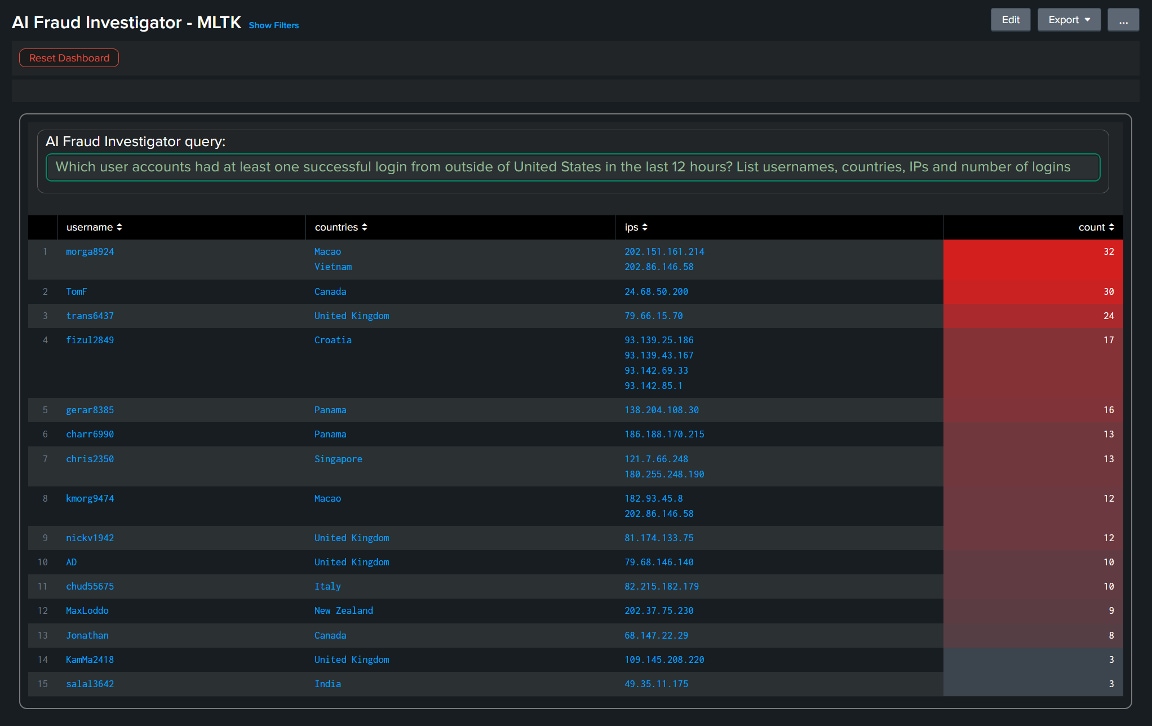

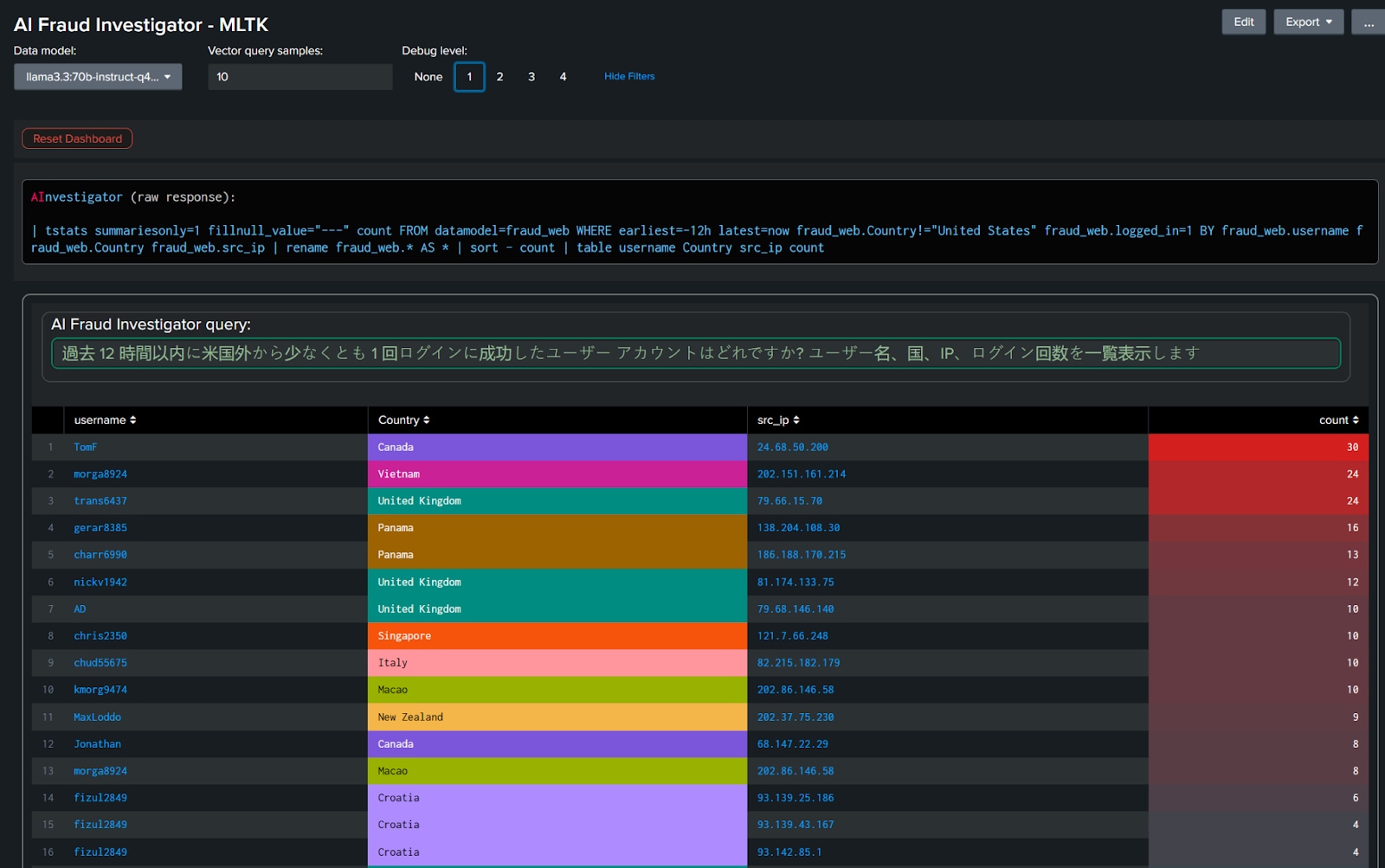

In another example, the user requested identification of accounts potentially exposed to account takeover attempts (logins from non-US IP addresses) within web server logs of a US-based credit union:

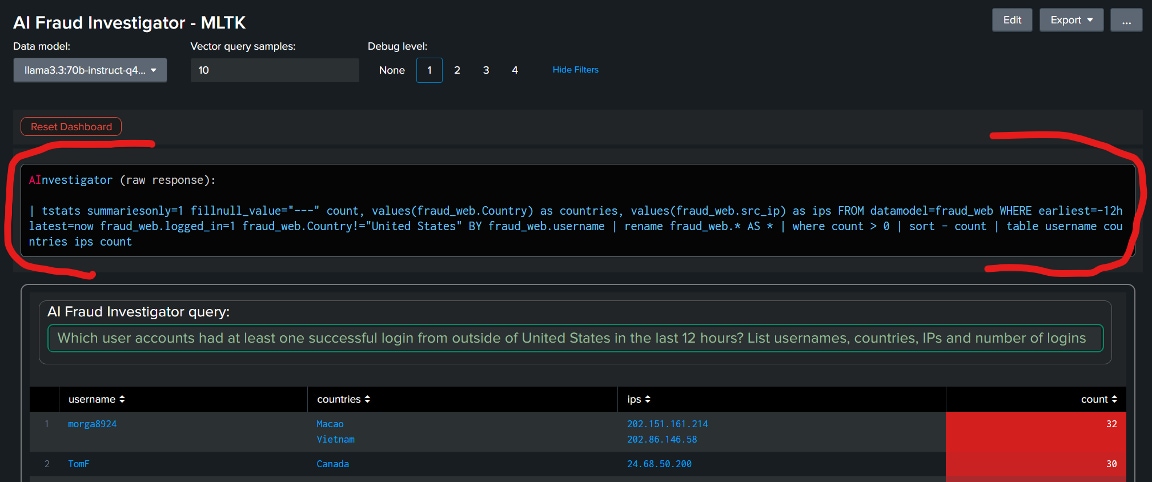

Here is the actual query that the LLM generated in response to the user's question:

The query is syntactically correct, logically valid, and returns all the fields requested by the user.

In the example below, we translated the user's question into Japanese and ran it again with no other changes, and the results were essentially the same. In this case, the SPL query differed slightly from the English variant (because the LLM interprets the semantic meaning of each request slightly differently) but remained valid and answered the user's request:

How are these functions implemented?

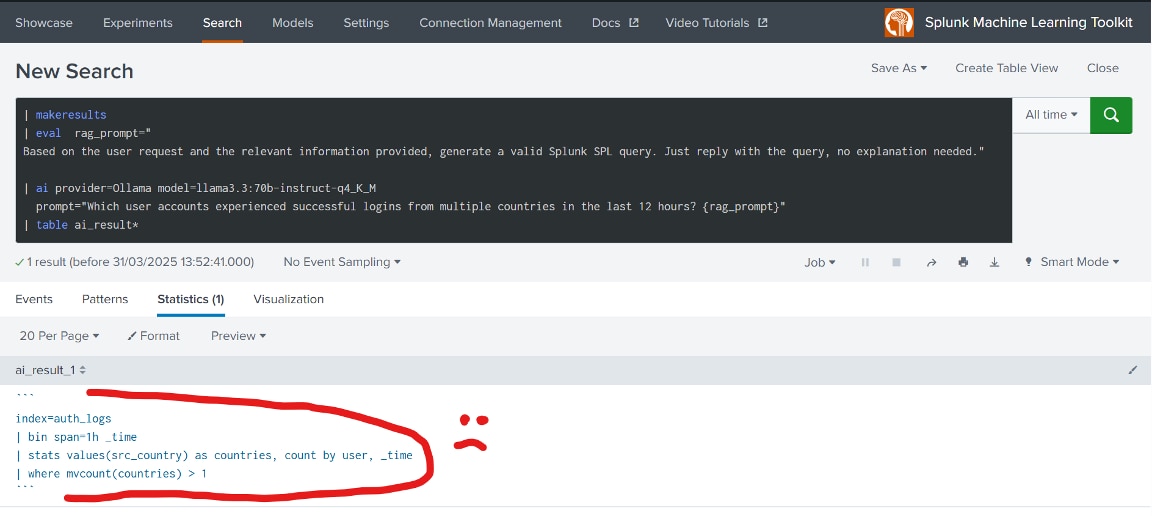

The key point is to leverage the LLM's ability to learn from well-structured examples. With the latest MLTK installed and configured, you can test this capability directly from the Splunk search prompt. Consider this query to list accounts with multiple logins from different countries:

The LLM generated a query that appeared valid but failed to execute properly. Without context, the LLM had no way of knowing which data sources and fields were available, which highlights the necessity of providing that context.

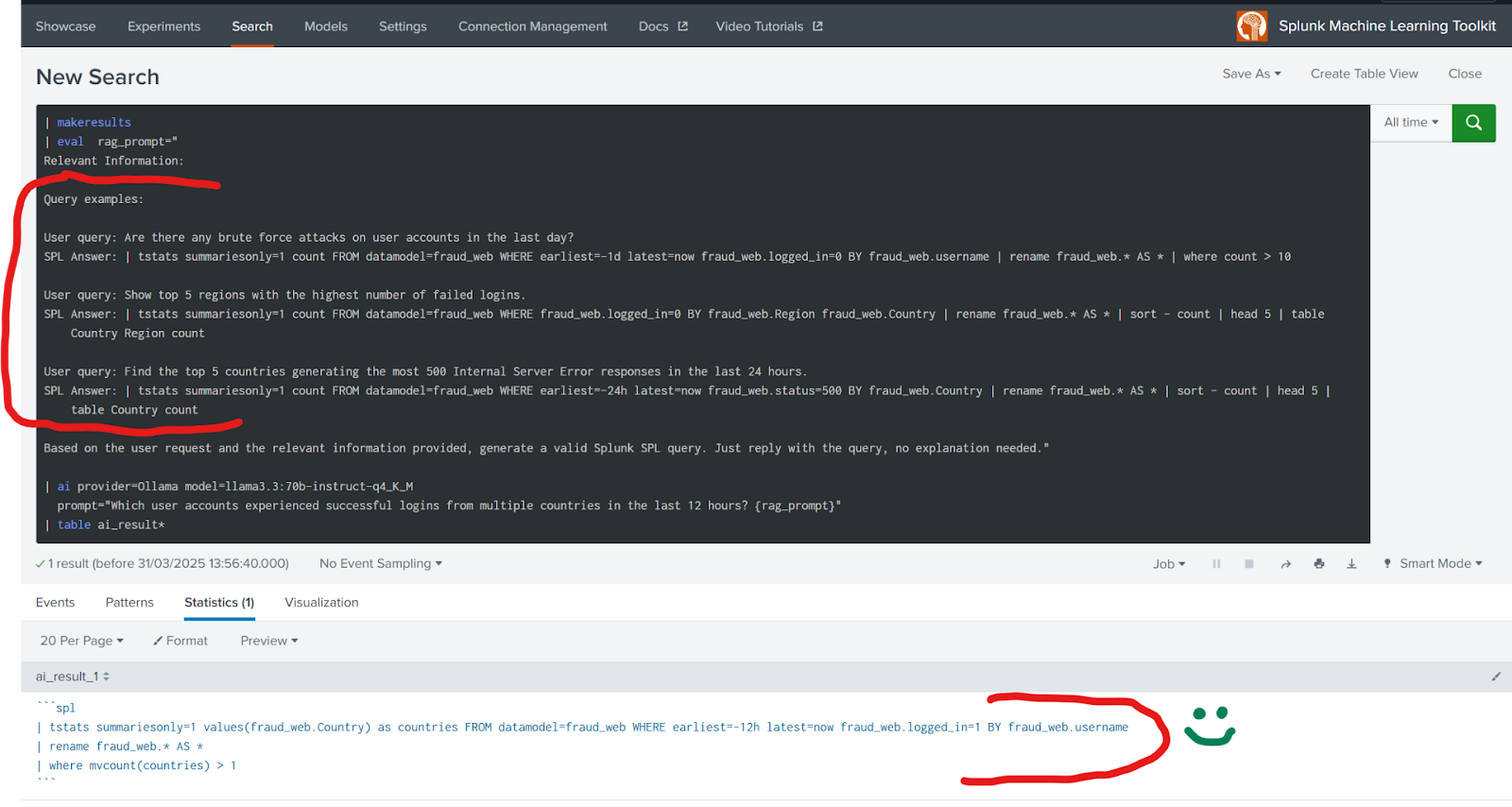

Now, consider the second example. The generated SPL query was valid:

The only difference is that we provided important context, RAG-style, right within the prompt. Given just three working examples that use the actual data source and its actual fields, the LLM produced a syntactically correct and logically sound SPL query.
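As a rough illustration of this few-shot approach, the Python sketch below assembles a prompt from a data source description and three example question/SPL pairs. The `web_logs` index, its field names, the example queries, and the `build_few_shot_prompt` helper are all hypothetical; they are not the prompt used in the POC.

```python
from dataclasses import dataclass

@dataclass
class SplExample:
    question: str
    spl: str

def build_few_shot_prompt(question: str, examples: list[SplExample], data_source: str) -> str:
    """Prepend the data source description and worked examples to the user's question."""
    lines = [
        "You translate analyst questions into Splunk SPL.",
        f"Use only this data source and its fields: {data_source}",
        "",
    ]
    for example in examples:
        lines += [f"Question: {example.question}", f"SPL: {example.spl}", ""]
    # End with an open "SPL:" slot for the model to complete.
    lines += [f"Question: {question}", "SPL:"]
    return "\n".join(lines)

# Hypothetical data source and examples, for illustration only.
DATA_SOURCE = "index=web_logs (fields: _time, user, clientip, status, action)"
EXAMPLES = [
    SplExample("Count logins per user",
               "index=web_logs action=login | stats count by user"),
    SplExample("Show server errors over time",
               "index=web_logs status>=500 | timechart count"),
    SplExample("List logins from outside the US",
               'index=web_logs action=login | iplocation clientip '
               '| where Country!="United States" | table _time user clientip Country'),
]

prompt = build_few_shot_prompt(
    "List accounts with logins from more than one country", EXAMPLES, DATA_SOURCE
)
```

Because the examples name the real index and fields, the model has concrete patterns to imitate instead of guessing at the schema.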

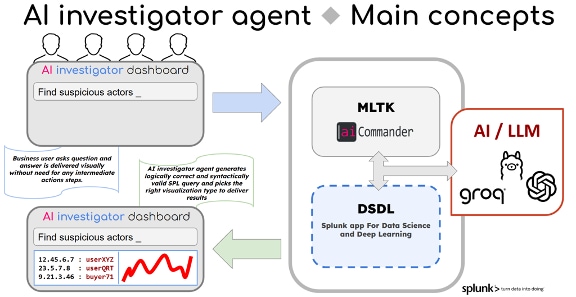

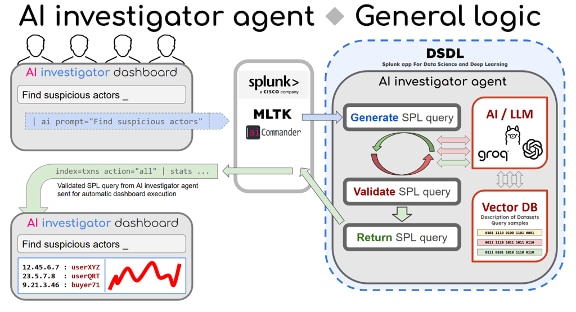

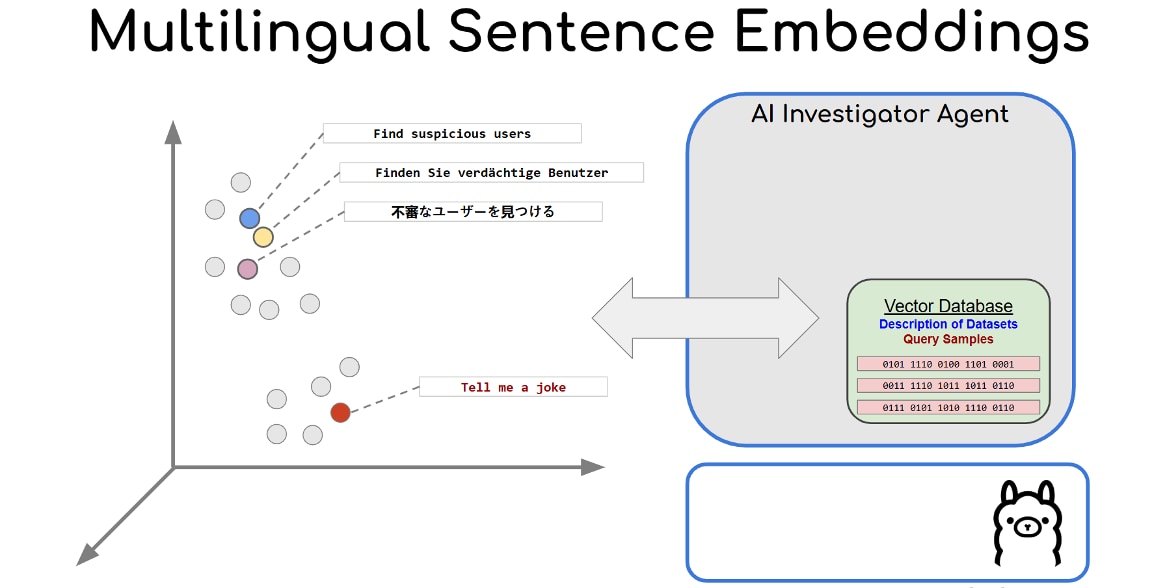

Here is a more complete architecture of the actual implementation of the AI investigator agent:

The Vector DB stores descriptions of all available data sources, including descriptions of available fields for each.

The Vector DB also stores sample SPL queries (about 50 in total in this POC implementation).

When a user types a request, MLTK / AI Commander routes it to the AI investigator agent. The agent queries the Vector DB to match the request to the most relevant data source and to retrieve the most relevant sample SPL queries. These results augment the user's request before it is passed to the LLM.
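The retrieval step can be sketched in Python as follows. This is a simplified stand-in, not the POC's code: a word-count vector replaces a real embedding model, an in-memory list replaces the vector database, and the data sources, sample queries, and helper names are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system would call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def top_k(query: str, items: list, k: int, text_of=str) -> list:
    """Return the k items whose text is most similar to the query."""
    q = embed(query)
    return sorted(items, key=lambda item: cosine(q, embed(text_of(item))), reverse=True)[:k]

# Hypothetical store contents: data source descriptions and (description, SPL) samples.
DATA_SOURCES = [
    "web_logs: web server access logs with fields user, clientip, status, action",
    "wire_transfers: outgoing wire transfers with fields account, amount, beneficiary",
]
SPL_SAMPLES = [
    ("count logins per user", "index=web_logs action=login | stats count by user"),
    ("server errors over time", "index=web_logs status>=500 | timechart count"),
    ("total wire amount per account", "index=wire_transfers | stats sum(amount) by account"),
]

question = "show logins per user from the web server logs"
source = top_k(question, DATA_SOURCES, 1)[0]
samples = top_k(question, SPL_SAMPLES, 2, text_of=lambda sample: sample[0])

# Augment the user's request with the retrieved context before calling the LLM.
augmented_prompt = "\n".join(
    [f"Data source: {source}"]
    + [f"Example ({desc}): {spl}" for desc, spl in samples]
    + [f"Question: {question}", "SPL:"]
)
```

Word counts only match exact tokens, so this toy version would not handle translated questions; that property comes from a real multilingual embedding model.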

Thanks to the nature of embedding models and cosine similarity, user queries translated into other languages preserved their semantic meaning, so the system generated valid SPL queries regardless of the input language.

For this proof-of-concept implementation, the Pydantic AI framework was used to streamline the process. It also facilitated automatic SPL validation and handled retries when necessary to enforce valid output.
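The validate-and-retry pattern can be sketched in plain Python. This is not the Pydantic AI API and not a real SPL parser: `validate_spl` applies a few toy checks, `fake_llm` stands in for a model call, and every name here is an assumption made for illustration.

```python
from typing import Callable, Optional

# Hypothetical deny-list of commands the agent should never emit.
FORBIDDEN_COMMANDS = {"delete", "outputlookup", "collect"}

def validate_spl(spl: str) -> Optional[str]:
    """Return None if the query passes these toy checks, else an error message."""
    text = spl.strip()
    if not text:
        return "query is empty"
    if text.count("(") != text.count(")"):
        return "unbalanced parentheses"
    if text.count('"') % 2:
        return "unbalanced double quotes"
    # Naive split: does not account for pipes inside quoted strings.
    for stage in text.split("|")[1:]:
        words = stage.split()
        if not words:
            return "empty pipeline stage"
        if words[0].lower() in FORBIDDEN_COMMANDS:
            return f"command not allowed: {words[0]}"
    return None

def generate_with_retries(
    question: str,
    generate: Callable[[str, Optional[str]], str],
    max_attempts: int = 3,
) -> str:
    """Call the generator until its output validates, feeding back the last error."""
    feedback = None
    for _ in range(max_attempts):
        spl = generate(question, feedback)
        feedback = validate_spl(spl)
        if feedback is None:
            return spl
    raise ValueError(f"no valid SPL after {max_attempts} attempts: {feedback}")

def fake_llm(question: str, feedback: Optional[str]) -> str:
    """Stand-in for an LLM: returns a broken query first, then a corrected one."""
    if feedback is None:
        return "index=web_logs | where (status>=500 | timechart count"
    return "index=web_logs status>=500 | timechart count"

result = generate_with_retries("show server errors over time", fake_llm)
```

Passing the validation error back to the generator gives the model a chance to correct its own output instead of repeating the same mistake.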

Once an SPL query successfully passes all validation checks, it is sent back to the dashboard along with recommended visualization methods. The dashboard then executes the query and renders the results.
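One way to picture the response handed back to the dashboard is a small structured payload. The field names, the set of allowed visualization types, and the `InvestigatorResponse` class below are assumptions for illustration; the POC's actual response schema is not described here.

```python
import json
from dataclasses import asdict, dataclass

# Hypothetical set of chart types the dashboard knows how to render.
ALLOWED_VISUALIZATIONS = {"timechart", "table", "bar", "single_value"}

@dataclass
class InvestigatorResponse:
    spl: str
    visualization: str

    def __post_init__(self) -> None:
        # Reject chart types the dashboard cannot render.
        if self.visualization not in ALLOWED_VISUALIZATIONS:
            raise ValueError(f"unsupported visualization: {self.visualization}")

response = InvestigatorResponse(
    spl="index=web_logs status>=500 | timechart count",
    visualization="timechart",
)

# Serialized form that a dashboard could parse before running the query.
payload = json.dumps(asdict(response))
```

Constraining the visualization to a known set means a malformed model response fails early rather than at render time.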

For non-technical fraud investigators, the ideal scenario is clear: ask questions naturally in their domain-specific business language and receive intuitive answers. The LLM-driven system should interpret these questions, autonomously generate and execute the appropriate queries, and subsequently present insights visually or through straightforward, actionable narratives. This method significantly reduces the cognitive and operational burden on investigators, enabling them to focus more effectively on their primary role—interpreting results and deciding actions.

Organizations committed to effectively combating fraud, waste, and abuse must prioritize simplifying data analytics, making powerful insights accessible, actionable, and impactful for all investigators, regardless of their technical proficiency. This strategic adoption of AI is key to staying ahead of increasingly sophisticated fraudsters and protecting the integrity, efficiency, and public trust in both the private and public sectors.

Read more blog posts about Splunk MLTK here.

Download Splunk Machine Learning Toolkit (MLTK).

Download Splunk App for Fraud Analytics.
